In her Contextual Integrity theory of privacy, Helen Nissenbaum defines privacy as “appropriate flows of information” where the appropriateness is defined by the context and its contextual informational norms. Contextual Integrity is a paradigm shift away from the Fair Information Practice Principles, which emphasize a model of privacy focused on the control of personal information. Framing privacy through the lens of control has led to a notice & consent model of privacy, which many have argued fails to actually protect our privacy. Rather than focusing on the definition of privacy as the control of private information, Contextual Integrity focuses on appropriate flows of information relative to the stakeholders within a specific context who are trying to achieve a common purpose or goal.

Nissenbaum’s Contextual Integrity theory of privacy reflects the context-dependent nature of privacy. She was inspired by social theories like Michael Walzer’s Spheres of Justice, Pierre Bourdieu’s field theory, as well as what others refer to as domains. These are ways of breaking up society into distinct spheres that have their “own contextual information norms” for how data are exchanged to satisfy shared intentions. Nissenbaum resists choosing a specific social theory of context, but has offered the following definition of context in one of her presentations.

Contexts – differentiated social spheres defined by important purposes, goals, and values, characterized by distinctive ontologies, roles and practices (e.g. healthcare, education, family); and norms, including informational norms — implicit or explicit rules of info flow.

Nissenbaum emphasized that informational exchanges should help to achieve the mutual purposes, goals, and values of that specific context. As an example, you would be more than willing to provide medical data to your doctor for the purpose of evaluating your health and helping you heal from an ailment. But you may be more cautious in providing that same medical information to Facebook, especially if it was unclear how they intended to use it. The intention and purpose of these contexts help to shape the underlying information norms that help people understand what information is okay to share given the larger context of that exchange.

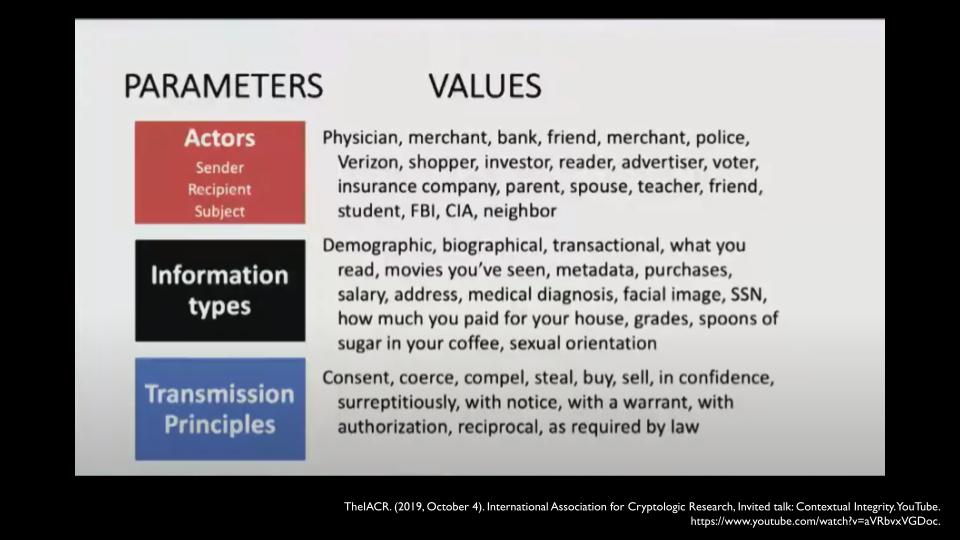

Nissenbaum specifies that these contextual information norms have five main parameters that can be used to form rules that help determine whether or not privacy has been preserved.

(Image credit: Cornell)

The first three are the stakeholder actors: the sender, the recipient, and the subject of the data exchange (the person the information is about).

Then there are the different types of information that are being exchanged, whether it’s physiological data, biographical data, medical information, financial information, etc.

Finally, there are the transmission principles that facilitate an exchange, which cover a wide range: informed consent, buying or selling, coercion, asymmetrical adhesion contracts or reciprocal exchange, via a warrant, stolen, surreptitiously acquired or with notice, or as required by law.

Notice and consent is embedded within the framework of Contextual Integrity through the transmission principle, which includes informed consent or adhesion contracts. But Nissenbaum says that in order for contextual integrity to be preserved, all five of these parameters need to be properly specified.
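To make the five-parameter structure a bit more concrete, here is a minimal Python sketch of a single information flow. The class name `InformationFlow`, its field names, and the helper methods are hypothetical and chosen only for illustration; they do not come from Nissenbaum's work. The sketch shows just one idea from above: a flow can't be evaluated until all five values are filled in.

```python
from dataclasses import dataclass, fields
from typing import List, Optional


@dataclass(frozen=True)
class InformationFlow:
    """One information flow described by the five parameters (hypothetical names)."""
    sender: Optional[str] = None
    recipient: Optional[str] = None
    subject: Optional[str] = None                 # who the information is about
    information_type: Optional[str] = None
    transmission_principle: Optional[str] = None

    def missing_parameters(self) -> List[str]:
        """Return the names of any parameters that were left unspecified."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]

    def is_fully_specified(self) -> bool:
        """True only when all five parameters have a value."""
        return not self.missing_parameters()


# A flow where every parameter is stated.
doctor_visit = InformationFlow(
    sender="patient",
    recipient="physician",
    subject="patient",
    information_type="medical information",
    transmission_principle="in confidence",
)

# A flow described only by who receives what; three parameters are unknown.
vague_flow = InformationFlow(
    recipient="consumer technology company",
    information_type="medical information",
)

print(doctor_visit.is_fully_specified())
print(vague_flow.missing_parameters())
```

In this sketch the second flow reports its sender, subject, and transmission principle as missing, which is one way to picture why a description like "the company gets your medical information" is not enough on its own to judge whether the flow is appropriate.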

The final step within the Contextual Integrity theory of privacy is to evaluate whether or not the informational norm is “legitimate, worth defending, and morally justifiable.” This includes looking at the stakeholders and analyzing who may be harmed and who is benefitting from any informational exchange. The next part is looking to see whether or not it undermines any political or human rights principles, such as by diminishing the freedom of speech. The last part is evaluating how the information exchange is helping to serve the contextual domain’s function, purpose, and value.

Overall, Nissenbaum’s Contextual Integrity theory of privacy provides a really robust definition of privacy and a foundation to build upon. She collaborated with some computer scientists who formalized “some aspects of contextual integrity in a logical framework for expressing and reasoning about norms of transmission of personal information.” They were able to formally represent some of the information flows in legislation like HIPAA, COPPA, and GLBA in their logical language, meaning that it could be possible to create computer programs that could enforce compliance.
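As a rough picture of what checking a flow against a context's norms might look like in code, here is a toy Python sketch. It is not the logical language those computer scientists developed, and the example norm is a simplified illustration, not a statement of what HIPAA actually requires. All of the names (`Flow`, `Norm`, `matches`, `flow_is_appropriate`) and the `"*"` wildcard are assumptions made for this sketch.

```python
from typing import Iterable, NamedTuple


class Flow(NamedTuple):
    """An information flow with the five parameters (hypothetical names)."""
    sender: str
    recipient: str
    subject: str
    information_type: str
    transmission_principle: str


# In this sketch a norm has the same five slots as a flow.
# "*" acts as a wildcard slot, which is an assumption of this toy model.
Norm = Flow


def matches(norm: Norm, flow: Flow) -> bool:
    """A norm covers a flow when every slot is equal or the norm slot is '*'."""
    return all(n == "*" or n == f for n, f in zip(norm, flow))


def flow_is_appropriate(flow: Flow, norms: Iterable[Norm]) -> bool:
    """Treat a flow as appropriate only if some norm of the context permits it."""
    return any(matches(norm, flow) for norm in norms)


# Illustrative norm only: a patient may share their own medical
# information with their physician in confidence.
healthcare_norms = [
    Norm("patient", "physician", "patient", "medical information", "in confidence"),
]

to_doctor = Flow(
    "patient", "physician", "patient", "medical information", "in confidence"
)
to_platform = Flow(
    "patient", "social media company", "patient", "medical information",
    "notice and consent",
)

print(flow_is_appropriate(to_doctor, healthcare_norms))    # True
print(flow_is_appropriate(to_platform, healthcare_norms))  # False
```

The second flow is rejected simply because no listed norm covers it. A check like this only captures whether a flow conforms to stated norms; the further question of whether a norm is “legitimate, worth defending, and morally justifiable” is a human judgment that this sketch does not attempt to model.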

Even though Contextual Integrity is very promising as a replacement for existing privacy legislation, there are some potential limitations. The theory leans heavily upon normative standards within given contexts, but my observation with immersive XR technologies is that not only does XR blur and blend existing contexts, but it is also creating new precedents of information flows that go above and beyond existing norms. Here’s my state of XR privacy talk from the AR/VR Association’s Global Summit that provides more context on these new physiological information flows and still relatively underdeveloped normative standards of what data are going to be made available to consumer technology companies and what might be possible to do with this data:

The blending and blurring of contexts could lead to a context collapse. As these consumer devices become able to capture and record medical-grade data, neuro-technologies and XR technologies are combining the information norms of the medical context with the consumer technology context. This is something that’s already been happening over the years with different physiological tracking, but XR and neuro-technologies will start to create new ethical and moral dilemmas with the types of intimate information that will be made available. Here’s a list of physiological and biometric sensors that could be integrated within XR within the next 5-20 years:

It is also not entirely clear how Contextual Integrity would handle the implications of inferred data like what Ellysse Dick refers to as “computed data” or what Brittan Heller refers to as “biometric psychographic data.” There is a lot of really intimate information that can be inferred and extrapolated about your likes and dislikes, context-based preferences, and more essential character, personality, and identity from observed behaviors combined with physiological reactions and full situational awareness of what you’re looking at and engaged with inside of a virtual environment (or with eye tracking plus egocentric data capture within AR). Behavioral neuroscientist John Burkhardt details some of these biometric data streams, and the unethical threshold between observing and controlling behaviors.

Here’s a map of all of the types of inferred information that can come from image-based eye tracking from Kröger et al.’s article “What Does Your Gaze Reveal About You? On the Privacy Implications of Eye Tracking”:

It is unclear to me how Nissenbaum’s Contextual Integrity theory of privacy might account for this type of computed data, biometric psychographic data, or inferred data. It doesn’t seem like inferred data fits under the category of information types. Perhaps there needs to be a new category of inferred data that could be extrapolated from data that’s transmitted or aggregated. It’s also worth looking at the taxonomy of data types from Ellysse Dick’s work on bystander privacy in AR.

It’s also unclear whether or not this would need to be properly disclosed or whether the data subject has any ownership rights over this inferred data. The ownership or provenance of the data also isn’t fully specified within the sender and recipient of the data, especially if there are multiple stakeholders involved. It’s also unclear to me how the intended use of the data is properly communicated within contextual integrity, and whether or not this is already covered within the “subject” portion of actors.

Some of these open questions could be answered when evaluating whether or not an informational norm is “legitimate, worth defending, and morally justifiable.” Because VR, AR, and neuro-technologies are so new, these exchanges of information are also very new. Research neuroscientists like Rafael Yuste have identified some fundamental Neuro-Rights of mental privacy, agency, and identity that are potentially threatened because of the types of neurological and physiological data that will soon be made available within the context of consumer neuro-tech devices like brain-computer interfaces, watches with EMG sensors, or other physiological sensors that will be integrated into headsets like the Project Galea collaboration between OpenBCI, Valve Software, and Tobii eye tracking.

I had a chance to talk with Nissenbaum to get an overview of her Contextual Integrity theory of privacy, but we had limited time to dig into some of the aspects of mental privacy. She said that the neuro-rights of agency and identity are significant ethical and moral questions that are beyond the scope of what her privacy framework is able to properly evaluate or address. She’s also generally skeptical about relying on treating privacy as a human right because you’ll ultimately need a legal definition that allows you to exercise that legal right, and if it’s still contextualized within the paradigm of notice and consent, then we’ll be “stuck with this theory of privacy that loads all of the decision onto the least capable person, which is the data subject.”

She implied that even if there are additional human rights laws at the international level, as long as there is a consent loophole like there is within the existing adhesion contract frameworks of notice and consent, then it’s not going to ensure that you can exercise that right to privacy. This likely means that US tech policy folks may need to use Contextual Integrity as a baseline to be able to form new state or federal privacy laws, which is where privacy law could be enforced and the rights to privacy be asserted. There’s still value in having human rights laws shape regional laws, but there are not many options to enforce the right to privacy through the mechanisms of international law.

Finally, I had a chance to show Nissenbaum my taxonomy of contexts that I’ve been using in the context of my XR Ethics Manifesto that maps out the landscape of ethical issues within XR. I first presented this taxonomy of the domains of human experience at the SVVR conference on April 28, 2016, as I was trying to categorize the range of answers of the ultimate potential of VR into different industry verticals or contexts.

We didn’t have time to fully unpack all of the similarities to some of her examples of contexts, but she said that it’s in the spirit of other social theorists who have started to map out their own theories for how to make sense of society. I’m actually skeptical that it’s possible to come up with a comprehensive or complete mapping of all human contexts. But I’m sharing it here in case it might be able to shed any additional insights into how something like Nissenbaum’s Contextual Integrity theory of privacy could be translated into a Federal Privacy Law framework, and whether it’s possible or feasible to comprehensively map out the purposes, goals, and values of each of these contexts, appropriate information flows, and stakeholders for each of them.

It’s probably an impossible task to create a comprehensive map of all contexts, but it could provide some helpful insights into the nature of VR and AR. I think context is a key feature of XR. With augmented reality, you’re able to add additional contextual information on top of the center of gravity of an existing context. And with virtual reality, you’re able to do a complete context shift from one context to another one. Having a theoretical framework for context may also help develop things like the “contextually-aware AI” that Facebook Reality Labs is currently working on. And if VR has the capability to represent simulations of each of these contexts, then a taxonomy of contexts could help provoke thought experiments about how existing contextual informational norms might be translated and expressed within virtual reality as they’re blended and blurred with the emerging contextual norms of XR.

I’m really impressed with the theory of Contextual Integrity, since there are many intuitive aspects about the contextual nature of privacy that are articulated through Nissenbaum’s framework. But it also helps to elaborate how Facebook’s notice and consent-based approach to privacy in XR does not fully live up to their Responsible Innovation principles of “Don’t Surprise People” or “Provide Controls that Matter.” Not only do we not have full agency over information flows (since they don’t matter enough for Facebook to provide them), but there’s no way to verify whether or not the information flows are morally justifiable, since there isn’t any transparency or accountability around these information flows or around all of the intentions and purposes that Facebook has for the data that are made available to them.

Finally, I’d recommend folks check out Nissenbaum’s 2010 book Privacy in Context: Technology, Policy, and the Integrity of Social Life for a longer elaboration of her theory. I also found these two lectures on Contextual Integrity particularly helpful in my preparation for my interview with her on the Voices of VR podcast: Crypto 2019 (August 21, 2019) and the University of Washington Distinguished Speaker Series at the Department of Human Centered Design & Engineering (March 5, 2021). And here’s the video where I first discovered Nissenbaum’s Contextual Integrity theory of privacy, where she was in conversation with philosophers of privacy Dr. Anita Allen and Dr. Adam Moore at a Privacy Conference: Law, Ethics, and Philosophy of End User Responsibility for Privacy that was recorded on April 24, 2015 at the University of Pennsylvania Carey Law School.

This is a listener-supported podcast through the Voices of VR Patreon.

Music: Fatality

Rough Transcript

[00:00:05.452] Kent Bye: The Voices of VR Podcast. Hello, my name is Kent Bye, and welcome to The Voices of VR Podcast. So in this series of looking at the ethical and privacy implications of virtual and augmented reality, privacy and the implications of privacy is probably one of the biggest open gaps. There's not really a good legal framework to really approach privacy. We don't really have a good definition that's universally accepted for privacy. And this whole notice and consent model for controlling information that's private is completely broken. You sign an adhesion contract of terms of service and privacy policy that essentially gives the companies carte blanche access to do whatever they want. They may try to define what they are or are not going to do with your data within a blog post, but that blog post is not legally binding, and you're still left with the terms of service that allows them to change their mind later. We're in this system, as consumers, even if we are making these decisions, we may not actually be the best people to be making these decisions because there's such an asymmetry of power when it comes to these companies that have so much information on us. These are completely new contextual norms that are emerging here, and we're still trying to navigate that. So you may have heard me talk about Helen Nissenbaum and her theory of contextual integrity, which is a completely different paradigm on privacy. She makes it pretty simple. The simplest I can state it is that privacy is the appropriate flow of information. Now, she adds on to that, that it's also context dependent. So there's different informational norms depending on the context. And then you have to see what is appropriate depending on that context, defined by whatever the purposes and intentions of that context are. And then the next step is that there's information that's going back and forth and being transmitted. 
So there's the subject and the content of that data, there's the sender and receiver, there's the type of data that you're sending. In this case, with virtual and augmented reality, there's all sorts of biometric and physiological data. And then there's a transmission principle, which could be everything from informed consent, you know, citing these adhesion contracts, or it could be through coercion or buying or stealing, information given in confidence, with a warrant. There's lots of different ways in which the data are being transmitted. So those are the five parameters that are talked about, with the subject, sender, recipient, the information type, and the transmission principle. And then finally, there's this aspect of whether or not it's morally justifiable or appropriate, and that's sort of like it meets your expectations and both involved parties of this information flow are having their intentions being met, and there's certain normative standards that come up around those exchanges of information. So this is a framework that's actually really robust, and I think really describes the contextual nature of privacy, but also tries to break down these five parameters. If those five parameters aren't being met, then there's not really contextual integrity. You've kind of lost contextual integrity. What I would argue is that the way that we have right now with these existing systems and these terms of service and privacy policies, we're losing this contextual integrity. It's not really meeting the standards for how to best meet the consumer expectations. You know, the number one responsible innovation principle from Facebook is don't surprise people. And I think in some ways, it's trying to meet this contextual integrity, which is that we are expecting what type of transmission is happening when we're exchanging different data. But we don't really have any way to verify that, no way to have any sort of audit trail. 
And it's, in a lot of ways, the context under which some of these data are being used, there's no way for us to opt out of that. It's just like an all or nothing. Either you take it or leave it, and that's a power asymmetry that's being set up here. So I'm really excited to really dig in and do a bit of a primer of contextual integrity just to get the conversation going and to start to unpack how this starts to apply with virtual reality. Is this a robust enough framework to really handle what we need in terms of a better system for these immersive technologies and neurotechnologies? Or are there additional things that need to be added onto this theory in order to really take into account all the specific considerations when it comes to, say, biometric psychography or computed data? So that's what we're covering on today's episode of the Voices of VR podcast. So this interview with Helen Nissenbaum happened on Tuesday, June 8th, 2021. So with that, let's go ahead and dive right in.

[00:04:10.446] Helen Nissenbaum: I'm Helen Nissenbaum. I'm a professor at Cornell Tech, which is in New York City. And I run something called the Digital Life Initiative, which looks at ethics, politics, policy and quality of life in digital societies. And this is really an upshot of the issues that I've been thinking about for many years. Privacy has been a preoccupation alongside other kinds of issues that have, in my view, ethical importance in digital societies and maybe as a result of the development of digital technology. But everything came together with privacy. There were incredible things going on with the technology that caused people to freak out, to use a technical term. And I was so curious to understand what it was. I'm trained as an analytic philosopher, taken on the more technical side of things, mathematics and a little bit of computer science, really just programming and so on. And I could see that this technology was doing things that were resulting in systems that were causing people to be fearful and surprised, and they would label this reaction with the term privacy. And as far as I could tell, nobody really had a good definition for it. So this term, the things that were happening were technically interesting, but they were also conceptually interesting, because nobody could, in my view, the conversation was really confusing. So it was, from that point of view, you could say conceptually or philosophically really interesting. And then at the same time, it was ethically interesting, because people asserted privacy as a right, as something that should be protected. And this then had dual implications, both for the law and regulation, and also for technology. And I was really interested when technologists started being self-critical and thinking about what they were doing in terms of the technology. So that was what was so gripping about privacy is that it had all these dimensions to it. 
There are many other really important ethical issues and policy issues, but I don't think they have as many of the dimensions as privacy does. So it felt to me that even if I could just clarify the landscape a little bit, I could be doing something valuable for society.

[00:07:00.391] Kent Bye: Yeah, well, I came across a video online that was at a privacy conference at the University of Pennsylvania that featured yourself as well as Dr. Anita Allen, as well as Dr. Adam Moore. And I really appreciated that to really dig into the philosophy of privacy because I think a lot of the discussion and discourse is all around the fair information practice principles from 1973, and then the privacy policy and all the ways that the FTC is implementing it. It's all this model of privacy as the control of private information. Yes. And more of this libertarian approach where you could sort of consent over to buy, sell, or trade your privacy. Dr. Anita Allen is taking maybe a little bit more of a paternalistic approach of trying to say, hey, maybe we should treat this as a human right. That's more of an organ that we should maybe have more protections around what you can and cannot do with this data. And I see that the contextual integrity takes a completely different paradigm of saying that it's all about the appropriate flow of information, but it's also based upon the context. Intuitively, your approach not only feels like what is actually true in terms of the contextual dimension of it, but also the appropriate flow, what's appropriate based upon the normative standards. That's to be debated, but I guess the laws that are out there are already, at least in the United States, doing that for health and education and children and your entertainment, different aspects where there's already laws around some of these different contextual dimensions, but you're coming in with kind of a different take rather than trying to see information as something that you're controlling and not having flow, but you're really emphasizing the flow, but also the contextual dimensions and whether or not it's appropriate. So maybe talk about how you were resisting against the mainstream to be able to start to develop this.

[00:08:36.267] Helen Nissenbaum: Yes, yes. So I'm resisting the mainstream, but I'm also noticing that people, take Anita Allen. I admire her thinking tremendously. And I hear her talking about privacy. And I say, there's some really incredible insights into what she's saying. And I hear other colleagues, I mean, that's a big community, a really excellent community, because these folks, they're concerned. You know, even the Code of Fair Information Practice principles, there are great insights in it, but they don't pull together as a total story. And so what I was pleased to find in contextual integrity, once I started filling out the different pieces of it, is that it can incorporate some of what's really important, what I consider to be incredibly valuable in these other approaches. So in contextual integrity, for example, let's take this idea of privacy as control over information about ourselves. Actually, usually privacy means that I control my information, which in itself is a problem. But there's just no doubt that that idea of control is so much part of the way people think about privacy. When you have a commitment or belief that's so strong like that, you can't just deny it. I want to frame it properly. What I'm saying is when so many people are saying this, they're identifying something that's important and you can't simply deny it because so many people are saying it. Now, in my view, there are those people who say it with conviction because they think it's right, and there are those people who are saying it because it's expedient and they can exploit it. And so let's set those people aside for a sec. The way contextual integrity recognizes control is as one of the parameters, which is the transmission principle. 
So it says that in certain circumstances with certain values for the parameters, we can get to this, so it doesn't matter if people aren't following, one of the constraints on the flow of information from party A to party B is the consent of the data subject. Let's say the student, or the patient, or the voter, or whoever it is. And those parties we identify in contextual terms, so we identify them as acting in certain capacities, and those capacities are part of what constitutes these different societal contexts. So it may be the case that if you look at a lot of data flows out there and a lot of the areas in which information technology, digital media platforms and so forth, a lot of those domains in which technology has entered are socializing, if you will. The transmission principle just so happens to be dominated by control. But if you think about other contexts, like imagine people in a court of law, you have a witness on the witness stand and the prosecutor is asking a question, that person can't simply refuse to answer the question, otherwise they're in contempt of court. So the conveyance of information in those circumstances, their control is not like, where were you, you know, Mr. Bye, on blah, blah, and you just say, I refuse to answer that question. Of course, you can plead the fifth, that's a whole other thing. But once you're there sitting on the stand, you are compelled to answer the question. You can't say, I refuse to answer for reasons of privacy. You just don't have control in those circumstances. And nobody will, I don't think, say, oh, your privacy has been violated. Oh, that's a long answer.

[00:13:10.460] Kent Bye: Yeah. So the way that I think about it at least is when you go to a doctor, you give medical information because you want the doctor to help you. So if you have biometric devices that are measuring information, then you may not want Facebook to have that information because they might use it to exploit you, but you want to give that same information to the doctor. So it's not like you could just blanket say, we should put all stops to this transmission of this data because there is a contextual dimension there. And so you're trying to put forth this appropriate flow that is conforming with the norms, it's meeting the expectations of those norms, and that flow is kind of justified by it's legitimate, it's worth defending, and it's morally justifiable. So you have these exchanges of information, which I'd say is different than the existing ways that our privacy laws are. So maybe as a quick question, before we start to dive into your actual framework, has contextual integrity been applied to any laws?

[00:14:01.017] Helen Nissenbaum: Well, I don't know that anybody has devised laws while thinking about contextual integrity, but what we did find, and when I say we, there are really a number of people who've been helpful in developing contextual integrity in different areas. And this was a group of computer scientists who had developed a formal language for being able to express the norms of contextual integrity formally, which meant that, you know, if you could express these norms formally, you could even maybe program them into a computer system. They had wanted to show that this language that they had developed in order to express norms could be useful in a variety of settings, and so they looked at the HIPAA rules. And they found that actually, if you take the HIPAA rules, the HIPAA rules just happen to, a lot of them have that amazing structure of what I've called contextual informational norms. Again, I don't know that anyone who wrote the rules said, oh, let's see what the norms should look like. They just naturally came upon them, which was beautiful. Like that's exactly, because what I'm doing is not so much making up the norm structure, I'm trying to say, as a matter of fact, what is it that people need to know about a data flow in order to ascertain whether it respects privacy or doesn't? And the proposal of contextual integrity is that you really need to have values for these five parameters mentioned or fixed, otherwise you don't know enough about the flow. And what we would find then is that in HIPAA, so many of the rules actually do contain that structure, which was sort of like empirical evidence for the usefulness of that norm structure that contextual integrity embodies.

[00:16:04.913] Kent Bye: Yeah. So just to elaborate on those five aspects, the actors, and so the subject and the sender and the recipient, and then the type of data. So the context of what type of information and what the purpose of that data is, and then the transmission principle that you are giving. So whether or not it's by consent or coerced, and so those different principles. So what you're saying in some sense is that even though it's not explicitly defined, some of the existing laws, by their very nature, are already kind of following this formalization that you've come up with.

[00:16:33.489] Helen Nissenbaum: Yes, and I do actually think that the opposite holds: where we try and express rules that don't actually acknowledge the importance of these other parameters, you run into trouble. So laws that try to reduce everything to whether the data in question is sensitive or not sensitive are problematic from the contextual integrity point of view, because, and you just said it yourself, Kent, which is that you could take some information, let's say your heart rate, which your physician should have that information, and some people might call it sensitive. Maybe we would say Facebook shouldn't, but the brilliance of the way a lot of privacy regulation, and I just mean norms and expectations, has evolved in society is that we recognize, we don't say that the flow of your heart rate information to the physician, we don't say that that's a privacy violation. We don't even say that that means you have less privacy because now the physician has your heart rate. We say that's appropriate flow. But the brilliance, what I'm saying, of the evolution of many of these norms is that they recognize that in order for the patient, in this case, not to feel scared of having this information flow, we still need to put like a stopgap so that the physician, and it doesn't have to be like government imposed, but even the Hippocratic Oath, that's an ancient idea, that the physician understands that in order to do the best job, they need to have assurances of confidentiality of this data. But right now we're living in a moment in time where so many of the actors that are receiving information about us are really not constrained in any way. It's because we haven't understood privacy. We've said, well, as long as you can get people to click yes, you've done everything you need to do. Whereas if you think about these ancient norms that have evolved, you understand their intelligence. 
And now we're putting the burden on individuals to make these decisions one at a time when they can take such a long time to evolve.

[00:19:09.172] Kent Bye: Yeah. I think that's the heart of the technology as it stands, but as we move forward into virtual and augmented reality technologies and neurotechnologies, this whole concept of appropriate flow of what's appropriate or not appropriate, you're talking about, well, it depends on the normative standards of the culture. Well, we're also talking about emerging technology where there are no established norms and they're all being made up and it's already gone too far. And so they have the notice and consent. I kind of think of it as a form of digital colonization, where if you have any device that's recording anything, all of a sudden you're seizing control over that data. And then whoever's data it is, they have no transparency, no accountability and no paper trail or audit or no way to actually do any checks and balances as to whether or not there's any appropriate use of that data or not. So it feels like we're in this space with notice and consent, with being able to sign the terms of service and privacy policies. People don't understand them. They don't read them. You talk about the transparency paradox in terms of you can either on one hand be really robustly specific about everything that's really confusing and no one's going to read it, or you do sort of a high level overview of that transparency. And yet either way, whatever end of the spectrum you fall on, it ends up not protecting privacy. So we have the notice and consent model that we have, but at this point we have no way of actually defining what's appropriate or not based upon what these big tech companies are doing. So I'm looking at this and I'm like, okay, I, I totally buy into Nissenbaum's contextual integrity, but how do we go from where we're at now into something where you can actually have some sort of audit trail to determine whether it's appropriate or not?

[00:20:35.619] Helen Nissenbaum: Yes. Well, okay. So I think that you're merging two ideas and let's disentangle them. The one is the enforcement and the other is what should the rules be? And you rightly point out that with emerging technologies, what is new and what isn't new? So we really should think about what is new and what isn't new. One of the reasons we don't have norms yet for many of these circumstances is that the actors that have emerged into the sphere are new kinds of actors. Now, there was a moment maybe back in a few decades, I don't know how old Google is, that was very cute. You know, Google came about and they said, do no evil. And that was terrific. Now, what is Google? Google was just a big global corporation, nothing exciting. Yeah, they make stuff that's good. And a lot of it's very good and good for people and it's helpful and so forth. But like any corporation, they don't want any constraint, you know, they're single minded, they have to satisfy their shareholders and so forth. So eventually you can say, okay, they're just a corporation, we can bring on board some of those norms, we can't treat them as like kind of cool rebels or something like that, where they get special dispensation to do some naughty things because they're also doing some terrific things as well. No, that's not what they are anymore. So some of the rules that we've created over a while, centuries maybe, not eons, but we now need to import to them as simply as big corporate bullies, which they are, because that's what big corporations are. They don't want anyone to stop them doing what they're doing. Not special about these, they're not worse than any other companies, all of that, so I'm not picking them out. On the other hand, they are producing technology and objects that are different in what they can do. So we have to figure out what the rules should be. There's something hard about that. 
So I like fantasize the Hippocratic Oath back in the day, maybe that profession of a physician was just like flowering. Nobody knew, like, what's a physician? What should the obligations be? What do they do? What should we allow them to do? And so the profession itself came together and said, how do we make sure we do the best job possible and people don't get too annoyed with us and start to develop the norms? We haven't had that yet. Right now, it's just being full speed ahead, because we've had this rather stupid, if I may, rule of notice and choice, because we're married to this idea of control. We haven't had a lot of conversations about, you know, even in the AR, VR environment, like what exactly are the data flows? Who are the people? What is their role in society? Who's benefiting? Who's not benefiting? You know, in the environments in which these AR/VR systems are operating, what are the purposes if we're utilizing it in an educational setting or an entertainment setting or, you know, all the different environments, what are the purposes? And how are these data flows helping to promote them? I think these are kind of, it's new, new, new, but many things stay the same: people, bodies, spirit, you know, the human spirit, and so on. But we have to like, have that conversation.

[00:24:35.632] Kent Bye: Yeah, there's two things that come up. One is the degree of how this technology, does it change some of how even contextual integrity is able to handle some of this? Because in your book, Privacy in Context that you wrote in 2009, you said, you know, even though I'm not being specific about the technology, hopefully this is an abstracted enough approach that's going to be applicable to the future. I think generally that's true. Although I do have some specific questions that maybe we can dive into about what's happening with neurotechnology and XR and virtual augmented reality. But first, before we get into that, I want to maybe elaborate on this whole thing of context, because context is such a key part of your approach. When I saw Dr. Anita Allen speak, she gave the presidential address at the American Philosophical Association in 2019. And she said in this lecture, she said, you know, there's not a comprehensive framework for privacy. It's so confusing and that, you know, your approach is probably the closest that I've seen, but a lot of what I've talked to lots of different people about privacy, time and time again, people will say, well, it all depends on the context. It's all contextually dependent and identities connected to context and privacy is connected to the context. And so you have some, you have some slides here and it says, please don't ask me to define context, but I am going to ask you to say like, how do you make sense of what this context is in the context of contextual integrity?

[00:25:53.891] Helen Nissenbaum: Right, because here's why I said, please don't ask me to define. Because if I'm defining it, then I'm just coming in and creating some kind of artificial object, the way a mathematician might say, I'm defining this function to be such and such, like this is how it operates. Rather, really, I'm not a social scientist, but I'm aware that social philosophers will conceive of society in broad terms. And a lot of the social theorists and social philosophers that I'm reading, they conceive of society not as an undifferentiated space, but as divided into these domains or spheres. And they call it different things. So, Bourdieu was one that I really found valuable, but I struggled with. He talks about fields. And as I say, Michael Walzer talks about spheres. Others talk about domains. But this is a natural concept. It's a way that human analysts have created or discovered in order to characterize social life. So when I was saying, don't ask me to define it, it's because I'm saying, I don't want to commit to Bourdieu's idea, I don't want to commit to Michael Walzer's, I want to say the assumption here is that social life, it's constituted by these different spheres. If you look at the way the law is divided up, then we see, oh, there's commercial law, there's family law, there's whatever. So, these are just points of connection. If you look at the way government is divided, you have the Department of Agriculture, you have the FDA, you have the Health and Human Services. So, we carve society into different spheres. And I'm saying, once you have these spheres, now you're up and running, and you just use this most generic word of context, which has caused no end of confusion, because context sort of, I don't just mean like, look, I'm sitting in a room, this is my context. I mean, social domain, but I didn't want to commit to one theory or another.
So given we have context, there is evidence that people, government, law, divide societies into these contexts, and the contexts are characterized in certain ways. Okay, I'll stop here, but that's where I'm heading.

[00:28:29.316] Kent Bye: Yeah, I just will say in linguistics, there's a concept of pragmatics, which is given the language, different words will mean different things depending on the context. And so I think linguistically, there's different ways that that's been formalized. But the definition that you're giving in this slide, you say that contexts are the differentiated social spheres, which we were just talking about, with important purposes, goals, and values characterized by distinctive ontologies, roles, and practices, such as education, healthcare, and those norms, those information norms. And so there are standards based upon those contexts.

[00:29:00.769] Helen Nissenbaum: Yes, perfect. That's perfect. And the thing that I've come to realize after many years of people asking me questions and challenging me and so on is that now, I believe that the most important defining dimension of a context is its purpose or purposes. So, let's say education, which doesn't mean they're easy. I don't think everybody in society agrees: these are the purposes of education. There's a lot of contestation and some people say it's the three R's and some people say it's citizenship, blah, blah, blah. But we have the purpose and then we have values on the one hand that are more abstract and then less abstract we have function. So, there's certain activities that are important because they're sort of instrumental to promoting the purposes or goals or ends. And it's that which defines. And then everything else is in service of those purposes and ends. So, if you have an educational context that's oriented around certain purposes, now suddenly you have the eruption of the ontologies. Who are the people that we need in order to, so we have teachers and then institutions within the educational context and principals and superintendents and parents of students and students, etc., and you have this whole social construct, and then you have the norms of behavior that all support

[00:30:43.578] Kent Bye: Yeah, after I listened to Dr. Anita Allen say that there wasn't like a comprehensive framework, I suddenly realized that there was such a contextual, well, after talking to many different privacy engineers from SIGGRAPH that this is such a contextual dimension. And then as I started to look at a lot of the ethical issues, I also realized that so many issues of ethics are about the blurring of contexts from one domain of social norms, blending into other social norms. And then that technology in general is all about blurring these different contexts that used to be there. And now you're all adding them together. And so I started to come up with a little bit of a taxonomy of these contexts. And I'm a fan of Gödel's incompleteness, meaning that any sort of formal system is going to be either consistent or complete. So there's either going to be inconsistencies or it's going to be incomplete. So I don't think it's possible philosophically to ever come up with a comprehensive model of context. I think that may be part of the issue, but I just wanted to share briefly this taxonomy that I've been using because there are different aspects of self and identity, biometric data, our finances, our money, our early education and communication, our home and family and private property and entertainment and our hobbies and who we're having sex with, our sexual identities, our medical health information, our partnerships, information around death and philosophy, higher education. There's governments and institutions and friends and community cultures and stuff that's hidden or inaccessible or being in exile. I mean, as I've gone through and mapped out different aspects and ethics, I think as I ask people about the ultimate potential of VR, they usually answer in one of the contexts, they'll say, well, it'll be all about education or all transform health, or it's going to change remote work, or it's going to change the way that we communicate.
It's going to change the way we record memories of our family, or it's going to change our sense of our identity and the way that relates to other people. So I started to come up with this model of context. Then I actually came across your theory of contextual integrity, which is like, oh, wow, this is a hard problem philosophically that hasn't necessarily been well-defined or mapped out. But the advantage at least of me mapping out this taxonomy in this way has shown stuff like when you have these immersive technologies, all of a sudden you have information that would normally be in a communication context, but now all of a sudden it's so intimate with your physiological and biometric data, it's actually able to extrapolate medical information from this technology. So how do you deal with something that's a consumer technology that now has access to information that used to only be available to people working in the context of medicine? Now, Facebook has access to all this information. It's a blurring and a collapse of all these contexts. And in context, you have also virtual reality is all about you being in one context and switching into a different context. In augmented realities, you're in a center of gravity of your existing context, but you're overlaying different contexts on top of it. So context, conceptually, is a huge aspect of both virtual and augmented reality, both in how the technology is functioning, but also I'd say in these information flows of what's appropriate and not appropriate, you start to get into these things where there's a collapse of context, and as we have the emerging technologies, there's a crisis in determining what's appropriate or not. So anyway, I just wanted to kind of share some of that and I'd love to hear some of your.

[00:33:56.794] Helen Nissenbaum: Yeah. I mean, I think that there's some common intuitions. I do want to, let me just get this off my chest. When you bump into people that say, Oh, privacy, it's such a blah, blah, blah. It's true that there are a lot of bad theories of privacy out there. And there are a lot of bad theories of everything out there, justice, freedom, or a lot of theories that disagree with each other as well. So the fact that they draw the conclusion that, oh, it's really a problem. And I think that when I talk about expedience, what I mean is the companies, maybe governments, who capitalize on people running around saying, oh, privacy is such a messy concept. Because as long as we're not converging on some understanding of why privacy is important and what it is, like roughly speaking, they can carry on doing whatever the heck they're doing and not be bothered because everyone's just saying, oh, what a messy concept. So it pains me when people say that. What I also want to say is that if I say no, no, no, it's actually very straightforward. Privacy is appropriate flow of information. End of story. You may disagree with me on how I go from that point onwards, but it feels like there's some unity in that claim, and it really opens the door to a different type of reasoning. Okay, now coming back to your taxonomy, we're not going to have time to go into like the parallels, but it was clear from looking at the taxonomy that at least in some instances, we are seeing whole contexts that are, and I want to say are constitutive because it's not like you go into a room, there's a room. And now we carve the room into different spaces. That's not the way it is. It's that social life is constituted by the context. And the context, there isn't a context like an empty box, and then we throw things into it. The context is constituted by those dimensions of it that we've discussed, the capacities in which people act, information types and so forth. 
So, anyway, but coming back to this idea, is that you too, like Bourdieu, or like the social philosophers, are proposing a certain construct of society and in your mind, this particular taxonomy makes sense, and that's your starting place. Now, okay, coming back to the augmented reality and the virtual reality part. So, in what you're calling context collapse, like I want to unpack that a little bit. I'm only looking at privacy. So I'm not saying that the other part isn't interesting. I'm just saying it's hard enough to grapple with what I'm grappling with. So don't make me say, do anything more. So when you think about your example of, because we already had it, you know, Fitbit and the Apple Watch, we have these systems that are, or even back in the day, I don't know if you're familiar with the Target pregnancy case, although now people say maybe it wasn't exactly accurately described, but what we're saying is that we have a capacity with data. And I, you know, that talk that I gave, I think maybe you saw the slides or something, there are ways in which Facebook, if you're using the goggles and you're walking around a room, can kind of reconstruct a room in your house, let's say. And we could say that we have the Fourth Amendment that says that law enforcement can't enter your house without a warrant. So it's like a pretty high bar. Now you've put these goggles on and you're mapping out your living room. And it's possible that that information is going to a server, a Facebook server or whoever it is. Now we have to reckon with that. I'm going to stop there because I know there's more to say there, but I just want to see whether we're approaching.

[00:38:36.346] Kent Bye: Yeah. I guess the thing that I, with the time we have remaining, I really wanted to get into some of the, you know, you've talked about XR, but I think also my whole question of appropriate flow, here's my concern. My concern is that with these technologies, neurotechnologies, we're going to be able to do things like decode the brain, put on an fNIRS headset in the next five to 10 years, whatever's in your working memory. It's basically going to be able to sort of translate our thoughts into synthesized text that then has all this intimate information that is our eye tracking data, our information that is coming from our body, our behaviors, our actions, our attention, our arousal, our emotions, all this physiological and biometric data fused together with contextually aware AI, which is actually what Facebook calls it, which is from egocentric data capture, creating a model of not only what's happening in the world, but the context in our relationships and what's happening inside of our body. This feels like we're on a roadmap where we're going to be the total information awareness of all of this information that's coming from our body, that Rafael Yuste is coming forward and saying, we need these things as a human right. We need mental privacy as a human right. We need identity and agency and the right to fair access to these technologies and to be free from algorithmic bias. But the three big ones that I want to emphasize here are identity, agency, and mental privacy, and that it may be that we need a human rights framework to be able to protect this because the notice and consent model is maybe not going to be, certainly not going to be able to.

[00:40:05.864] Helen Nissenbaum: No, I don't think there's an either or here. First of all, I don't know where identity and agency, like agency, I want to set those aside a little bit because, you know, these are ethical concepts that probably have a relationship with privacy, but they're very big, you know, agency, freedom, autonomy, they're big in their own right. Privacy is instrumental in promoting agency. So to that extent, but if we want to think about agency itself in light of some of these, you know, future looking technologies, that's a question in itself. And I hope that there are people who are thinking about that, because I think that this is really terrible and terrifying. When we're thinking about mental privacy, and, you know, you should go back to Priscilla Regan's book, which was, I forget when, but anyway, there was a time when people thought that lie detectors were going to work. And, you know, there were all these other psychosomatic ways of figuring out like what people were thinking. And there was some anxiety about psychological, I forget what the exact term was, privacy. So this is, I don't want to belittle it by saying it's not new. I hate those kinds of responses. I'm saying there's been an anxiety about it. Like what's in my head. This example I was giving you about the glasses and then Facebook reconstructing, it's the same sort of thing. We have a room. This is in my house. There were solid walls around it. And this technology is suddenly allowing insight into what we have considered to be the space that only certain people with certain access could have. Now we could make the same case about what's going on in my head, both like knowing my thoughts, but then maybe even affecting my thoughts. I'm not talking about affecting my thoughts because this is like a whole nother issue, related, but another issue. When you say a right, that's fine, but then how are you going to exercise the right? So contextual integrity, I don't care.
You can call privacy a human right, and you can still have the lamest theory of privacy of what it means to exercise the right. The place that contextual integrity plugs in is what does it mean to exercise that right? And I promise you, someone's going to come up with notice and consent as the way to exercise your right to mental privacy. And gosh darn, we have to prevent that. We have to think about who are the actors. And we're not letting ourselves do that because we're just stuck with this theory of privacy that loads all the decision onto the least capable person, which is the data subject.

[00:43:14.073] Kent Bye: Well, yeah, what's happening this week is RightsCon. There's going to be a number of different human rights lawyers. Rafael Yuste's NeuroRights Initiative, which I listed the different rights, maybe taking this to the UN Declaration of Human Rights to maybe add, you know, some of these rights into that. And then even if that happens, though, then it still has to sort of interface with these local laws around privacy.

[00:43:34.118] Helen Nissenbaum: And that's where I start to have more... No, because the law is so broken. So Kent, if you can make one contribution when you go to this event, say to them, how are you going to exercise the right? What does it mean to have a right to mental privacy? And if they can't answer, if they come up with like, you have to explain to people that when they have the sensors stuck in their brain, it can do this and this, and then they have to sign consent and you have to make sure that this consent is informed, wrong answer. That is the wrong answer.

[00:44:14.512] Kent Bye: I think what I've heard at least is that the devices would be normal, but the data may be treated like say medical data. So maybe following existing laws around medical information where you can't just sort of use it for surveillance capitalism, but it would be like more controlled. I don't know if that's a viable solution or not.

[00:44:30.206] Helen Nissenbaum: No, not if you're realistic about the protection for medical data that we currently have, because HIPAA, you know, thank the Lord we have that, is still a law that ultimately reduces to notice and choice. So it's nice that we have it. I mean, I've just got this paper that is very much in a draft form with a couple of colleagues in law, where we're looking at these sectoral regulations. We're saying, yes, we like the fact that we have sectoral regulation, but the current sectoral regulation has huge exceptions, gaps in it. And it's also, at the end of the day, very, very limited by the possibility of people opting out of these rights. And I don't mean just that, oh, stupid people don't know this. It's impossible to know, unless you're like a real specialist and understand specific systems. I want the XR people or these folks who are doing the work, I want them to figure out what the privacy rules should be. I don't want me to have to figure them out, but as long as they don't go notice and choice, don't pass the buck.

[00:45:54.642] Kent Bye: Right. Well, as we're coming up to the end of the hour here, I just want to sort of have maybe a wrap up question, which is, you know, you've given a lecture at the XR Ethics Symposium last year at the Florida Atlantic University. And, you know, you've obviously been in dialogue with the larger community around this contextual integrity theory of privacy and talking to different technologists and, you know, have a whole mathematical formalization, but there's still, you know, work to be done. And also as we move forward into potentially having it more formalized and reform of US federal privacy law. I mean, there's lots of different debates and discussions, but for you, what's next in terms of the work that you've been doing with the contextual integrity theory of privacy, and you've been engaging with some of the XR community. I don't know if XR is making you have any new revelations or insights or maybe not, but I'm just curious what's next for you in terms of as we move forward with all this stuff and your work with contextual integrity.

[00:46:46.086] Helen Nissenbaum: Yeah. I mean, the XR stuff, I was interested to engage with the folks in that community, even though I'm really not expert in it, and I was happy to learn, is that information, you know, digital media platforms create a world for us. And this is what I see in common with augmented virtual realities and so forth. They create a world for us. And if you take just art, I go to an art gallery and I see the world that's interpreted by the artists, but this technology is so close to me that I think that what I'm viewing is the world. And people lose a sense that the world that they're seeing through Amazon, through Netflix, through Google, through Facebook is the world as constructed by those companies. And I'm just naming the big names, but I'm sure there are other names in different spheres. You take that reality as reality. And you don't realize that that is a constructed reality. Sometimes that constructed reality, often that constructed reality is constructed in a specific way that's expedient. Netflix wants you to watch the next episode because the more you watch the better for them. I mean, I don't know. Amazon wants you to engage with them in a certain way. So these are very expedient and they're not necessarily in anybody's interest. They just want you to stay glued to your screen for as long as possible. You know, this is not a new observation. The data they're collecting about you in this kind of feedback loop that they're then utilizing again, this is where I've become interested in this work in manipulation to say, yeah, these general things called dark patterns, we know about behavioral economics and how they utilize this human bounded rationality. But in addition to that, I know a lot about Helen Nissenbaum or Kent Bye, and I'm going to make his world so real to him by interjecting that stuff. And that's where I think the privacy story marries some of that, the world presented you by these technical systems.
And I don't think we've begun to scratch at the surface of how problematic that can be because they're not created by your friend or your mother or your child, or they're created by these other entities that have really very vastly different interests for you.

[00:49:34.224] Kent Bye: Awesome. Is there, is there anything else that's left unsaid that you'd like to say to the immersive community?

[00:49:39.330] Helen Nissenbaum: I mean, the immersive, I don't know that privacy is the main story for the immersive community. Privacy is one aspect of it. For me, the question for the immersive community is how do you maintain a grip on that layer between you and the world that begins to feel real to you and yet it's constructed so that you can be critical of what the construct is, what purposes and whose intentions and whose interests are being served by that layer that these immersive technologies interject between you and what's beyond them.

[00:50:24.027] Kent Bye: Awesome. Well, thanks again for joining me here on the podcast and for talking about this. It's been a real honor. And I think there's these active debates right now, like the neuro rights movements and human rights and contextual integrity and trying to expand notice and consent or do something different. So I think these technologies are catalyzing these conversations and yeah, it's an ongoing discussion. So I'm very pleased to be able to get your perspective on it.

[00:50:48.656] Helen Nissenbaum: Yeah. And you know what question to ask those folks, right?

[00:50:52.672] Kent Bye: Yeah. How do you exercise your right?

[00:50:56.278] Helen Nissenbaum: You learn fast. All right. Thanks, Kent. Good luck. Enjoy yourself there.

[00:51:02.513] Kent Bye: So that was Helen Nissenbaum. She's a professor at Cornell Tech, where she runs the Digital Life Initiative, and she's also the founder of the Contextual Integrity Theory of Privacy. So I have a number of different takeaways about this interview. First of all, well, I'm just really glad to be able to get a primer and overview of contextual integrity, because I really, really do think that this is probably the most comprehensive definition of privacy that I've seen. It just makes intuitive sense. And it's simple. You know, it's like privacy is the appropriate flow of information that's dependent upon the context. Now, what is the context in these emerging norms? And how do we define what appropriate flow is within these emerging technologies? That's still up for debate. But I think that it's at least reflecting how these norms evolve over long periods of time, and what we see as appropriate or not appropriate, and how people will have that kind of like these which is a technical term of a freak-out when it comes to these technology systems, and then people feeling either fearful or surprised, and that people were labeling it as privacy. There's this mindset that I think we've come up with since the 1973 Fair Information Practice Principles, which I think has been a lot of the foundation of our privacy laws, is that we think of privacy as this controlling of that information, a static thing that we own and it shouldn't go anywhere. But I think this is a little bit of a paradigm shift and saying, no, actually, information wants to be flowing in between different people because it serves specific functions. And there's ways in which that information given into the right context is totally appropriate. And so you can't just have these blanket statements saying they're going to just stop this flow of information in all contexts. There is still this aspect of control within the system, which is the transmission principle.
Is it through consent? Is it coercion? Is it through these adhesion contracts, which is some sort of form of coercion that's a little bit different than just informed consent? There's still an aspect of the user being able to have some way in which this information is being transmitted, but it's the appropriate flow of information. There's the context, which is defined by these social philosophers and theorists, like Bourdieu talking about field theory, or Walzer talking about spheres, or there's the domains of human experience. And so these are different ways in which we differentiate society. So she defines in one of the lectures that she gave, she has a slide that says, contexts are these differentiated social spheres that are defined by important purposes, goals, and values, and then characterized by distinctive ontologies, roles, and practices, like healthcare, education, and family. And then they have these norms, these contextual information norms that are either implicit or explicit rules of information flow. So again, there's these spheres that we're breaking up society into different categories. You can look at law, you can look at government, you can look at the industry verticals when it comes to XR. So there's different domains of these human experience. For me, when I ask people the ultimate potential of VR, they usually answer into one of these domains. And she said one of the most important things about these different domains is that there's very specific purposes, goals, and values of how you start to define what these contexts are. So that's the context. And then there's the five parameters that she's talked about. You know, there's three actors, which is kind of like when you're sending an email, you have the subject. So what the intention of that data is, and then you have the subject and the sender.
So you have this exchange that is happening between two entities, and then you have the information type, which is everything from the movement data, the physiological data, your input, your voice data, all the data that you're putting into the system. It could be things that are personally identifiable, like your name or your social security number or your phone number, or it could be things that you're kind of radiating off from your body. And then there's the transmission principle, which is the way in which that is transmitted. That could be everything from consent, coercion, compelling, stealing, buying, selling. There's sharing in confidence, giving it with notice, with a warrant, or there's some sort of reciprocity. So there's all sorts of different types of transmission principles there. And that's, again, where it comes into this ability to have that choice or that consent. In one of her talks, she actually adds a sixth, which is the use. What's the context of how it's used? And I would maybe put ownership in there, because between the subject and the sender, who owns that data is not always known either. And so that's yet another question: if we have this model of owning your own data, then is there some sort of ownership there? Or does the information flow mean that it's a shared ownership across this network of different people? There are some laws, like GDPR, that allow you to revoke access in different ways. If you do have the ability to revoke access, then I think there could be additional things that would need to be included in here, such as whether there is an ability to veto or revoke access to some of this information. Actually, another aspect that may not be included in here is the computed data or the biometric psychographic data: being able to take all these individual data streams and then extrapolate certain aspects of your personality or your character or the context that you may be in.
The Information Technology and Innovation Foundation calls it computed data. Brittan Heller, a human rights lawyer, calls it biometric psychography. There's all sorts of biometrically inferred data, which I think Helen Nissenbaum has talked about before, but I'm not sure if that's included in this information type because it's really kind of extrapolated based upon information that you're aggregating. So it's like this aggregate data that you're collecting, and then you're able to extrapolate all sorts of different inferences and meaning from that. How does that fit in? Do you own those inferences? Or because these companies have aggregated all this information, do they own those inferences? There's a lot of things that I think are open questions that I still have in terms of this contextual integrity theory and how it handles some of this computed data or biometric psychographic data. And then finally, there's the appropriate flow of information, as to whether or not it's legitimate, worth defending, and morally justifiable. So again, I think as we look at whether things are appropriate, there are some ways in which, with immersive technologies, you are exchanging your information in order to actually have the technology work. But are you also consenting to have that data be put into this big database that is tracking your attention and trying to extrapolate certain things about what's happening in your context, what your interests are, and all this psychographic data that is going to be used for advertising? Is that morally justifiable? Because with that business model, to make the technology affordable, you're mortgaging different aspects of your privacy in order to get access to the technology. You're giving something away over time to get immediate access to something, but you may not know that you're having this kind of exchange. Does that make it morally justifiable, this type of exchange?
Having the way that we're being tracked in these technologies be part of this type of value exchange for a cheaper technology? So I think that's sort of the dilemma here, trying to really identify some of these different contextual norms. And Helen's talking about how a lot of times, like with physicians, these kinds of norms evolve over many decades and centuries and millennia, where you have the Hippocratic Oath and you have a lot of expectations for the confidentiality that you have when you interact with a doctor. Now, all of a sudden, you have these corporations with networked technology that have all sorts of privileged information that we aren't even fully aware of, and there's this power asymmetry. She's calling them bullies in a lot of ways, because they have these adhesion contracts that are asymmetrical, we don't really have much choice, and they're doing what they want with no oversight or accountability. I think she's asking these basic questions like, what are the data flows? Who are the people? What is their role in society? Who is benefiting? Who is not benefiting? What are the purposes? How are these data flows helping to promote those purposes? I think those are some basic questions here, and when you get down to it, it all comes down to the information flows. Some of the things that are happening within XR technologies is that you have these adhesion contracts that are very broadly and loosely defined in terms of what they allow the companies to do for all the different purposes and contexts. You read the privacy policy and some of the terms of service, but it's pretty vague. It just allows them access. It's really more about the transmission principle of consent. It's less about the context and what they're actually doing with it, because they'll be defining the boundaries of what they will and will not do in these blog posts that are completely separate.
They're saying, don't worry, we're not going to be using movement information for any advertising purposes currently. Those are our current plans. And then at the same time, everything that's in the terms of service is allowing them to do just that. So it's not really preventing them from doing it. It's just saying, this is the context we're trying to establish. And they're trying to, you know, live into this principle of not surprising people by informing people. But yeah, they're also tipping themselves off, saying, you know, this may change in the future. So in trying to determine the appropriate flows and what the full context is, there's not a lot of transparency and there's no accountability. There's no way you could verify the actual context in which some of this data is being used, whether it's being used to train artificial intelligence or to create these psychographic profiles of us for advertising. So there's just not a lot of transparency. So a few more points. You know, I think there is this shift from really thinking about privacy as this static concrete thing that you're controlling to seeing it as more of a process-relational thing, where it's information exchanged within a network of people within a certain context. And so, given that, she's saying that it's constitutive. It's not like an empty box. It's not like a room that we're in. Social life is constituted by the context. And that context is constituted by the dimensions of the capacities in which people act and these different information types. And so, in other words, there's these different actors and these relational dynamics of having these information flows, and the context and the purposes under which that information is being exchanged. And it's not like the actual physical space.
It's not a concrete static object. It's actually more of this relational dynamic, where it's more about the relationships between the stakeholders and the contextual information norms of how information flows in order to achieve specific goals within these networks of people. So I think that's a bit of a paradigm shift in really thinking about those stakeholders. Who are these people? What are they trying to do? And really trying to define all of these different aspects within the framework of contextual integrity. And if those five parameters aren't being met, then it's not really living into contextual integrity for privacy. I was able to share with Helen Nissenbaum my taxonomy of context, and that's helped me at least map out different ethical dimensions. She says, you know, there's lots of different social theories, like Bourdieu, who talks about fields, and Walzer, who talks about spheres. In some ways, this is a social theory to help navigate some of these different aspects within the context of XR. From that, I'm able to say, well, there seems to be all these different contexts collapsing. Things that used to be only in the medical context are now all of a sudden in the consumer context, blending one into the other across these different contexts. So I do see that there are existing information norms that we used to have. Technology, by its very nature, is dissolving some of those boundaries, and we have to kind of reestablish them in some ways through our informational norms that are dictating what is or is not okay. And then, if we need to be able to start to formalize some of these different things within our laws from our government, I think there is some utility in starting to look at some sort of taxonomy, because you have to map over some of these things if you are going to translate them into laws and start to try to formalize some of these informational exchange norms.
I think that's the challenge of a federal privacy law: you have to do this translation from all these different contexts and detail all these different information norms, or find some way to abstract it, as Helen Nissenbaum has done, and then let the interpretation of that fall into the enforcement, like how do you actually enforce or verify some of these things. And so I think that was one of the things that she was also trying to emphasize, that there's a whole aspect of enforcement and how you actually enforce and ensure that you're living into the ideals of contextual integrity. Then there's this whole approach of rights and our human rights. If you have bad law that has bad definitions of privacy, then you're not going to really have much of an enforcement mechanism to ensure that you're able to exercise your rights to privacy. I think she's in some ways resistant to privacy as a human right in the absence of trying to take some sort of contextual integrity approach. If you don't, then you end up in some sort of notice and consent model, which she sees as being stuck with a theory of privacy that loads all of the decisions onto the least capable person, which is the data subject. And I think notice and consent is broken. I think there's wide-ranging consensus around that. It's just not working in terms of protecting our privacy. And she's cautioning that if you just go through treating privacy as a human right without having a good underlying definition that is reflected in the ways in which it's enforced within the law, then you're still going to run into some of these same issues. And I think she's on to something there.
You really have to kind of translate some of these principles into the state and local and federal laws. Otherwise, if you go to the sort of high level of a human right, you know, you can say you have a human right to privacy, but then if you're still able to hand over that right through consent, then you have nowhere to go, because basically the transmission principle of consent sort of negates a lot of these other discussions. Declaring it a human right may actually help force a change in those laws. But at the end of the day, it does seem like you still have to change the regional laws in order for it to be enforced in any reasonable fashion. And yeah, just this final thought: as we have these companies that are constructing these realities, what are the rhetorical strategies, the intentions, and the purposes behind them? It's about having a media literacy around world building and immersive experiential design, to be able to connect what we're seeing in the world and what we're feeling, and to be able to deconstruct the rhetorical or intentional strategy for how these worlds are being constructed. So being aware of that and then being able to deconstruct it, I think, is a key part of what she's also cautioning us about as we move forward. So, that's all that I have for today, and I just wanted to thank you for listening to the Voices of VR podcast. If you enjoy the podcast, then please do spread the word, tell your friends, and consider becoming a member of the Patreon. This is a listener-supported podcast, and I do rely upon donations from people like yourself in order to continue to bring you this coverage. So, you can become a member and donate today at patreon.com/voicesofvr. Thanks for listening.