Here’s my interview with Keiichi Matsuda, Designer and Director of Liquid City, that was conducted on Thursday, June 12, 2025 at Augmented World Expo in Long Beach, CA. In the introduction, I read through Matsuda’s essay titled “Gods” (also uploaded here) where he explores the idea that AI should be more like pets and polytheistic and animistic familiars rather than the more monotheistic approach where there’s one true AI God represented by one of Big Tech’s omniscient and all-powerful AI systems. This approach has led Matsuda to develop a system of what he calls “parabrains,” an interface for AI agents that goes beyond the narrative scripting capabilities that he was exploring in inworld.ai with his project MeetWol, which I covered previously at AWE 2023. A lot of Matsuda’s ideas were also explored in the speculative fiction short film called Agents that was produced in collaboration with what was at the time Niantic Labs and is now Niantic Spatial (check out my interview with Niantic Spatial at AWE 2025 for more on how they’re using Matsuda’s Parabrains system). And you can also see more context in the rough transcript below.

This is episode #41 of 41 of my AWE Past and Present series totaling 24.5 hours. You can see a list of all of the interviews down below:

- #1590: AWE Past and Present: Ori Inbar on the Founding of Augmented World Expo to Cultivate the XR Community (2023)

- #1591: Sonya Haskins’ Journey to Head of Programming at Augmented World Expo (2023)

- #1592: Highlights of AWE 2025 from Head of Programming Sonya Haskins (2025)

- #1593: From Military to Enterprise VR Training with Mass Virtual on Spatial Learning (2025)

- #1594: Part 1: Rylan Pozniak-Daniels’ Journey into XR Development (2019)

- #1595: Part 2: Rylan Pozniak-Daniels’ Journey into XR Development (2025)

- #1596: Engage XR’s Virtual Concert as Experiential Advertising for their Immersive Learning Platform (2023)

- #1597: Educator Vasilisa Glauser on Using VR for Twice Exceptional Students (2025)

- #1598: Part 1: Immersive Data Visualization with BadVR’s Suzanne Borders (2018)

- #1599: Part 2: Immersive Data Visualization with BadVR’s Suzanne Borders (2021)

- #1600: Part 1: Jason Marsh on Telling Data Stories with Flow Immersive (2018)

- #1601: Part 2: Jason Marsh on Telling Data Stories with Flow Immersive (2019)

- #1602: Part 3: Jason Marsh on Telling Data Stories with Flow Immersive (2025)

- #1603: Spatial Analytics with Cognitive3D’s Tony Bevilacqua (2023)

- #1604: Investing in Female Founders with WXR Fund’s Amy LaMeyer + Immersive Music Highlights (2019)

- #1605: Rapid Prototyping in VR with ShapesXR + 2021 Launch with CEO Inga Petryaevskaya (2021)

- #1606: Weekly Meetups in VR with XR Women Founder Karen Alexander (2025)

- #1607: 2023 XR Women Innovation Award Winner Deirdre V. Lyons on Immersive Theater (2025)

- #1608: AWE Hall of Famer Brenda Laurel on “Computers as Theater” Book, Ethics, and VR for Ecological Thinking (2019)

- #1609: Framework for Personalized, Responsive XR Stories with Narrative Futurist Joshua Rubin (2025)

- #1610: Scouting XR & AI Infrastructure Trends with Nokia’s Leslie Shannon (2025)

- #1611: Socratic Debate on Future of AI & XR from AWE Panel (2025)

- #1612: AWE Hall of Famer Gregory Panos’ Journey into VR: Identity, Body Capture, and Virtual Immortalization (2025)

- #1613: VR Content Creator Matteo311 on the State of VR Gaming (2025)

- #1614: Story Behind “Escape Artist” 2024 Polys WebXR Awards Winner (2024)

- #1615: Viverse’s WebXR Publishing Strategy with James C. Kane & “In Tirol” Game (2025)

- #1616: Founding Story of Two Bit Circus Micro-Amusement Park with Brent Bushnell & Eric Gradman (2018)

- #1617: Dream Park: Using MR in Public Spaces to Create Downloadable Theme Parks with Brent Bushnell & Aidan Wolf (2025)

- #1618: Producing Live Sports for Cosm’s Immersive Dome with Ryan Cole (2025)

- #1619: Deploying Snap Spectacles in Verse Immersive AR LBE with Enklu’s Ray Kallmeyer (2025)

- #1620: Snap’s Head of Hardware Scott Myers on Spectacles Announcements & Ecosystem Update (2025)

- #1621: Karl Guttag’s Technical Deep-Dive and Analysis of Consumer XR Displays and LCoS (2023)

- #1622: Qualcomm’s 2023 AWE Announcements for Snapdragon Spaces Ecosystem (2023)

- #1623: Qualcomm’s 2025 AWE Announcements and Android XR Partnerships with Ziad Asghar (2025)

- #1624: Tom Emrich’s State of AR in 2018 (2018)

- #1625: Tom Emrich’s “The Next Dimension” Book on AR for Marketing & Business Growth (2018)

- #1626: New Spatial Entities OpenXR Extension to Scan, Detect, & Track Planes with Khronos Group President Neil Trevett (2025)

- #1627: Part 1: Caitlin Krause on Bringing Mindfulness Practices into VR (2019)

- #1628: Part 2: Caitlin Krause on “Digital Wellbeing” Theory and Practice with XR & AI (2025)

- #1629: Niantic Spatial is Building an AI-Powered Map with Snap for AR Glasses & AI Agents (2025)

- #1630: Keiichi Matsuda on Metaphors for AI Agents in XR User Experience: From Omniscient Gods to Animistic Familiars (2025)

This is a listener-supported podcast through the Voices of VR Patreon.

Music: Fatality


Rough Transcript

[00:00:05.458] Kent Bye: The Voices of VR Podcast. Hello, my name is Kent Bye, and welcome to the Voices of VR Podcast. It's a podcast that looks at the structures and forms of immersive storytelling and the future of spatial computing. You can support the podcast at patreon.com. So this is the final episode in my 41-episode series of AWE Past and Present, which, by the way, has been quite an epic series to produce. And it's been fun to go back and draw connections and dig into my archive and have new interviews to update and create this exploration of some of these different topics. I feel like there's a bit of dissolving different aspects of space and time and going away from just chronological thinking about time and thinking about time in terms of these recurrence patterns of going back in time and dipping in. Pulling in all this series, it's been interesting the way that it's connected, but hopefully it's been able to give you a sense of where things are at now in the industry and where they might be going here in the future. And I wanted to end with this conversation with Keiichi Matsuda, who I see as someone who's thinking very philosophically around the medium. He's also very pragmatic in the ways that he's thinking around the user interface designs, but also looking at the modes of speculative fiction and his piece Hyperreality that was kind of these cautionary tales that are asking us to think around the deeper ethical and moral implications of the technologies that we're building. Are we building towards this kind of hyperreality world where everything is going to be augmented and it's flashy and there's a spectacle everywhere and it's all being driven by capitalism and consumerism and disconnection and surveillance and control? So that's kind of like the dystopic vision that he was painting out in Hyperreality. And again, there's some people that look at that and like they feel inspired and they actually want to go and build it out.
And so it's always fascinating for me to see whatever the latest demos are that Keiichi is building. And so he is kind of on this idea around AI agents and moving away from AI agents as like these omnipresent, omniscient, monotheistic gods that are all-knowing, all-powerful, and more of like "be as smart as a puppy" is the phrase that he uses within the context of this conversation. And so much more like these animal familiars or these animistic spirits that are infused into the world and that they're helpers and that you can have like these relationships to them and that it's more of like the way that you're engaging in this small community of these animistic spirits that you're able to engage with and have them be conduits of your own agency, but that it's different than our existing mode of thinking around like click this button and take this action and more of like you're sending out prompts or orders and then having these agents, you know, act on your behalf. And so he's thinking very deeply around like, what do all these implications mean? And how does he start to actually implement this kind of vision that he wants to have? So I feel like Keiichi is a really perfect person to end this series because I think he's bringing back the heart of, you know, still trying to find out, like, how can we preserve our sense of agency and autonomy with these different types of AI models? Can there be open source models? Can we maintain control over these things? Or are we going to be living into a world where all these major corporations are controlling everything that we're doing with these technologies? So I'm going to read the entirety of this essay that Keiichi Matsuda wrote. It's called Gods, where he's explaining these monotheistic versus polytheistic metaphors for understanding, kind of like the monolithic AIs that are coming from big companies and big tech versus the more polytheistic or animistic or decentralized approach for AIs that we have much more control over.
So it's called Gods by Keiichi Matsuda, and there's a number 190328. I'm not sure if that's March 28th, 2019. It's unclear as to when exactly this came out. But he says, the age of computing is being characterized by technology leaking out into our physical world. It is becoming infused in our cities, our institutions, our products, our culture. As our lives are increasingly mediated through technology, it is the interface that will shape the way we understand reality. KamiOS is a powerful and magical new way of interacting with the world around us. It is built to reframe our relationship with technology, focusing on transparency, privacy, and trust to give agency back to the people. It is built to work with today's emerging technologies, giving us intuitive ways of harnessing 5G, AI, IoT, cloud, and wearable tech, to name a few. The interface has roots in our oldest and most primitive ways of understanding the world around us. Gods. KamiOS channels the spirit world using AR. When you put on your headset, you will be introduced to many different gods who will guide you through your virtual and physical life. Gods of navigation, communication, commerce. Gods who teach you, gods who learn from you, gods who make their home in particular objects or places, and gods who accompany you on your journey. There are no buttons or menus in KamiOS. The gods may hear your words, but can also learn about your routines, your connection to others, the way you spend your time. You can ask them for things, but they will be active even without your input. Your interaction with them is social, based on a contextual understanding of the world around you. Today, the big tech companies are represented on this earth by supposedly all-powerful prophets, Siri, Alexa, Cortana, Google Assistant. Joining one of these almighty powerful ecosystems requires sacrifice and blind faith. You must agree to the terms and conditions, the arcane privacy policy.
You submit your most intimate searches, friendships, memories. From then on, you must pray that your God is a benevolent one. The big tech companies are monotheistic belief systems, each competing to be the one true God. KamiOS is different. It is based on pagan animism, where there are many thousands of gods who live in everything. You will form tight and productive relationships with some. But if a malevolent spirit living in your refrigerator proves untrustworthy or inefficient, you can banish it and replace it with another. Some gods serve corporate interests, some can be purchased from developers, others you might train yourself. Over time, you choose a trusted circle of gods, who you may also allow to communicate with and manage one another. The spirit world is accessible through a growing number of devices. This world will envelop our physical cities, and gods will come to be our connection to our appliances, our institutions, our applications, and much more. These gods will operate machinery, curate and play music, drive cars. Their influence will be increasingly felt in the physical world. You will live and work in a community of both physical and virtual beings. Access to this world and to this community is a true product vision for AR. Unlike today's virtual assistants, the gods of KamiOS are not all-knowing or all-powerful. They are limited, fallible, and have different agendas and loyalties. There is no pretense that these gods can answer any question that you ask of them. Often they will only be able to answer one or two questions, but they also have extraordinary abilities that go far beyond virtual assistants and conversational interfaces. As social animals, we will quickly come to know the extents of their abilities, and this will lead to better interactions that lean on our human intelligence. This is a radical vision for a true step change in interaction. It is not intended to replace mobile or desktop functionality like for like.
It is about creating a new mythology that opens up the imagination and brings new opportunity. It is built around a decentralized, open infrastructure which protects and empowers people to create. It carries with it new business models, inherently, quote, social, embedded in the physical world around us. It is natural and easy to use, leveraging our social instincts to give sophisticated control over our data. Most importantly, it uses our ancient systems of belief, ritual, and community to create a better, more human relationship with technology. And signed by KM, i.e. Keiichi Matsuda. So I just wanted to read that whole essay because it's really driving Keiichi's thinking around continuing to build out Wisp, and he's really putting this plan into action. You really hear this kind of vision that he put forth, this blueprint that he's mapped out, and he's really trying to embody this and create a vision where you actually have the power rather than giving the power over to these big companies. So we're covering all that and more on today's episode of the Voices of VR podcast. So this interview with Keiichi Matsuda happened on Thursday, June 12th, 2025 at Augmented World Expo in Long Beach, California. So with that, let's go ahead and dive right in.

[00:08:07.199] Keiichi Matsuda: Hi, my name is Keiichi Matsuda. I'm a designer and director of a small prototyping studio in London called Liquid City. And we work at the forefront of interaction, trying to figure out how are we going to interact with computers in the future? How is that going to affect our lives and society? And what kinds of value can we have from computers? We're also trying to consider the negative impact, potentially, of technology and how to chart a positive future through the potential pitfalls.

Kent Bye: Maybe you could give a bit more context as to your background and your journey into the space.

Keiichi Matsuda: Sure. Yeah, my background's in architecture, and I was very interested in the possibilities of new types of environment. I started making short films about the possibilities of how technology could interact with us in our society. The most famous one is probably called Hyperreality, and that one depicted a kind of view of the future where a character walks through a city and experiences completely immersive technology, all-pervasive technology, and yeah, it talks about her life through that. After that, I was lucky enough to work for Leap Motion as their VP of design, and then Microsoft leading experience on their consumer headset with Samsung, which never made it out, but that wasn't my fault. And since then, I've been developing projects that try and push forward the possibilities of interaction within the space.

[00:09:25.455] Kent Bye: Great, and so we're here at Augmented World Expo, and so there's a number of new experiences that you're showing here. Also, going back to Apple Vision Pro, you also have an experience with Wisp. Maybe we can start with Wisp, because it feels like you're kind of expanding on that, but also expanding out into other VPS technologies, into time and space. Take me back to Wisp and this project that you're creating on the Apple Vision Pro. I think the last time we talked was at Augmented World Expo a couple of years ago where you were showing MeetWol, which was interacting with these virtual characters with inworld.ai. And so, yeah, it seems like the spiritual successor to that project where you're interacting with a virtual character in a narrative context. So, yeah, I'd just love to hear you kind of set the context for your piece on Wisp.

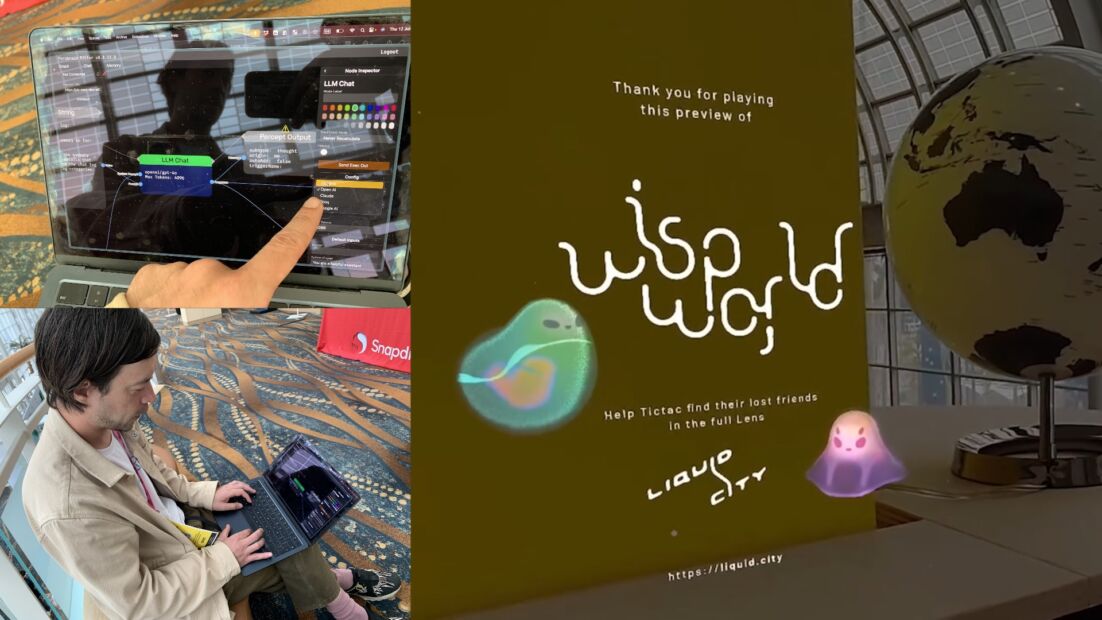

[00:10:08.160] Keiichi Matsuda: Yeah, 100%. When I was working at Leap Motion, we were trying to think about how we could interact with computers, not through pressing buttons or controllers or things like that, but more naturally through your interaction with your hands or with your voice or your eyes, or just trying to take in the broader context, wanting to get past a stage of thinking about XR that it's going to be flat panels with buttons on them that you kind of push or pinch and to something more natural like I'm talking to you right now. So obviously got very excited about the possibilities of AI and being able to drive experiences through these agents that can live in the world around you and you could be able to see. So in 2022 we made a short film with Niantic called Agents which depicted a world where there are small characters living in the world around you. Some of them are haunting locations. Some of them are following you around like familiars. And they're basically an evolution of the way that we think about apps today, not as appliances, but as characters, as kind of people in a way. And since then, we realized that actually now we can build all of this stuff. So Wol was probably our first agent that we built. It was an owl that you could talk to and would tell you all about the forest. We wanted to give it a very particular perspective, not being an AI that can do everything and tries to be everywhere all at once but one that has a very unique perspective and a unique goal as well, in this case to teach you. Wisp was a successor to that in terms of being a companion that sits on your desk on Vision Pro while you're doing other things and has a long-term memory, and we were very interested in the idea that you could build a relationship with an agent over time. Wisp World, our new experience on Snap Spectacles, is the same IP, but it's a completely new experience, this time thinking about the possibilities of gaming using XR and AI together.
Specifically, AR allows you to be able to bring in a lot of context from the world around you. The things that are in your room, the time of day, who you are as a person, what you're talking about, what your interests are. And that provides a really rich environment to be able to create new types of experience. So Wisp World is a tiny RPG in your world where you meet these tiny fish-like characters called Wisps that all have different problems that you can help them to solve. The first Wisp you meet, Tic Tac, is looking for a new home. So you have to go around in your own home and scan different objects in your house, and the Wisp will then be able to decide if it likes that as a home or if it wants something else. So we're trying to think about the possibilities of gaming, and everyone talks about how gaming is going to be such a big use case for AR, but we really wanted to prove out, well, what is that? What does a game loop actually look like?

[00:12:48.260] Kent Bye: Yeah, and the unfortunate thing is that as we were doing the demo, I think the entire internet went down or something like that?

[00:12:53.486] Keiichi Matsuda: Yes. AWS, GCP, Cloudflare. It seems like over maybe the whole world, the internet is in trouble. So it was a very unfortunate time to be presenting our demo to the great Kent Bye.

[00:13:06.079] Kent Bye: So, yeah, I mean, I've been thinking a lot around offline, online, like how much are we relying upon online services. I deliberately don't have data on my phone just to test that a little bit, but also it's just $3 a month versus $50 a month. But we did run into that challenge of not having responses. Aside from that, there were some fallbacks where the Wisp was kind of going in a circle. And it didn't completely break, but it did stop responding to the queries that I had. But in your piece, you were talking around, from Leap Motion, you want to try to get away from pushing buttons or using screens and having these natural interfaces. So with the Wisp, you're able to grab underneath it to pinch to be able to speak. Also, you kind of have your, you know, using your hands as a framing device. So most people think around like film directors who kind of create a square to be able to focus in and look at the framing. You can also do the same thing, but creating a triangle to focus in on specific objects. And so kind of moving into these more gestural based interactions that feel more natural and intuitive, not controller based, but also a way that you can start to interface with the world with, of course, also your voice and conversational interfaces. Just love to hear some of your reflections on these new paradigms of human-computer interaction that you feel like this XR field is starting to develop, work that you've started with Leap Motion but are continuing throughout the course of your projects.

[00:14:21.280] Keiichi Matsuda: Yeah, I mean, there's a more sort of fundamental thing around the difference in using agents as a way of interacting with the world versus operating a machine, operating a device. And I think the mental model for how we think about computers, phones, applications, it's very much around having this kind of machine that you can operate, an appliance. But now we're moving to a world where we can interact with these agents, whether they're visualized in XR or they're on a website or maybe even you can just text message them or whatever it is, where you're no longer operating a device. You're actually having a relationship with a sort of virtual character that can then help you do various different things. So yeah, I feel like there's a big fundamental shift happening where our interaction with devices is actually much less important than interaction with agents. So the interactions that you see in Wisp World, you know, pinching, holding your hands up to scan, those things I actually think are also stopgaps for now to help us to be able to overcome some of the device limitations. But in the future, I can imagine that interacting with computers is as natural as just interacting with other people.

[00:15:27.281] Kent Bye: Yeah, I remember there's a number of years ago where you had written this essay talking around animism and Japanese philosophy and how by working with these agents that there's kind of an imbuing of a spirit or almost like relating to the world as if it were enchanted in a way or entering into a magic circle of a participatory universe where you're engaging with the cosmos that is alive and breathing but manifests through these AI agents where you're able to also develop these relationships on more of a kind of a virtual context with these beings that are agents that can be capturing our intentions and our desires and our agency to then act on our behalf or to engage with the world and come back and present that information as if it was a person or another entity that is in this kind of more animistic spirit. So I'd love to hear some of your thinking around these principles of animistic philosophy or Japanese philosophy and how you see that being more tied into the future of technology.

[00:16:20.100] Keiichi Matsuda: Yeah, it all started because I was just frustrated with voice assistants, you know, that they present themselves as being completely omniscient and omnipotent. They kind of claim that you can ask anything and it will be able to do it. And it sets us up for failure, really, that it's going to be dissatisfying no matter what you do, because it will never be able to reach that expectation. So we took on a design principle, I suppose, that we stole from a fantastic design practice, Berg, that went out of business in 2010, so I think it's okay to steal it from them. It's called BASAP, be as smart as a puppy. And rather than trying to replicate human behavior or try to present it as something that's like a godlike thing, we start to come from a much lower expectation of what it can do. And we found as soon as we do that, it's much easier for the user to be able to construct a theory of mind or mental model for what that agent is able to do. And it's much more satisfying and enjoyable as an experience through positive reinforcement rather than learning in a negative way what the limitations of the device are. So I think from that point I was thinking, you know, rather than having this idea of this kind of god-like being that's come down from its parent organization, whether it's Siri from Apple or Alexa from Amazon or whatever it is, we could imagine that these agents are far more numerous. Rather than being, you know, the single monotheistic idea of a single god, we actually have something that's about spirits in the world around us, hauntings, location-based agents, familiars that might follow you around. And for me as a designer, I'm always trying to think about mental models, right? How can people understand how they're supposed to act with something without us having to create something entirely new in their head? 
And to me, one of the most sort of compelling mental models for that is our connection to this idea of a ghost world, a spirit world, where we can commune with these agents around us who have different purposes, right? The god of love or the god of transport or, you know, the god of business networking or whatever it is that you want to do. And all of these agents can coexist within a world together and create this kind of social shared reality that could replace the way that we think about mobile or desktop OSes today.

[00:18:27.799] Kent Bye: Yeah, I'd love to hear some of your reflections and critiques of some of the primary metaphors that we see in the world today around the technology. We have a lot of talk around artificial general intelligence or super intelligence, these purely speculative ideas that almost are treating AI as a god that is like this omniscient, super powerful entity that we may be worshipping or seeing that it's much more powerful than humans, and so we end up surrendering our sovereignty to them. And so, yeah, I'd love to hear some of your reflections on this current moment, but also, you know, looking back at your speculative fiction piece that you did with Niantic a number of years ago, and maybe trying to go from that monotheistic approach to a polytheistic approach that is much more on the same level rather than a power hierarchy where it does invite us to surrender our sovereignty to these entities.

[00:19:12.796] Keiichi Matsuda: Yeah, I think especially with the hype around AI and also the backlash around AI, it's very tempting to put yourself in a position where you're for or against it. But I don't consider those to be very helpful positions because really, whenever we've domesticated a new technology and brought it into our world, we've done it on a conditional basis, right? We've set parameters for how that technology should work. We're sitting in a building now which is made of glass and steel, which are dangerous technologies. We use electricity, which is a dangerous technology, and used in the wrong way, it can be very, very harmful. But over time, we've managed to bring them into our world in a way that amplifies the positive and mitigates the harms. I don't think AI is different to that, and I do think that design has a really big role to play in thinking about how we conceptualize and frame those technologies. So rather than thinking about is AI good, is AI bad, try to think about ways in which we could bring it into our world in a way that reduces those harms and amplifies the positive benefits.

[00:20:12.967] Kent Bye: And I feel like something like inworld.ai is a great interface to start to wrangle the really unboundedness of how the large language models, or what Emily Bender calls synthetic text extruding machines. So not to say that it's actually modeling language, but more of like these probabilistic connections between the language that doesn't really have any meaning or understanding, but is able to present as if it is having some sense of meaning based upon all the data it's trained on from humans. But if we look at the type of work that you're doing where you do have these conversational interfaces, I do think that inworld.ai was able to bound the more negative aspects of the hallucinations and other ways that it's really uncontrollable. I feel like inworld.ai was able to give it some sort of control of a narrative arc and to bound its context and present information that was a lot more contained than what I've seen in other chatting with ChatGPT. So you were using that a lot with meetwol.com. And so now you are starting to, in your future projects, use your own sort of custom system to start to create a nodal graph to control the flows and the world building and also the different types of interactions. Yeah, I'd just love to hear you elaborate on the limitations that you were starting to see with inworld.ai and what caused you to develop your own approach for how to create more of a world or a narrative experience while allowing people to have a conversational interface without opening up the Pandora's box of all the negative aspects of LLMs.

[00:21:39.980] Keiichi Matsuda: Yeah, we were lucky enough to have a partnership with inworld.ai and it was really fantastic. The system itself is built around creating NPCs for video games, so it's very good at being able to create characters that have particular worldview, particular knowledge base. But the way we're thinking about it is actually a lot broader than NPCs and video games. We want to create brains for characters that can do any different type of thing, from entertainment purposes to productivity to education and anything in between. And we also want it to be able to drive a lot more than the conversation itself. We want it to be able to have the conversational context and the local context drive the progression through the application, the character's position in space, scene events that are happening in the world around you. So we built our own AI engine called Parabrain, which allows us to be able to have a lot of fine control over the course of the conversation. I think a lot of people have built agents now using an initial system prompt and things like that. And we find that that works very well for the first few minutes. But once you start to get into it more, the conversation and the experience very quickly degrades. So we try to think about new design tools to be able to maintain control and state over the course of that conversation and add more features like being able to have a memory of what the user has done or said, being able to receive many inputs, not just voice, but also images from the world around you, your location, any different thing like that. And then also outputting not just to the conversation dialogue, but also the position in space, the emotion, and even being able to start to generate UI on the fly, creating buttons for different purposes. It's really incredible what you can do with these technologies when you bring them together. 
And I really do feel that it is a new kind of design discipline of thinking about conversational design as it applies to agents in our world.

[00:23:28.311] Kent Bye: Nice. And so we're also here at Augmented World Expo. And unfortunately, as the internet went down, I was not able to do this other demo that you're involved with in terms of Liquid City and the Peridot with visual positioning system. So maybe you should describe a little bit about what this project was and what you were tasked to do in terms of creating a demo of what the VPS can do.

[00:23:47.489] Keiichi Matsuda: Yeah, so Wisp World is a game, it's an RPG, but we're building brains for many different purposes. So we had a chance to collaborate with Niantic to develop a demo using their amazing Peridot IP, but giving it a brain and teaching it about the location that we're in at the moment. So we're in sunny Long Beach and there's lots of interesting things in the world around us. There's an amazing history in the area. There's lots of interesting flora, plants and obviously the kind of things you would expect in terms of shops and restaurants, but even small details like the mosaic artwork on the street around. And we wanted to give the Peridot information of all of this context. So as you're walking around, it can actually teach you about the things in the world around you and try and transform a boring walk into a really exciting experience where you can understand more about the context based on what you're interested in. So we gave Peridot the brain running on our own brain system, Parabrain. And as you're walking around, depending on your conversation context and your spatial context, the Peridot highlights things in the world around you that it wants to talk about. And yeah, you can either just talk to it or you can tap on those different elements and find out more around you. We kind of think that in the future, this can be possible anywhere. But for this experience, we made something that works really well in just one location. And based on the learnings and how we see people observing it, what people's expectations are and what it should do, we're going to continue developing that to create something and also think about what kind of data do we need to store in the world around us as a whole to be able to enable these kinds of experiences.

[00:25:20.527] Kent Bye: I think one of the first times that I remember Liquid City being announced was at a Niantic Labs event where you created an app that was adding a whole layer of virtual architecture on top of the event space. And so here we're at Augmented World Expo at the Long Beach Convention Center. And so similarly, it sounds like you are being able to position some of these virtual objects on top of this architecture. So as somebody who has an architecture background, I'd love to hear any reflections on how XR augmented reality is creating these new opportunities for architects to create these more imaginal spaces or new ways of accessing these relationships of the world and this, I guess, story of the world or this imaginal world that allows us to enter into a magic circle that we can surrender into the awe and wonder of a place that also taps us deeper into the story of that place. So I'd love to hear any reflections on that.

[00:26:15.163] Keiichi Matsuda: Sure, yeah. Niantic's VPS, visual positioning system, launched in 2022 at the developer event, the Lightship Summit, and we created a demo that tied together, I think, something like 20, 30 Wayspots in the area to create a fully continuous experience where you could feel like you were really immersed in a layer of reality that kind of existed on top of the conference. We wanted to test out a lot of different use cases, you know, looking at navigation, walking around, finding out about the conference schedule. We had virtual exhibitions and even we were able to put labels on top of the coffee and tea to show where it came from and the provenance of that. So it was creating a little bit of a slice of the future in that event. Yeah, I guess from my background in architecture, I was always very interested in ways that we could create new types of spaces, new experiences of space that haven't been possible in the past. And it feels now in this conference with all these people walking around with AR glasses on that this future is kind of closer than we might have thought before. I think there are lots of very expressive possibilities for being able to augment existing architecture and create those new types of spatial experience. But I think one of the things that we learned from that is that the limited field of view that you get with today's AR devices means that it's very difficult to feel fully immersed within an environment when you see the edge of the frame all of the time. So with our experiences now, like Wisp World, we pay a lot of attention to edge of frame violations, and the Wisps themselves are very small, so they never really cross the edge of the frame. And because of that, it feels much more immersive. So a lot of this is around trying to understand both what is the longer term vision, but also what makes a satisfying experience today so that people can enjoy it and appreciate it.

[00:27:56.943] Kent Bye: We're here in Los Angeles. There's a book by Lisa Messeri called In the Land of the Unreal, speaking around the history of virtual reality through the context and the lens of this very specific place of Los Angeles that has a lot of facades, some movie industries here. And so it does have this kind of uncanniness that is in this place, but I think also, right now in the world today, dealing with the realities of the world outside and how that is collapsing into our professional context. If I go back to Donald Trump being elected and seeing all the Silicon Valley tech billionaires lined up as the oligarchs next to the president, there's this fusion of technology power and political power in our world that those two worlds are seamlessly blending together. As I'm here at Augmented World Expo, I feel that uneasiness or unsettledness of this larger context and the almost like dissociation and disconnection from that larger story and this kind of business as usual. And so it gives me a weird feeling in my gut. Just curious to hear some of your thoughts as you're coming back into the United States and how you navigate this unreality, dissociation, or just the... negotiating of all these different worlds blurring together in a way that tech and politics and ways that used to be separate are now not and all the larger implications of the industry and where it's at and where it's going.

[00:29:14.062] Keiichi Matsuda: Wow, yeah, Kent, you're right. I mean, the cognitive dissonance is really real at this event. I think the day after I landed, the National Guard came in. The day after, the Marines. It's happening, I guess, just a few miles away. But down here on Long Beach, it very much is business as usual. I do find it strange that this industry is... Yeah, I do think that technology has a power to be able to influence and drive positive change in the world and I would like to see it applied to some of those problems. I think one of the things we talk about a lot with VR especially is around empathy, around being able to see what life is like for different people and I think in the early days of VR particularly it was often referred to as a kind of empathy machine. It does feel now though that lots of the use cases is really around how can we make money right now. Obviously, the industry needs to survive, especially as more funding gets diverted towards AI. So it has become much more pragmatic. And I think there's a lot less space or will to engage with areas that are directly outside of the more kind of global issues or national issues. I don't know how much more to say about this. I always wanted to think about how technology works in a larger social context and it definitely worries me that with these devices that filter our view of the world as well as taking in information about our context. What are the implications for privacy? What are the implications for the way we consider our civic life, connecting with other people in the world around us, even if we might not know them or we might not lead the same lives as them? So my hope is that this next generation of technology that's being developed now to supposedly replace smartphones is going to be able to bring in more consideration around local context, our urban environment, and connect us more to the people around us instead of isolating us. 
But this is not a won battle yet and I think there's a lot more work to do so that we can really try and bring those possibilities to the forefront. I don't like that there are assumptions applied to entire industries around people's political perspective or things like that. And I think there are plenty of people who are fascinated by technology and want to create a better world which is more empathetic and more inclusive. And we just need to keep showing up, keep showing a better way. And hopefully, you know, people will start to build that because I don't think anyone wants to build a dystopia. I think if people can see the positive vision, then they'll make it. And we really do have a responsibility, especially as storytellers, to tell the stories of how it could be going right. You know, we've seen years and years of AI dystopia in cinema and literature. But what we really need now is the positive vision to sort of help people to set new goals and really kind of step their ambition up so that it's not just about solving a small problem, but it's actually about the reinvention of the way that we interact with computers and therefore the whole world.

[00:32:14.505] Kent Bye: Yeah, thanks for that. And I feel like that unease is a bit of uncertainty as where this is all going and also existing boundaries that used to be there are not there. And also, it feels a bit of a new cycle. And this year, particularly, AI being thrown into the mix as almost the silver bullet that's going to solve all the problems of XR and solve all the marketing and problems of adoption and make it go mainstream and all that kind of rhetoric and talk. And yet I come back to Hyper-Reality, your prophetic piece around a world where we do have this far end of capitalism mixed with these surveillance technologies to control every aspect of our lives, to overlay these different stories about our lives, but in a way that is really around automation and control and taking away of our sovereignty and our right to self-determination, our cognitive liberties, all these things that I feel like AI as an automating technology is also a part of that, of consolidating that wealth and power. So I know that you're someone who's been thinking deeply around these issues and creating these speculative futures as a cautionary tale. But sometimes those cautionary tales become a roadmap for people to say, oh, yeah, well, let's build this. This looks really cool. So the intent of it was to critique, but then it becomes like a source of inspiration for people to build. So I feel like I'm in this place where I wouldn't be surprised within nine years if we start to unconsciously start to live into this kind of hyperreality world where, yes, we've had the iPhones and ways that we've used a smartphone to escape into these portals of other realities. Maybe XR will get us out of that, but yet the risk is that it just creates another immersive portal of control and reality manipulation that is at the world scale rather than in the scale of looking through a 2D screen. 
So something you've thought obviously a lot about and where you're currently thinking around if it's really through these AI agents and trying to create out of the monolithic, monotheistic approach and more of a polytheistic approach that's much more on the metaphor of a puppy rather than a metaphor of a god. But yeah, just curious to hear any of the other thoughts or reflections on trying to dream into a future that we actually want to live into.

[00:34:15.580] Keiichi Matsuda: Yeah, it's a great question. I think I started off being like when I start to understand about AR glasses and what they can do, realizing that they are essentially a kind of perfect surveillance and manipulation machine that they can have cameras and microphones that are always on. They know which way you're looking. They can see what objects are in your house. They can deduce a lot of things around you and your life through the conversations that you have, your daily routines. But then we also have the screens and the speakers that allow the manipulation of that reality, right? The layering in of information that is provided by this third party, which can sometimes be unseen and act in very subtle ways to modify our behavior, maybe outside of what we can understand ourselves. So thinking about a world like Hyper-Reality, where we consider that surveillance is the norm and that all reality is mediated, kind of led down this path to think about what agency do people really have in that. And I think it's very easy to get jaded, disillusioned, frightened about what these technologies will bring and therefore shut them off. But I think it's actually also a false dichotomy that these big AI companies, these very powerful individuals, hold all the keys to this future. But it's really not true. I mean, our engine, for example, we can simply click on one of the nodes and change it to OpenAI, Google, DeepSeek, Llama. Some of those models can be very small. 1 to 3 billion parameter models can now run on smartphones. And we can securely host stuff locally as well. And therefore, even thinking about things like environmental impact of using these things is much more known. We're able to run those things on our own devices. So the only thing that's heating up is the phones themselves. 
So I think rather than trying to sort of fetishize these big companies and think that they're creating these all powerful gods, we should know that those possibilities are available to us in an open source or open weight format already. And actually we can really bypass all of this stuff and build it ourselves. I kind of struggled working in big technology companies because I felt that the sort of power that they had was difficult sometimes to control or predict. But working on smaller projects, which are much more ambitious, much more radical, helps us to see actually that this future is not decided. Some of those big technology companies don't have a really strong idea about how these technologies are going to benefit people. So I feel that if we can create stories, if we can create prototypes of technologies that are working in a different way, then I think we have a real shot at being able to change the way these technologies are perceived and adopted.
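[Editor's illustration, not part of the interview.] The idea of clicking on a node and changing which model it runs on can be sketched as a node that holds only the name of its backend, so swapping providers is a one-field change. This is a minimal sketch with hypothetical names (`Node`, `BACKENDS`, `local_small_model`, `hosted_model`); the two backends are stand-in functions that make no network calls and do not represent any real provider's API.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# A backend is any function from prompt text to reply text.
Backend = Callable[[str], str]


def local_small_model(prompt: str) -> str:
    """Stand-in for a small on-device model; nothing leaves the device."""
    return f"[local] {prompt}"


def hosted_model(prompt: str) -> str:
    """Stand-in for a hosted provider; a real one would call that provider's API."""
    return f"[hosted] {prompt}"


# Registry of available backends, keyed by a short name.
BACKENDS: Dict[str, Backend] = {
    "local": local_small_model,
    "hosted": hosted_model,
}


@dataclass
class Node:
    """One step in an agent graph; the backend is looked up by name at run time."""
    name: str
    backend: str = "local"

    def run(self, prompt: str) -> str:
        return BACKENDS[self.backend](prompt)


node = Node(name="describe_landmark")
print(node.run("Tell me about the mosaic."))   # uses the local stand-in
node.backend = "hosted"                        # swap the model for this node only
print(node.run("Tell me about the mosaic."))   # same node, different backend
```

Because the rest of the graph only sees `Node.run`, no single provider is baked into the design, which is the point being made about not depending on any one company's model.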

[00:36:51.875] Kent Bye: And finally, what do you think is the ultimate potential of all these spatial computing, immersive technologies, and AI, and what might they be able to enable?

[00:37:01.428] Keiichi Matsuda: I mean, from an interaction point of view, it's taking the focus away from the object, the fetishized rock pebble or whatever we think of it, and pushing it out into the environment around us. And it's also doing the same with our interaction. Rather than having to have these discrete inputs and trying to describe to the computer what you want the whole time, the computer can kind of know. It understands us because it can see through our eyes. So potentially this allows us to be able to remove a lot of the effort of being able to operate a computer or a machine and allows us to be able to hone in faster and more deeply into the things that we actually want to achieve, focusing more on the value rather than the interaction itself. I think that has potentially some of the privacy pitfalls that we've discussed already, but there's nothing to stop us from developing it in a way that can be more humane, more ethical, with respect to people's dignity, people's privacy, and the environment as well. So my hope is that we can usher in this new age of contextual computing in a way that doesn't compromise us.

[00:38:04.784] Kent Bye: Awesome. And anything else left unsaid you'd like to say to the broader immersive community?

[00:38:05.104] Keiichi Matsuda: Well, obviously, just thank you for your work, Kent. Sticking in here through the good times, through the bad times, reporting on what you see. It's really important that this industry is seen, is understood, that the conversations that people are having here are recorded. I really think being a witness to this thing is an important job. So I really thank you and actually all of the listeners as well for listening.

[00:38:31.129] Kent Bye: Awesome. Well, Keiichi, you're someone who always is on the bleeding edge of some of the future trends of this technology and sometimes ahead of your time in terms of seeing where some of this might be going. Hopefully, we don't go down some of those darker paths. But yeah, I think just thinking around these agents as not these gods, but more of these pets or spirits, familiars, whatever metaphor you want to use to have us engage with the world through these agents in a way that is extending our own agency through these entities. And so, yeah, I think that's a nice metaphor, and I think it's a guiding metaphor: rather than having an all-knowing single entity, more of a pluralistic approach of having them maybe even debating against each other or competing for different perspectives and motivations. But yeah, I really appreciate also just the aesthetics of the different pieces that you're creating that are also embodying these philosophies and design principles and really pushing forward, again, moving beyond just speculative futurist films into these interactive experiences that also have a lot of deep thinking around where this is all going. So I always appreciate seeing what you're making and being able to talk about it. So thanks again for joining me here on the podcast. Thank you.

Thanks again for listening to this episode of the Voices of VR podcast. And if you enjoy the podcast, then please do spread the word, tell your friends, and consider becoming a member of the Patreon. This is a supported podcast, and so I do rely upon donations from people like yourself in order to continue to bring you this coverage. So you can become a member and donate today at patreon.com/voicesofvr. Thanks for listening.