I did an interview with Norm Chan at Meta Connect about the Meta Ray-Ban Display Glasses and Meta Neural Band. Be sure to watch Chan’s full 58-minute Tested.com report, which includes an interview with Meta CTO Andrew “Boz” Bosworth as well as his hands-on impressions of the biggest announcement at Meta Connect 2025. You can also see more context in the rough transcript below.

This kicks off my Meta Connect 2025 coverage, which will include about a dozen interviews that I did on site unpacking the news and reactions to Meta’s emphasis on AI-driven wearables, and what’s happening within the broader XR industry and VR gaming ecosystem.

Here are links to all of the interviews that are a part of my Meta Connect 2025 coverage:

- #1652: Kick-off of Meta Connect Coverage with Meta Ray-Ban Display Glasses Insights from Norm Chan

- #1653: XR Analyst Anshel Sag on Meta’s AI Glasses Strategy

- #1654: CNET’s Scott Stein’s Reflections on Meta Ray-Ban Display Glasses Implications

- #1655: Meta Horizon Studio News and Virtual Fashion with Paige Dansinger

- #1656: Kiira Benz Part 1: “Runnin'” Large-Scale Volumetric Music Video (2019)

- #1657: Kiira Benz Part 2: “Finding Pandora X” Bringing Immersive Theatre to VRChat (2020)

- #1658: Kiira Benz Part 3: Immersive Storytelling Career Retrospective (2025)

- #1659: VR Gaming Career Retrospective of Chicken Waffle’s Finn Staber

- #1660: Enabling JavaScript-Based Native App XR Pipelines with NativeScript, React Native, and Node API with Matt Hargett

- #1661: State of VR Gaming with Jasmine Uniza’s Impact Realities and Flat2VR Studios

- #1662: Meta Connect Highlights & Meta Horizon News with JDun and JoyReign

- #1663: ShapesXR Updates & Neural Band Design Implications of Transforming Your Hand into a Mouse

- #1664: Resolution Games CEO on Apple Vision Pro Launch + Gaze & Pinch HCI Mechanic in Game Room (2024)

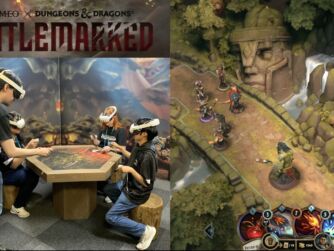

- #1665: Resolution Games’ “Battlemarked” Blends Mixed Reality Social Features with Demeo and D&D Gameplay

- #1666: VRChat CEO Graham Gaylor on Exploring Various UGC Monetization Strategies

This is a listener-supported podcast through the Voices of VR Patreon.

Music: Fatality


Rough Transcript

[00:00:05.458] Kent Bye: The Voices of VR Podcast. Hello, my name is Kent Bye, and welcome to the Voices of VR Podcast. It's a podcast that looks at the structures and forms of immersive storytelling and the future of spatial computing. You can support the podcast at patreon.com slash voicesofvr. So I'm going to be diving into my Meta Connect 2025 coverage, starting off with an interview I did with Norm Chan, who's the executive editor and co-founder of Tested.com. He had an early look at the biggest announcement hardware-wise at Meta Connect this year, which was the brand new Meta Ray-Ban Display glasses and the Neural Band, which is the EMG wristband that was made by CTRL-labs, which Meta acquired. And so these two things represent this pivot towards what they showed last year, which is the Orion AR glasses that is the eventual form factor they're headed towards. But they're starting with this 2D plane and this HUD, with this 600 by 600 display in the lower right-hand side. And also this Neural Band, which I think is probably the most interesting new announcement out of Meta Connect this year in terms of a vision of human-computer interaction, where you're using small, subtle gestures with your fingers. They're starting off with transforming your hand into a TV remote, essentially, where you have a two-axis D-pad where you can use your thumb on your index finger to kind of swipe left or right, up or down. And then you pinch with your thumb to index finger to accept or the middle finger to thumb to go backwards in the UI. So you kind of have your mouse of sorts, but I think of it more as transforming your hand into a TV controller. And so I had a chance to talk to Norm about it because at this point at Meta Connect, it was before the keynote, it was before it was formally announced. I did have a chance to attend some of the demos in the press day, but was not invited to do an early access to the demo. 
I only got a chance to see it after the keynote, where I had a chance to see what ended up being a little bit more of an abbreviated demo, but also they didn't ask for my prescription. And so I have a negative 5.5 prescription and the Meta Ray-Ban Display glasses only go up to negative four or positive four, and they didn't add the inserts. So if you do end up going to see a demo, you can only buy this if you go to, like, Best Buy or one of the Meta Lab stores that they're doing. They're going to be launching September 30th. It's going to be like $800 and you get it bundled with both the Meta Ray-Ban Display glasses and the Neural Band. So just a couple more thoughts on this, just because I do think that this is moving towards this vision of AR glasses, but they're also calling these AI glasses because they're seeing the primary use case as artificial intelligence. And so they're likely subsidizing these at $800, and they probably cost a lot more. They're doing this loss leader to try to get movement into the market to have more and more people using the form factor of these glasses that have cameras and microphones and speakers so that you can start to interface with artificial intelligence as you're going in and about your day. 
The biggest question that wasn't answered until the second day of Meta Connect was whether or not developers would ever actually get any access to this at all. And they did announce a Wearables Device Access Toolkit at the end of the developer keynote that is going to be in preview access and only available for the existing AI glasses, like the Ray-Ban Meta glasses, and will be giving access to the camera, the speakers, the microphone, but nothing around the Meta Ray-Ban Display, like getting access to the HUD or anything like that. So basically, if you're a mobile phone app developer, you can start to give access to the wearable AI glasses to start to do more conversational interfaces for them, to take a photo and to identify it, to take over access to the speakers and to play back different audio pieces. A lot of people that I've talked to ended up using their existing Ray-Ban Meta glasses as more of an audio device, which is what Norm does. And I'll be sort of diving into some of the other announcements that they made with the Vanguard Oakley glasses and, you know, more around this kind of movement of AI glasses in the next interview with Anshel Sag. I'll basically unpack different aspects of what I saw to be some of the most notable news throughout the course of my dozen or so interviews that I did at Meta Connect. So I think it does represent a larger movement and pivot towards what seems to be taking off. They're calling the Ray-Ban Meta smart glasses one of the most successful consumer launches of new technology in a long time. So they see a lot of momentum and crossing over into the mainstream, where people from completely outside of the XR industry are finding utility in having these glasses that have access to the camera and using them as a Bluetooth speaker and microphone, but also just doing more hands-free types of computing. 
When I listen to what Boz and Zuckerberg are saying in interviews, they're saying they looked at the top 10 things that people do on their phone and asked how they can start to create devices like the Meta Ray-Ban Display glasses to add those first-party apps that allow you to not take your phone out to look at it. So everything from taking photos and videos, and it's nice to have a little preview of what you're seeing because in the previous versions, you have no idea what the framing is or anything, but also text messaging and communicating. And they did show in the keynote, and also I think Victoria Song is the only reporter that actually got to try this out with the Neural Band, which was turning your finger into, like, typing out letters, writing with your index finger, and then that motion is able to be translated into communicating. And Mark Zuckerberg said he can type around, like, 30 words per minute or so. So it's something that over time they're going to be adapting to these more and more kind of user interface innovations with the Neural Band. So that's probably the most significant announcement in terms of moving forward. This is something that's going to persist. So they're seeing this as the first step towards a larger vision and a bit of a pivot away from VR. There were VR demos there, but they didn't invite any of the VR content creators in terms of YouTubers that they usually have. And I had to honestly fight for access this year to even get invited to go and to then get a press badge. So I managed to be there and to cover it like I normally do, but that wasn't a sure thing. And so I'll do my best to try to cover all the other aspects that were at Meta Connect this year through the series of these interviews. So that's what we're covering on today's episode of the Voices of VR podcast. So this interview with Norm happened on Wednesday, September 17th, 2025 at the Meta Connect conference at Meta's headquarters in Menlo Park, California. So with that, let's go ahead and dive right in.

[00:06:25.936] Norm Chan: Hi there. I'm Norm Chan. I'm the executive editor of Tested.com, the YouTube channel Tested, and have been covering XR, VR, AR headsets for, gosh, almost 14 years now.

[00:06:39.695] Kent Bye: Great. Maybe you could give a bit more context as to your background and your journey into the space.

[00:06:43.870] Norm Chan: Yeah, so I tried VR with the Oculus DK1, definitely wasn't in the PC gaming space beforehand, so really interested in how those games adapted to HMD. And then since then I've been very fortunate to chat with a lot of companies, making sure to try as many headsets as possible. I was even invited to Reality Labs Research to try some of their prototypes a couple years ago as well. But what's funny is, of course, this year's Meta Connect is not about headsets. And it's not really about VR. It's about glasses. They're smart glasses.

[00:07:14.914] Kent Bye: Yeah, we've definitely been seeing a shift over from XR, VR, mixed reality over into these AI-driven smart glasses. And so, yeah, you said that your glasses that you're wearing now are basically the Ray-Ban Meta smart glasses you've been wearing for the last two years. So maybe just talk about your own experiences of using these glasses.

[00:07:33.777] Norm Chan: Sure. I think everyone remembers the Ray-Ban Stories were not very successful, right? So it was a great partnership, and it looked like Ray-Bans, and they got the form factor right. They were camera glasses, and they were fine, but they were more sunglasses. And two years ago, along with the launch of the Quest 3, they launched the Ray-Ban Metas. And I was there for a press briefing, and it was the first time seeing the Wayfarer frame for that, and the features that they decided to put in, a little bit of live streaming to their social platforms, the higher quality video recording and the speakers, it made me feel like maybe I want to try these for a little bit. And it just turns out the glasses I was wearing at the time, and I've been wearing for a long time, you know, the ID, the visual ID looks a lot like the Wayfarer glasses. So I'm like, okay, I can change one style of black-rimmed glasses to another style. And I started wearing the Ray-Ban Metas, ended up not caring about the live streaming at all. And even like the video recording, photo taking, the photos are fine. You know, you go through this phase in reviewing a product where you overuse it for the first couple weeks, took a ton of photos, and over the past two years, I take a photo every now and then, but it really has been, for me, essential as an audio device to listen to music, to listen to podcasts, to make phone calls. I love, maybe it's cliche now, but I love walking my dog and listening to podcasts and having it not be something plugged in my ear like a pair of AirPods. They're not bone conduction either, but they're just kind of on-ear directional speakers that point right at me that don't take me away from the outside world. Apple has something similar, right, in the AirPods. 
They have the, I forgot what they call it, but it's like the pass-through feature where it lets some of the outside world sound in so it doesn't do noise cancellation the whole time, so you can feel a little more present. And my analogy that I like going to is that, you know, what the AirPods Pros do with noise cancellation and their pass-through audio is kind of like the equivalent of pass-through video for a VR headset. And what the Ray-Ban Metas and on-ear speakers do, that's like the equivalent of pass-through optical video for an AR headset. Like I feel more connected to the real world. I feel less isolated, I should say, wearing these glasses while still being able to have the content that I want.

[00:09:43.311] Kent Bye: Yeah, and so this year at Meta Connect, we're about to have the keynote that's starting here in about an hour or so, where Mark Zuckerberg's going to be announcing a lot of things. And we've had press demos all day for a bunch of stuff that's going to be announced. And you've also had a chance to see some closed door demos of some of the products that only a small handful of folks got a chance to see, which is more of a monocular display with also the CTRL-labs EMG wristband. So love to hear a little bit of your impressions and first thoughts of a little bit more than smart glasses, not quite as mixed reality, not quite as much as like the VR, but kind of on the road towards like more of an Orion form factor. So love to hear some of your impressions.

[00:10:21.448] Norm Chan: Yeah, it's so funny because last year there was Orion, which is clearly their high end, their North Star, all the technology they can put in a pair of real augmented reality glasses because those do SLAM world tracking, you know, inside out tracking. They recognize the world. They're going to align holographic images to the space. These are not that. These are heads-up display glasses. And I don't know if we have a consensus on the name for them. Smart glasses, AI glasses. Certainly AI glasses is popular, because all of these, whether it's Google, you know, Samsung, Meta here, and maybe eventually Apple, they want these to be a way for you to connect with their services, their AI services. But right now what they're putting out is something that's basically the Ray-Ban Metas but with a heads-up display on the right lens. They're calling them the Meta Ray-Ban Display. So Ray-Ban Meta, this is the last gen, and this is Meta Ray-Ban. They've swapped the name around, but it still sells the Ray-Ban branding. They're a little bit bulkier, a little bit thicker rim. The side arms are taller to accommodate the processing and the battery. And now most notably is that they have this waveguide on the right side. 20 degree field of view, 600 by 600 pixel. They're calling it a 42 pixels per inch. So it's not necessarily the pixels per degree, but it's high. It's readable. And they've created a whole new OS to show the information that you previously would have gotten only as audio, now in a pop-up HUD.

[00:11:43.070] Kent Bye: Yeah. And so if you were to try to describe where they're at in the frames, are they lower? Are they in the middle? How would you describe where it's actually located on the right lens?

[00:11:50.996] Norm Chan: Right, right. So it's 20-degree field of view. And while the display is like 600 pixels by 600 pixels, the content really fits more of a narrow, I would say, a 3 by 4 aspect ratio. And you're definitely looking a little bit down. If you're looking forward, it's not right in your center field of view. You are eyeing it a little bit lower. 20 degrees does feel like it's not like a sliver of content. It actually is meaningful in terms of how much text or an image. Like if you put up a full, like a live camera preview, like live camera view, it's a vertical photo, it's 9 by 16 aspect ratio, you can make out details in the presentation of that, right? So it's more readable than like the Google Glass that you got years ago, and there's definitely more opportunity to present not just graphical data information but, you know, flowing conversations, text data, and I think a lot of applications that they've developed first party service those. So messaging, live camera preview, navigation even, music playback, your album that you see. There's no web browser or RSS feeds or anything like that. I think they're starting primarily with first party stuff.

[00:12:50.652] Kent Bye: And can you walk through what kind of demos you saw in order to experience this product?

[00:12:55.322] Norm Chan: Yeah, yeah. And I guess that's a good point to bring in that along with these glasses are the EMG bands from CTRL-labs. So this is definitely part of the almost exact same idea as what I saw in Orion last year. And they really hinted last year that this was a thing that, you know, they're really confident with and proud of that could make its way into a product. And it's funny because it's two wearables that they're bundling together, and the wearables almost can function independently. Like the glasses technically don't need the wristband to operate. There's a touchpad on the side, a four-way touchpad, two-axis touchpad that you can use to navigate. And the wristband technically doesn't need to be a control interface for glasses. It could be a control interface for all sorts of computing, right? But the fact that they want to marry these things together says a lot about how they think about, one, where their strengths are, and two, about what type of experience, you know, the total being greater than the sum of its parts. And so the demos are tied to popping up the HUD and getting glanceable information by using these micro gestures, by tapping your thumb and index finger, tapping your thumb and middle finger, doing like the equivalent of a touchpad using your thumb on top of your fist, and then, uh...

Kent Bye: So you say your fist, you mean like your opposite hand, you use your...

Norm Chan: No, no, sorry, sorry. You make like a small fist as if you're making a thumbs up with your dominant hand that you're wearing the wristband on, and then you rub your thumb across the top of your index finger as if it was like a touchpad surface, you know, go up and down, left and right. And then one new gesture, which was a tap and pinch, and then rotate your wrist as if you were turning like a volume knob, which acts like a granular slider. And the best thing I could say is all of these worked. They just worked. No training, no calibration. You put the wristband on. 
You have to put it on pretty tight, because that's the contact points across your wrist. Everyone's wrist is shaped differently, where the muscles and the nerves are and the bones are, right? But the accuracy rate, I felt, was really impressive on this. And so you use that to navigate, and then you, again, like you said, you go through a bunch of the standard things you'd be pulling out, I would say not just your phone, but your, like, smartwatch for, right? Messaging, right? Video calls, video calls work with WhatsApp. They don't have an Instagram Reels app yet, but they had a shortcut that let you basically preview a Reel. Navigation was a thing I didn't expect for them to have, but navigation, you use the AI assistant, the AI to search for the nearest coffee bar or something, and then it'll provide you walking directions in the HUD.

[00:15:11.944] Kent Bye: Just a quick question on the location. Does it have a GPS receiver in it, or is that all coming from a phone?

[00:15:17.669] Norm Chan: I think that's coming from the phone. I think it has a compass in it, but I think that's all streaming from your phone. This is that Meta AI app. So while it's not technically a, the compute puck is your phone, essentially, right? There's processing that's done on the headset for, on the glasses for display, but it's sending the queries to your phone, to Meta AI, then getting that stuff done. There's also captioning, so I think live translation is a part of it, because that's part of the Ray-Ban Metas, but what they demoed for me is live captioning. So with their directional mics, if you're sitting across the table from someone or at a restaurant, they simulated for me a noisy environment, so a lot of other people chattering in the vicinity, and the person I was facing and talking to talked to me, and what they said popped up, kind of like in a live transcript, as if you were dictating to your phone. So that was neat. And then I guess that was, that might have been it. And you're seeing, like, AI features, right? You know, take a picture of this, tell me what you're looking at, right? As opposed to hearing the response, you're seeing the text prompt as a response, as well as some follow-up queries, you know, the way Facebook does with, like, posts, like, would you like to know more? Here are five things the AI thinks you might be interested in. So you can do that deep dive. There's a home screen of sorts, eight icons to launch applications from, a control center, brightness, volume control as well. It got pretty bright, worked outdoors. These are transition lenses, so they will go darker, but transmissibility was really good.

[00:16:42.866] Kent Bye: Now pixels per inch is usually what they use for phones, and so how do you translate that into pixels per degree, just so you get a better sense of how it's relative to other headsets?

[00:16:51.451] Norm Chan: Yeah, I got to double check on the fact sheet for this. I want to say, I got to check whether they meant pixels per inch or pixels per degree. They were really proud of the pixel density, is what I can say. If we do the math of 600 pixels wide by 20 degree field of view, that doesn't really get us to 42 pixels per degree. But I can say in terms of readability, text was very legible. It was bold text, white text on dark background. You know, there was some contouring on the text to give it more definition. It wasn't as sharp or crisp as looking at a phone, you know, or high resolution tablet. It did feel like maybe because of the way the waveguide is designed that, you know, there's a little bit of that glow behind it. And certainly things were bright, but not opaque. I don't think you're going to get fully opaque with any type of waveguide unless you're introducing some blacking out of the lenses, you know, like the Xreal glasses do.
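
Editor's note: the arithmetic Norm refers to can be sketched in a few lines. This is a rough illustration only. The function names are hypothetical, the calculation is an average across the field of view rather than a measurement of the optics, and the second case assumes that the quoted 20 degrees is a diagonal figure for a square display, which is not something stated in the interview.

```python
import math


def pixels_per_degree(pixels: int, fov_degrees: float) -> float:
    """Average angular pixel density: pixels spread evenly over a field of view."""
    return pixels / fov_degrees


def side_fov_from_diagonal(diagonal_fov_degrees: float) -> float:
    """Side FOV of a square display given its diagonal FOV.

    Uses the small-angle approximation (side = diagonal / sqrt(2)),
    which is an assumption made for illustration.
    """
    return diagonal_fov_degrees / math.sqrt(2)


# Reading 1: 20 degrees is the horizontal field of view across 600 pixels.
horizontal_ppd = pixels_per_degree(600, 20)

# Reading 2 (assumption): 20 degrees is the diagonal of a square 600 x 600 display.
diagonal_reading_ppd = pixels_per_degree(600, side_fov_from_diagonal(20))

print(f"20 degrees across 600 pixels: {horizontal_ppd:.1f} PPD")
print(f"20 degrees diagonal, square display: {diagonal_reading_ppd:.1f} PPD")
```

Under the first reading the density works out to 30 pixels per degree, which is the gap Norm points out. Under the second, assumed reading it comes out at roughly 42, so the two figures are not necessarily in conflict.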

[00:17:41.118] Kent Bye: Well, I know that Meta for a while was working on their own operating system, and did they basically keep working on that for this project? Or do you have a sense if it's like a fork of Android, or what do you know around what the operating system is that's running this?

[00:17:53.941] Norm Chan: They didn't confirm any of that. My suspicion is that this is probably not the OS that they were rumored to be developing on their own before they were also reported to have axed it. If I was to guess, I would say a fork of Android. I think Orion as well was probably a fork of Android.

[00:18:07.850] Kent Bye: And curious to hear your thoughts on the overall experience of these two paired together with this EMG wearable wristband with AR glasses, and if this is something that you could see yourself wearing all day and starting to use.

[00:18:21.980] Norm Chan: Yeah, I mean, I definitely want to. I mean, the form factor was much more palatable than Orion. As proud as they were of Orion getting closer to a socially acceptable form factor, I think that was certainly ways away from that. And this, while being thicker and bigger than the Ray-Ban Metas, felt like something I could definitely see wearing as an everyday wear of glasses. Slightly heavier, 69 grams versus, I think, 50 grams for the Ray-Ban Metas. But it feels like a watch, right? It feels like the first gen Apple Watch of the type of things you'd be doing. I think they're going to figure out what people want to do with a HUD. And the big question, which I don't know if they're answering today, is whether they'll open any developer ecosystem for this, because everyone wants to use glanceable information for different purposes. Personally, I would love sports, right? All the reasons I pull out my phone in the middle of the day or on a weekend, if I can not have to do that and get that in a pop-up HUD and not break a conversation flow or interactive moment with friends and family, I'd welcome that. I also felt that the EMG band was really intuitive, and I want to see it used elsewhere. I think, again, they have to find the right fit for why does Meta want to make a control band for other products when what makes money for them is Facebook and Reels and Instagram and all these services? Why would they make essentially a controller for computers? They have to find the business sense for that, but I think they probably have a sense that this is a really cool interaction paradigm that they want to put somewhere. If they're the only ones doing it or the first ones doing it, they don't want to lose that lead.

[00:20:03.793] Kent Bye: Yeah, and I guess what were some of the other impressions you got from being able to talk to other people around this? Because you're going to be releasing nearly a 60-minute video as soon as the embargo lifts. So I'm just curious to hear some of your other insights that you got from being able to get early access and to ask the team all about it.

[00:20:21.468] Norm Chan: You know, I think I'll say two things from the people, other press who've used it, like everyone agrees about the potential of the EMG band, right? That seems like they got something there. They have something, you know, it's not a dedicated accessory on its own. It's not a fitness tracker. It's not a watch, but it could be. We've seen the research videos from CTRL-labs about typing on a phantom keyboard with two of these bands, just doubling the input. What does that mean? That seems to be, again, a lot of potential. I don't know if, again, it makes business sense for Meta. But on the other side, the pricing is going to be really interesting. So $800. The kind of subtext I got is that they're really happy about this pricing. And happy in that, like, they're really proud of the fact that they got it to this price, which tells me that probably either they're doing this at cost or taking a loss, or the tech is probably either a low yield or high cost product for them. But I don't think the market can bear a $1,000, $1,500 first gen product. And so it's that tough point of like, is $800 too much? It still is a luxury product. So I think they have a lot of momentum with $200, $300 sunglasses that they're selling right now. And Ray-Ban and EssilorLuxottica make luxury products. So they have some buy-in there. But $800 is a lot of money.

[00:21:36.305] Kent Bye: Is that for the glasses and for the EMG band?

[00:21:39.607] Norm Chan: Yeah, it's a combo. I think they're only selling it that way. And again, it tells me that the cost is probably in the glasses for them to manufacture that. So it's not a dev kit. It's an early adopter piece of niche tech. And it could be the beginning of a mainstream thing. Again, so much of this reminds me of the first gen Apple Watch. You had people who didn't buy watches, never spent $500 on a watch before. And now they're ubiquitous. And so I think Meta looks at that and probably sees like, OK, this is our potential to get people buying hardware and spending premium money on hardware. But for them, of course, they don't make money on hardware. They make money on services. So there's the whole Meta aspect to that, right?

[00:22:19.982] Kent Bye: Well, I know that in the video they sort of showed someone that was like sort of writing on the table, like almost like if it was able to detect handwriting. And there was rumors that they had this as sort of a demo for handwriting. I don't know if you saw that demo or heard about that.

[00:22:33.140] Norm Chan: I saw the demo and I wasn't able to experience it, but the person giving the demo did that handwriting demo. Assuming it wasn't pre-scripted and it was a real-time demo, they put their hand to their lap and pretended to write. Didn't look like it was in cursive, but they wrote a sentence in a messaging app and I was able to get it without any typos. They also said no additional training required. It wasn't specifically trained to their handwriting. I think what they're going to do is probably open that up in early access beta. It's not going to be a default thing, it's an opt-in. But again, this goes to the ceiling of what EMG can do. Because if it was just tap gestures, you can get good tap gestures on an accelerometer on a watch today. There have been some cool stuff we've seen at AWE with reinforcement training and trained models on just gyro data to capture taps. So this is more than just taps.

[00:23:20.839] Kent Bye: So they did the demo, but they didn't let you do it?

[00:23:23.119] Norm Chan: No. No, I didn't get to do any of the handwriting. But looking forward to it.

[00:23:26.200] Kent Bye: So do you expect that you would ever see people using two EMG handbands? Because typing is a lot faster. That seems to be the way that people are the highest level of communication. And so just curious if you expect that at some point they may add a second EMG band so that you could do more two-handed interactions or something that you're actually typing and doing input that would be a lot faster.

[00:23:48.004] Norm Chan: I think just based on the research we've seen come out of CTRL-labs, that's a no-brainer. Whether they'll productize it, again, goes to them having to make a decision about are they in the business of making input accessories? But assuming that their cost on the wristbands is comparable to peripheral costs, $100 keyboards, gaming mice, there's no reason I couldn't see $100 wristbands, a pair of wristbands, or $150, or buy them a la carte. Utility with one, but extra utility with two. But you're also talking about software investment in interface, right? And they might realize that with non-dominant hands you get much less utility with the input, right? So it's what the incremental utility is versus the costs.

[00:24:31.482] Kent Bye: Now, battery life, in terms of the other lines that they're upgrading, they seem to be updating that. Did you get a sense of what their expected battery life is once there's a display there? How's it going to impact the battery?

[00:24:43.286] Norm Chan: They said six hours. And they said with the charging case, 30 hours. They didn't say how frequently you pop this up. I guess one question people didn't know is whether the display is always on, and it's not always on. You wake it, notifications wake it, but presumably with regular use, I'm sure things like live view camera probably consume more power. That's all happening internally in the glasses. But even at six hours, that's like right now with the Ray-Ban Metas, my big thing is battery life. I know the newest gens have longer eight-hour battery life, and the HSTNs have longer battery life than the first-gen Ray-Ban Metas, but I actively turn mine off on the physical switch, and I turn off the voice activation just to not consume battery life, to stretch it out. And that's only for my primary use case of listening to audio and using it as a microphone. If I'm starting recording video, record three-minute video, battery just zips it out of there. And it's not like you can swap out the battery, replace the battery, right? I've been using these for two years. You know, you think of how long people keep consumer products, like phones, you keep them over multiple years. And when the battery is that small, you don't want battery to be 50% what it was three years down the line on an $800 product. So that will always be a thing. Yeah.

[00:25:55.584] Kent Bye: Great. I know you had a chance to see the Hyperscape demos. Any other demos you want to mention?

[00:25:59.995] Norm Chan: Hyperscape I thought was really cool. People who haven't tried it, they should try it just to see what it's like to explore a Gaussian splat in first person. The capture of that opens up a large opportunity, right? Like for me, I'm like, I want to capture my kids' bedrooms as they are now, as those spaces change, those physical spaces that I love and associate with change over time. This is another way for me to capture those spaces quickly, in five or six minutes. But obviously they want to funnel that into Horizon Worlds. That's a big bet, right? And in chatting with the product designers there, they foresee a future where it's Gaussian splats as environmental capture to live alongside mesh objects and mesh capture and also being a social space. So those aren't available now, but it feels like more of this type of content is essential to that vision of a virtual metaverse.

[00:26:45.163] Kent Bye: Any other reflections on this kind of pivot that seems to be going down these AR glasses and smart glasses and a little bit less emphasis this year on VR, even though there are a lot of VR demos here, but kind of the big marquee news that's coming out this year is more on the frontiers of AR and AI. So just love to hear any comments on the larger ecosystem of XR as you've been covering the space very, very closely over the last 14 years.

[00:27:06.860] Norm Chan: Yeah, I think everyone following Meta and the Quest ecosystem is kind of on edge, because you see this feels like a pivot, and the hope is that they can tap their head and rub their belly at the same time and do multiple things. They have all this money invested in Reality Labs research, presumably in solving some of the hard physics problems of a headset. Some of that stuff will converge, but gaming alone is not a Meta-sized business for them. So as long as there's still going to be some investment there, which it looks like there will be with content like with James Cameron and Lightstorm Entertainment, I feel like they're still going to start releasing Quest headsets, and that's my hope at least.

Kent Bye: And finally, what do you think the ultimate potential of XR and AI might be, and what it might be able to enable?

Norm Chan: Oh, God, I know you ask this question every single time. It doesn't fluctuate. Every single answer has evolved so much over the years. I mean, I think we're seeing mixed reality becoming more and more of a likelihood. The SLAM tracking is so miniaturized and it's almost a solved problem. I remember when SLAM world tracking on VR headsets required multiple cameras. And the fact that AI and machine learning for input, for tracking, and the ability to get those in mixed reality devices, and also the benefits those things bring to VR, that's what gets me excited. The merging of the physical and the digital world, it feels inevitable.

[00:28:29.949] Kent Bye: Anything else left unsaid you'd like to say to the broader XR community?

[00:28:32.987] Norm Chan: No, I mean, we're all on this journey together, ups and downs, and things have pivoted. I love that it's still new and emerging technologies that hopefully people are interested in. I know there are diehard VR enthusiasts who maybe are a little disheartened. But I would say there's a lot of great stuff. The rumor was that Valve was going to put out their long-rumored Deckard headset today. And while that isn't the case, as of right now, I feel like they're not out of that game yet. And the reality of what consumers want to use these headsets for is going to raise the floor and raise the tide for everyone. And there will still be space for the hardcore VR simmers and the room scale enthusiasts. And it's not exactly what we thought it would be 10 years ago, 12 years ago, but there's still cool stuff happening out there.

[00:29:17.270] Kent Bye: Awesome. Well, Norm, thanks so much for joining me. Really look forward to your hour-long video deep dive into all the latest announcements that are coming here from Meta Connect. And yeah, I just always appreciate your technical deep dives and you really understand the technology and you've got a very trained eye and a sense of where the technology is at now and where it might be going here in the future. So I always appreciate hearing your reflections on the latest announcements. So thanks again for joining me here on the podcast.

[00:29:39.261] Norm Chan: Great to see you, Kent. It's been 10 years at least. Yeah. Hopefully another 10 years more.

[00:29:45.302] Kent Bye: Thanks again for listening to this episode of the Voices of VR podcast. And if you enjoy the podcast, then please do spread the word, tell your friends, and consider becoming a member of the Patreon. This is a listener-supported podcast, and so I do rely upon donations from people like yourself in order to continue to bring this coverage. So you can become a member and donate today at patreon.com/voicesofvr. Thanks for listening.