I spoke with VoxelKei and R_Tone with RIKU_VR as the Japanese translator about Tonevok: Sonic Sky Journey as a part of my Raindance Immersive 2025 coverage. See more context in the rough transcript below.

This is a listener-supported podcast through the Voices of VR Patreon.

Music: Fatality


Rough Transcript

Note that this is an automated rough transcript using WhisperX, which translates all Japanese into English.

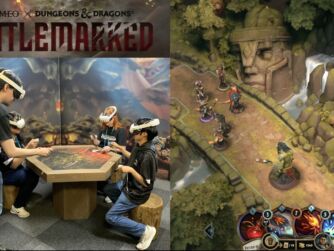

[00:00:05.458] Kent Bye: The Voices of VR Podcast. Hello, my name is Kent Bye, and welcome to the Voices of VR Podcast. It's a podcast that looks at the structures and forms of immersive storytelling and the future of spatial computing. You can support the podcast at patreon.com slash voicesofvr. So continuing my coverage of Raindance Immersive 2025, today's episode is with a real-time audio-reactive music piece called Tonevok: Sonic Sky Journey. So this is a live music piece that was out of Japan. In fact, all of the different live music pieces this year were out of Japan, per what Training Things was saying. But this piece is by a group called Tonevok, which is VoxelKei and R_Tone. And so I was working with the translator RIKU_VR, who was able to translate what VoxelKei was saying. And then R_Tone was actually typing in chat. And so she's reading the Japanese and then translating it into English as well. And so I just wanted to air it as I experienced it. And the other thing I just wanted to mention around this piece is that, you know, with Apple Blossom, she does have this shader that is able to basically use video to encode pixel information. And so it takes each individual pixel and encodes information, and then uses regular video compression, which is sometimes the most efficient way of sending vast amounts of data quickly. They're able to do a real-time translation of this data and then have access to it within different shaders. And that's kind of what VoxelKei is also doing here, but rather than the spatial data and the screen effect that Apple Blossom's shader is doing, VoxelKei is doing something similar with MIDI data. So there's a challenge around the latency between getting access to the MIDI data and then actually listening to it.
And so by combining the audio into a video, and then also having the MIDI output encoded in real time into that same video, and then taking that video and streaming it into VRChat, he's able to basically do a real-time decoding of this live performance by R_Tone, which then provides MIDI information to these different dials so that he's able to do a real-time visual representation of the music. So it's a real-time audio-reactive visualization of the MIDI data that he's able to do within the context of VRChat. So we're covering all that and more on today's episode of the Voices of VR podcast. So this interview with VoxelKei and R_Tone, with Japanese translation from RIKU_VR, happened on Wednesday, July 2nd, 2025, as part of my broader Raindance Immersive 2025 coverage. So with that, let's go ahead and dive right in.

[00:02:43.282] RIKU_VR (translator): Could the two of you briefly explain what you are doing in the VRChat world? We are a music unit called Tonevok, and we call ourselves a real-time audio-visual performance unit.

[00:03:33.676] VoxelKei: Yes. So what we're doing is that Tone-san, R_Tone, plays a hardware synthesizer in real time. That sound comes into this world, and with the help of that information I create and control visual effects in this world. Tone-san watches those visuals while playing in real time, so he adds changes to the performance. It is a performance that progresses through the combination of sound and visuals.

[00:04:29.687] RIKU_VR (translator): Okay, so R_Tone plays the synthesizer live and improvises music in real time. And the MIDI data is sent with the audio. And in response to that, I generate visual effects. And while R_Tone watches the visuals, he changes the way he performs. And when that happens, I also change the visual effects accordingly.

[00:05:02.895] Kent Bye: And maybe you could each give a bit more context as to your background and your journey into virtual reality.

[00:05:11.912] RIKU_VR (translator): Can you tell us a little bit more about how you came into the virtual reality world? Yes, I'll start with Voxel. I used to develop applications for Oculus Rift before coming to VRChat.

[00:05:41.149] VoxelKei: I used to make and release content for Oculus, like flying through the sky, but I wanted to implement multiplayer. It was content where I flew alone, so I wanted to fly with a few people, with my friends. When I was thinking about what to do, I learned about VRChat. I learned that you can create your own world and bring it to VRChat. I came to VRChat because I wanted to do that. I first came to VRChat because I wanted to create a world where I could make the Japanese archipelago three-dimensional and fly over it.

[00:06:36.820] RIKU_VR (translator): Okay, so I'm VoxelKei. I will start. Before coming to VRChat, I was developing apps for Oculus Quest, and I had released a flight simulator where you could fly through the sky, but that was only solo play, and I wanted to make a multiplayer app so that friends could fly together. But the technical bar was so high that it was too difficult for me to realize. But then I found out that it was possible in VRChat. So that's what brought me here, to make that dream come true. And the first thing I created in VRChat was Japan Land.

[00:07:24.475] VoxelKei: Yes, Tone-san.

[00:07:33.281] RIKU_VR (translator): He will write in the chat. A friend whose hobbies are music and games recommended VRChat to me, so I came to play. A friend of mine who's into music and games recommended VRChat to me, and so I came to check it out. Yes. Okay, is that it? Or is there more? So, is there a little more? Tone-san, is there more? Yes, that's it.

[00:08:05.294] Kent Bye: Okay. Okay. And then maybe you could talk a bit about the original idea for this performance that was at Raindance Immersive. Where did it begin? Yes.

[00:08:31.958] VoxelKei: I started this style of performance around 2019. I came to VRChat in 2018, and at that time I was making Japan Land and other experimental worlds. One of them was called MIDI Particle World. It was a music-related world.

[00:09:05.952] RIKU_VR (translator): Yes. Okay. So first, we started Tonevok in 2019. At the time, I was working on Japan Land, and one of the worlds I created was called MIDI Particle World, and that was the only world that was music-related. Yes.

[00:09:26.059] VoxelKei: Yes. In that world, you connect a MIDI device, come into this world in VRChat, play the keyboard or tap the electronic drums, and you get an effect. That's the kind of world it was. I made that world and had my friends come and play. That's when Tone-san happened to come and play. That was the starting point for this style.

[00:09:59.562] RIKU_VR (translator): And in that MIDI Particle World, when you connect a MIDI device and play the keyboard or electronic drums, particles would appear in response. And I was inviting my friends to come over and play. And that's when R_Tone visited me. And that encounter is what started Tonevok. Yes. And then to the Raindance performance, right?

[00:10:33.450] Kent Bye: Yeah.

[00:10:45.626] RIKU_VR (translator): Can you tell us more about that?

[00:10:48.767] VoxelKei: Yes, well, there are a few things that we made specially for Raindance this time. One of the most special is that combining the project of reproducing the terrain of the Japanese archipelago, which I had originally created in VR, with Tonevok's music performance at the same time in this world was a new challenge for Tonevok.

[00:11:24.019] RIKU_VR (translator): Yes. Okay. So for Raindance Immersive, the unique part was that we combined the Japan terrain recreation, the Japan Land world, with a live Tonevok performance. It was a new challenge for us to present both at the same time. Yes.

[00:11:45.114] Kent Bye: Hmm. And so wondering if R_Tone could talk a little bit about the process of making music live using the tools within VRChat that are within this world that VoxelKei created.

[00:12:04.392] RIKU_VR (translator): I have a question for Tone-san. Can you explain what you think about playing music in the world made by VoxelKei? I'm writing now! He's writing now, so please wait. The world Voxel-san made reacts to MIDI and sound and shows me all kinds of scenery, so I really enjoy performing in it. Voxel-san's world reacts to my MIDI and sound and creates all kinds of dynamic effects. So it's always a joy to perform in it.

[00:13:10.471] Kent Bye: And VoxelKei, maybe you could talk a little bit about how you are translating the MIDI into these shader effects or these visuals that you're doing?

[00:13:23.639] RIKU_VR (translator): Yeah. Technically, or...

[00:13:48.624] Kent Bye: Oh, yeah. Just a little bit more around the system and if it's fully automated or if there's decisions he's making in real time. Okay.

[00:14:05.453] RIKU_VR (translator): Can you tell me if it's automatic or if Voxel-san is doing it himself?

[00:14:19.668] VoxelKei: First of all, in terms of automation, it's more than half. The concept is that humans are in control of this world. I think it's interesting that humans are involved in this world. In this game, I'm the one controlling the game. However, I'm making a gimmick that allows the game to move automatically. That's it for now.

[00:14:55.266] RIKU_VR (translator): Okay, so what I find interesting about our performance is that humans do the controlling, but I think that is not enough. So I use the automation system also to create the performance.

[00:15:15.365] VoxelKei: Mm-hmm. I've been experimenting with MIDI systems for about five years now. Yes, the first thing we tried was to connect a MIDI device to the local machine running VRChat, pick up the MIDI signal directly, and make the world respond. That was the first system we made.

[00:16:01.115] RIKU_VR (translator): Can I ask you one more time about VRChat?

[00:16:05.238] VoxelKei: The first thing I did was use the function to pick up the MIDI signal that exists as a function of VRChat.

[00:16:14.365] RIKU_VR (translator): It's a system to pick up.

[00:16:18.889] VoxelKei: Yes. However, there is a problem with that method. The input path of the MIDI and the input path of the sound are different, so the problem is that there is a timing offset between the effects and the sound. Yes. At the next stage, we tried various other methods to improve it. Yes.

[00:16:45.481] RIKU_VR (translator): And then the first thing I tried was using a MIDI detection system that was available in VRChat, but there was a problem. The MIDI signals and the audio were coming through different paths, so it caused a delay between the visuals and the sound. So I started experimenting with a different approach. Yes.

[00:17:13.148] VoxelKei: Yes. My goal is to make Tone-san's sound and the effects match perfectly in time. So I came up with a different method. In my current system, I created an application that visualizes MIDI information, and it is used on Tone-san's PC. Tone-san's sound and the visualized MIDI information come into this world as a video stream. Tone-san's sound and the MIDI information?

[00:17:54.882] RIKU_VR (translator): Yes, the sound of Tone and the MIDI information are in a visualized state.

[00:18:09.028] VoxelKei: The output of Tone-san's PC comes into this world as a video stream. Yes. So, in a nutshell, it means that the MIDI is being visualized and sent to this world. Yes, it's being visualized and sent to the world. That's right.

[00:18:25.838] RIKU_VR (translator): Yes, it's being sent. Yes, it's being sent through the stream. Okay, so my first goal was to make R_Tone's sound and the visual effects perfectly synchronized. That's our goal. So I built a system that turns MIDI data into visual images. And so currently, I let R_Tone read the MIDI data. I sent the MIDI data to R_Tone, and then he sends both the audio and MIDI signals to Voxel's world, and they are turned into a video stream, and then it is sent to the world together, the MIDI and the sound. And that's our current system, how it works. And it is all custom made by VoxelKei.
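The idea of carrying MIDI inside the same video stream as the audio can be illustrated with a small sketch. The interview does not describe the actual pixel layout, so everything here is an assumption for illustration: the names `BLOCK`, `NUM_NOTES`, and `encode_midi_strip` are hypothetical, and the choice of one grayscale block per MIDI note is just one plausible way to make the data survive video compression.

```python
# Hypothetical layout (not VoxelKei's actual format): the velocity of each of
# the 128 MIDI notes is drawn as one grayscale block along a strip of the frame.
BLOCK = 8          # block edge in pixels; larger blocks tolerate compression better
NUM_NOTES = 128    # MIDI note numbers 0..127


def encode_midi_strip(velocities):
    """Return a BLOCK-row grayscale strip (list of rows) encoding note velocities."""
    if len(velocities) != NUM_NOTES:
        raise ValueError("expected 128 velocities")
    row = []
    for v in velocities:
        if not 0 <= v <= 127:
            raise ValueError("MIDI velocity must be in 0..127")
        gray = v * 2                  # map 0..127 onto 0..254
        row.extend([gray] * BLOCK)    # repeat the value across the block width
    # Repeat the row BLOCK times so each note occupies a BLOCK x BLOCK square.
    return [list(row) for _ in range(BLOCK)]


velocities = [0] * NUM_NOTES
velocities[60] = 100                  # middle C held at velocity 100
strip = encode_midi_strip(velocities)
```

Because the strip is composited into the same frames that carry the audio, the two cannot drift apart in transit, which is the synchronization property described above.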

[00:19:28.035] Kent Bye: Just to clarify on the audio, because I know Apple Blossom's shader is able to translate data directly from the video. And is the information about the visuals in the video, or is it being generated by a shader within VRChat?

[00:19:48.900] RIKU_VR (translator): That was very difficult. Oh, hold on.

[00:19:52.402] Kent Bye: Are the visuals coming from the video or are the visuals coming from within VRChat, from a shader?

[00:19:58.425] RIKU_VR (translator): Do you understand? I'm sorry.

[00:20:02.548] VoxelKei: It's probably different from the visualized video that Apple Blossom is doing. I think people are asking if shaders are being generated in this world. Oh, something like that.

[00:20:23.047] RIKU_VR (translator): I think people are asking if shaders are being generated in this world. That's right. I've talked to Apple Blossom, and they've shown me the system.

[00:20:41.161] VoxelKei: The way I do things right now is that I don't generate effects externally. So the MIDI data that I just mentioned, which I send to this world as an image, is just a piece of pixel information. Based on that pixel information, all the effects are generated in this world, and then I control them with this hand controller.

[00:21:17.711] RIKU_VR (translator): Yes. Okay. So I have once talked with Apple Blossom, and so I saw the system, and I don't generate the effect externally. Instead, I send the MIDI data as pixel information inside the video. So based on that data, I generate the effects within the world using my own hands.
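Reading MIDI back out of pixel information inside the world could look roughly like the following. This is only a Python stand-in: in VRChat the decoding would happen in a shader or world script, and the block layout, the names `decode_midi_strip`, `BLOCK`, and `NUM_NOTES`, and the averaging step are all assumptions rather than anything stated in the interview. Averaging over a block is a common way to absorb the small errors that lossy video compression introduces.

```python
# Hypothetical decoder matching an assumed layout of one BLOCK x BLOCK
# grayscale square per MIDI note (gray = velocity * 2).
BLOCK = 8
NUM_NOTES = 128


def decode_midi_strip(strip):
    """Recover approximate note velocities by averaging each block of pixels."""
    velocities = []
    for n in range(NUM_NOTES):
        total = 0
        for row in strip[:BLOCK]:
            total += sum(row[n * BLOCK:(n + 1) * BLOCK])
        mean_gray = total / (BLOCK * BLOCK)
        # Undo the gray = velocity * 2 mapping and clamp to the MIDI range.
        velocities.append(min(127, max(0, round(mean_gray / 2))))
    return velocities


# Simulated received frame: note 60 near gray 200, with +/-3 of alternating
# noise standing in for compression artifacts.
row = [0] * (NUM_NOTES * BLOCK)
for i in range(60 * BLOCK, 61 * BLOCK):
    row[i] = 200 + (3 if i % 2 else -3)
noisy_strip = [list(row) for _ in range(BLOCK)]
decoded = decode_midi_strip(noisy_strip)
```

The decoded velocities would then drive effect parameters inside the world, which matches the description that nothing visual is generated externally.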

[00:21:50.872] Kent Bye: OK. OK. So the data from MIDI is coming from the video. Yes. Yes.

[00:22:07.361] VoxelKei: Also, the effects you see in this world are MIDI information plus audio information. We also analyze and react to audio at the same time. So the concept is to treat the effects that react to audio and the effects that react to MIDI at the same level.

[00:22:37.642] RIKU_VR (translator): What you are seeing in our performance in this world is a result of analyzing the audio data using MIDI. And I treat audio reactive effects and MIDI reactive effects at the same level. And I give them equal importance in the performance. That's what I heard. Okay.
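Treating audio-reactive and MIDI-reactive effects "at the same level" can be pictured as blending two normalized signals into one effect parameter. The sketch below is an assumption about what that might mean in practice; the functions `rms` and `effect_intensity` and the `midi_weight` parameter are hypothetical and not taken from the interview.

```python
import math


def rms(samples):
    """Root-mean-square level of an audio block with samples in -1..1."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def effect_intensity(samples, velocities, midi_weight=0.5):
    """Blend audio level and MIDI velocity into one 0..1 effect parameter.

    midi_weight=0.5 gives both sources equal importance (an assumed default).
    """
    audio_level = min(1.0, rms(samples))
    midi_level = max(velocities, default=0) / 127
    return (1 - midi_weight) * audio_level + midi_weight * midi_level


# A square-ish audio block at half amplitude plus one note at full velocity.
intensity = effect_intensity([0.5, -0.5, 0.5, -0.5], [127])
```

With equal weights, a quiet passage played with hard key strikes and a loud passage with no new notes can both move the visuals, which is one way to read the "equal importance" remark.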

[00:23:25.986] Kent Bye: Thank you. Yeah. And for R_Tone, wondering if you would be willing to talk a bit more around your process of making music in this world.

[00:23:38.757] RIKU_VR (translator): "In this world" meaning VRChat?

[00:23:41.740] Kent Bye: Yeah, the world where there's sometimes when you make music, you use like a keyboard or an instrument. Here in this specific world that VoxelKei made, the instrument is the VR interface where there's dials being turned. So you're... In VR, making the music. Or maybe I'm confused. If the music is being made inside the VRChat world, or if the music is made completely external, piped in. Just to clarify.

[00:24:13.142] RIKU_VR (translator): Yes. Regarding R_Tone's music, are you playing that music in VRChat, or are you playing it outside? When performing, which one is it? Okay, so I play externally, not inside VRChat, just outside of VRChat. In the physical world, I'm playing a real synthesizer.

[00:24:57.376] Kent Bye: Okay, and R_Tone, can you talk about your process of making music? How do you describe what kind of music you make?

[00:25:06.576] RIKU_VR (translator): Yes, I would like to ask R_Tone. What kind of music do you make? What kind of process do you have to make music? The performance process as Tonevok starts with a rhythm machine such as the TR-8S, and while watching the visuals of the world created by Voxel-san, I play using pads and keyboards, and record and play back melodies that come to mind on a hardware sequencer. For Tonevok performances, my process usually starts with a rhythm machine, using a machine called the TR-8S. And then as I watch the visuals Voxel-san creates in the world, I play in real time using pads and keyboards, while also recording and looping the melodies that come to my mind on a hardware sequencer.

[00:26:36.909] Kent Bye: Hi. And just a clarifying question, does R_Tone play on desktop in VRChat or is he immersed in a virtual reality headset while he's playing?

[00:26:51.212] RIKU_VR (translator): Ah, yes, okay. Are you playing on your desktop in VRChat, or are you playing on your headset in VRChat? Yes! When I play, I use a lot of equipment, so I play on my desktop without a controller. Okay, so I play on desktop mode because when I perform, I use a lot of hardware, so I don't have space for VR controllers. Yes, so that's why I play on desktop.

[00:27:32.953] Kent Bye: Okay. VoxelKei, part of this world is that there's Mount Fuji in the background and that there's like a movement of the entire world as we watch the experience. And so just wondering if you can explain around the importance of Mount Fuji and why you wanted to have in this performance the whole world traveling towards Mount Fuji.

[00:27:59.699] RIKU_VR (translator): In this Raindance performance, Mt. Fuji appears, but what is the purpose of the performance that leads to Mt. Fuji, and what is the meaning of Mt. Fuji?

[00:28:27.419] VoxelKei: Yes. Well, at Raindance, the performance time was set at 30 minutes or 45 minutes. Unlike Tonevok's usual performances, the start and the end are somewhat clear. I had a theme that I had to create a performance that was easy to understand. In that sense, I thought it would be nice to have an easy-to-understand starting point and a goal point. I'm making terrain data for the entire Japanese archipelago, but as a work of mine, as an easy-to-understand landmark that symbolizes Japan's terrain,

[00:29:35.316] RIKU_VR (translator): Okay, so for this Raindance Immersive performance, we had only a 30-minute performance, where we usually spend much longer for our usual live shows. So since the time was limited, I wanted to create a clear theme in this performance. So I decided to have a starting point and an ending point, which was Mount Fuji. And as a recognizable landmark of Japan's landscape, we chose Mount Fuji as the goal.

[00:30:12.986] VoxelKei: In Tonevok's original concept and performances, there is no such thing as one song after another, so as long as Tone-san's performance continues, it can go on for a long time, and since the sequence of the performance has not been decided in advance, it can be as long as we like. When you're making a 30-minute show, you need a certain amount of story, but that's not the case with Tonevok. This time, I wanted to travel over the night view of the Japanese archipelago in VR, so I used a stage like this.

[00:31:14.556] RIKU_VR (translator): Yes, the meaning of Mt. Fuji. The reason why Mt. Fuji was chosen was the question.

[00:31:20.738] VoxelKei: That's right. It's the most easy-to-understand terrain. It's the most easy-to-understand terrain for people overseas. That's the biggest reason. Yes. Of course, there is also the meaning of being a sacred mountain. As a landmark, I think it's the most distinctive in Japan, so I used it.

[00:31:55.598] RIKU_VR (translator): Okay. So the concept of Tonevok doesn't follow the idea of one song or a sequence of songs. Usually our performance continues as long as R_Tone keeps playing. So the whole live show is one piece of music, and everything happens live in the moment. But for this Raindance Immersive, we needed it to work as a structured show, a 30-minute show. So flying over a nice nightscape, I thought it would be a deeply moving experience for everybody, especially for people outside Japan. So we chose Mount Fuji because it is a recognizable landmark and very famous, well-known, even for people outside of Japan. Mount Fuji does have a sacred meaning to it, but it was more because of its distinctive shape as a landmark.

[00:33:09.587] Kent Bye: Okay. And Kayroon told me that I should ask you around Gaussian splats. And it doesn't look like you were using Gaussian splats in this experience, but just curious what kind of other experiments you've been doing with Gaussian splats within VR or VRChat?

[00:33:28.020] RIKU_VR (translator): Kaelun said that it would be good to hear about this, but gaussian splats are not used in this performance, but can you tell us about the activities about it now?

[00:33:49.981] VoxelKei: Yes, we are currently working on bringing Gaussian Splatting into VRChat. This is not only Gaussian Splatting, but also photogrammetry. I think that's what you're talking about. Yes.

[00:34:27.566] RIKU_VR (translator): Okay, so I've been working on bringing Gaussian Splatting into VRChat currently, and as well as photogrammetry, I also coined my own term spatialography altogether to describe the range of activities I'm exploring and experimenting.

[00:34:53.527] Kent Bye: Cool. And finally, I'd love to hear what each of you think is the ultimate potential of virtual reality. In other words, where do you see it going into the future and what it might be able to enable?

[00:35:14.335] RIKU_VR (translator): I'd like to ask you about the possibility. Where will this virtual reality lead us in the future? Can you tell us what we can or can't do in VRChat?

[00:35:32.632] VoxelKei: Yes, I'm very happy and honored to be able to talk to Kent-san about this, but it's going to take a long time to talk about a lot of things, so I don't know where to begin. That's it for now.

[00:35:51.658] RIKU_VR (translator): Okay, so I am honored to have this conversation with you, Kent. There are so many things I'd love to talk about, but since it will become very long, I don't know where to start. I'd say take your time. It's okay if you take your time.

[00:36:14.349] VoxelKei: I've been making VR content since before I came to VRChat, so I'm very interested in VR spaces, interactive content, and content using real-time CG. Ever since I came to VR, I've been thinking that it's a very good and also dangerous possibility to be able to instill experiences and memories into people unconsciously. It's an image like that of the movie Inception. Yes, that's it. Yes, it's Inception.

[00:37:11.577] RIKU_VR (translator): So before coming to VRChat, I was creating content using real-time CG. So I've always been interested in this kind of field. And what I find fascinating after joining VRChat is that you can implant experiences and memories in people. And it's both very powerful and potentially, but at the same time, dangerous, I think. In a way, it reminds me of the movie Inception. Yes.

[00:37:47.566] VoxelKei: Also, compared with when I was making VR content for one person, after I came to VRChat I felt like my world had opened up. Of course, there are other people, but other people can make their own things as they like, and they can do it with their own imagination. At first, I didn't even imagine that such imagination would be born and that it would spread more and more. When you make content, the user experiences what the designer intended within that one piece of content. I think that's what most content is like. But when you come to this world, it's not closed. Even if you're a world creator, you can't control everything. It's a gimmick, isn't it? A gimmick? Yes. What's a gimmick?

[00:39:22.195] RIKU_VR (translator): Are you talking about the gimmick that was incorporated into the avatar?

[00:39:27.438] VoxelKei: Yes, it's a gimmick, but it means that it's not just closed to the world. It means that there is a possibility that it will change more and more depending on who comes there. Yes.

[00:39:47.382] RIKU_VR (translator): So compared to when I was making single-user content, I feel like, after coming to VRChat, my world has expanded because now I am surrounded by other creators. And also they are incredibly imaginative. And their presence creates a kind of synergy that pushes everything to a higher level. And what's interesting about creating a world is that even as the world creator, I can't control everything. For example, a user will come and enter the world and they can activate gimmicks built into their own avatars and enjoy the experience in their own way. So I think that's one of the unique and powerful aspects of VRChat. Hmm. Yes. And the future of VRChat?

[00:40:54.575] Kent Bye: Yeah, the future of VRChat or future of VR, yeah. The ultimate potential and where it might be headed in the future.

[00:40:59.879] RIKU_VR (translator): Yes. Could you share your thoughts on the potential that VRChat holds and the direction VRChat is heading in the future?

[00:41:15.772] VoxelKei: It's hard to say. I don't know about VRChat as a platform, but I believe that the world will continue to expand with the content created by users like this. Well, in terms of the future of VR, I think the evolution of hardware is necessary in some way or another. The headset is very heavy and troublesome, so I think a hardware breakthrough is necessary in some way or another. OK.

[00:42:28.231] RIKU_VR (translator): I think VRChat will be evolving rapidly through user-generated content. And I believe it will continue to expand. But its future also depends on the advancement of hardware. Right now, things like headsets being too heavy, that is still an issue. So if the headsets become lighter, it will likely evolve more, and we will be able to see an even greater transformation.

[00:43:05.970] Kent Bye: And then I don't know if R_Tone has any thoughts on VR or the future of VR.

[00:43:17.754] RIKU_VR (translator): R_Tone, can I ask you the same question? Can you tell us what you think about the future of VRChat? I think it would be nice if there was a concrete plan to make the technology that could influence the real life of home appliances in English a little more abstract and difficult. I think if we see further advancements like smaller headsets and improved hand tracking, things will evolve even more. Ideally, if VR devices could start to influence real-world appliances or daily life, I think that would make everything even more exciting. For example, turning off lights or controlling the air conditioner or using the microwave oven. So, okay.

[00:44:45.705] VoxelKei: It's not just VR that's closed, but something that merges with the real world will make it more convenient to use on a daily basis.

[00:45:03.928] RIKU_VR (translator): Okay, okay. That's right. So VR, okay.

[00:45:12.356] VoxelKei: Sorry. It's a connection, isn't it? If the connection between VR and the real world is more fulfilled, it will become more independent in the VR world, rather than a closed world, and the possibilities will expand even more.

[00:45:32.965] RIKU_VR (translator): Okay, so what he meant was if VRChat and our real life world become more integrated, everything will become more enjoyable and full of new possibilities.

[00:45:53.703] Kent Bye: Nice. And is there any final thoughts or message that you have for the broader immersive community? Do you have any thoughts or a message for everyone in the community?

[00:46:13.593] VoxelKei: Yes, I'll go first. I had the opportunity to participate in Raindance Immersive, and I had a very good experience and had a lot of fun. I've been doing my activities for quite a long time, but I've only been doing them at a slow pace. I've always wanted more people from overseas to see them. So I think I've had a great opportunity. I was able to spend a very happy month. I was able to interact with fans from overseas, and I was very happy.

[00:47:10.311] RIKU_VR (translator): Okay, so being part of Raindance Immersive as a nominee was a great experience for me. Tonevok has been active for quite a while, but we weren't able to perform very often. So we've always hoped more people from overseas, people outside Japan, would see our performance. And I'm really happy about how this past month went. I had a great time, and we were able to connect with fans from around the world, which was such a joy for me. And I definitely want to keep performing more for international audiences in the future.

[00:48:10.054] Kent Bye: Any final thoughts from R_Tone?

[00:48:13.176] RIKU_VR (translator): How about you, R_Tone? Yes, yes. So, thank you, Raindance Immersive. We had such a great time. And those who couldn't make it this time to see our show, please look forward to the next Tonevok performance.

[00:48:48.726] Kent Bye: Awesome. And just some final thoughts for me is that I really enjoyed this performance and I really thought it was beautiful and awe-inspiring in the moment watching it. And I really enjoyed the journey to the mountain, but also appreciate it even more after hearing the technical sides of how it's done in the back end of the live performance with MIDI coming over the video. It's a real technical achievement and also just a beautiful overall performance. And so, yeah, if you just want to share that, and then thank you again for joining me here on the podcast.

[00:49:24.341] RIKU_VR (translator): Thank you!

[00:49:53.590] VoxelKei: Thank you very much. It takes a lot of time to say technical things, but technical things are important, but I think it's important to look at it and see that it's beautiful and that you can only experience it in this dynamic world. I would be happy if I could have such an experience. I will continue to work hard with that as my goal.

[00:50:27.668] RIKU_VR (translator): So, talking about the technical side can take time and it is a bit difficult to understand. But for me, if people can take one look at our performance and feel, wow, this is beautiful and dynamic, or this is something I can only experience in this world in VRChat, that alone brings us so much joy. Thank you very much.

[00:50:58.539] Kent Bye: Awesome. Thank you. Thank you.

[00:51:02.082] RIKU_VR (translator): Thank you.

[00:51:03.983] Kent Bye: Thanks again for listening to this episode of the Voices of VR podcast. And if you enjoy the podcast, then please do spread the word, tell your friends, and consider becoming a member of the Patreon. This is a listener-supported podcast, and so I do rely upon donations from people like yourself in order to continue to bring this coverage. So you can become a member and donate today at patreon.com slash voicesofvr. Thanks for listening.