Here’s my interview with Jason Marsh, Founder and CEO of Flow Immersive, that was conducted on Thursday, June 12, 2025 at Augmented World Expo in Long Beach, CA. This is part 3 of 3 of my conversations with Marsh; you can see part 1 from 2018 here and part 2 from 2019 here. See more context in the rough transcript below.

This is a listener-supported podcast through the Voices of VR Patreon.

Music: Fatality


Rough Transcript

[00:00:05.458] Kent Bye: The Voices of VR Podcast. Hello, my name is Kent Bye, and welcome to the Voices of VR Podcast. It's a podcast that looks at the structures and forms of immersive storytelling and the future of spatial computing. You can support the podcast at patreon.com/voicesofvr. So continuing my series of looking at AWE past and present, today's interview is part three of three of my series of conversations with Jason Marsh, the founder and CEO of Flow Immersive. So I had a chance to run into Jason again at Augmented World Expo, as I have a number of different times over the years. And he showed me the latest demo on XREAL glasses and pulled it out of his pocket. I was able to see not only these data stories of, I think it was like looking at energy use across all these different contexts, but also like looking at what Caitlin Krause was doing with quantified self, taking all this data that's coming from your body and being able to analyze it and understand more of what's happening with these different patterns of your data over time. And so that's a completely separate application that I had a chance to do a deep dive with Caitlin into other projects that she's doing in the broader sense of mindfulness and digital wellbeing. And I'll be digging into a couple of conversations with Caitlin near the end of the series. But in this conversation with Jason, it was very quick just because it was the beginning of the day. He had like a full schedule of back-to-back-to-back demos that he had booked for himself. And so I was able to get a demo and squeeze in an interview just to get a sense of his continued journey within this XR space and using all these different latest technology platforms and continuing to use a WebXR approach of doing like JavaScript-based development, but being able to do that with a context of a tethered phone that's connected to these birdbath smart glasses from XREAL.
So we're covering all that and more on today's episode of the Voices of VR podcast. So this interview with Jason happened on Thursday, June 12th, 2025 at Augmented World Expo in Long Beach, California. So with that, let's go ahead and dive right in.

[00:01:59.462] Jason Marsh: Hi, I'm Jason Marsh. I'm the founder and CEO of Flow Immersive. We do data visualization in augmented reality. Specifically, how do we have the best possible conversation around data and data collaboration that you can imagine. Just being able to get the ideas into your audience's head in a much more consistent and powerful way than PowerPoint, which we all forget the minute we leave the room, taking advantage of the brain's spatial reasoning and understanding to really have that great conversation to help drive their business decisions that help solve problems in the world.

Kent Bye: Great. Maybe you could give a bit more context as to your background and your journey into the space.

Jason Marsh: Oh, so Flow Immersive, I founded nine years ago, worked on the space a couple of years before that. So it's been kind of a long journey at this point, exciting along the way. And before that, I actually got my career started at Apple Computer in 1991, working on speech recognition. So I have been programming for quite a while and always, so far, always been in the enterprise space.

[00:03:04.505] Kent Bye: And so we've had a number of different interviews that we've had over the years. And as a part of this conversation we have at AWE, my intention is to go back and to air all these as a sequence. Because we've got from VR to AR, different devices, WebXR. Now we're on the XREAL. It has some sort of 6DOF type of use. It feels like a really good form factor from what you're doing. And so just curious to hear some of your thoughts on the latest iteration of using the XREAL glasses in order to show some of these different data visualizations.

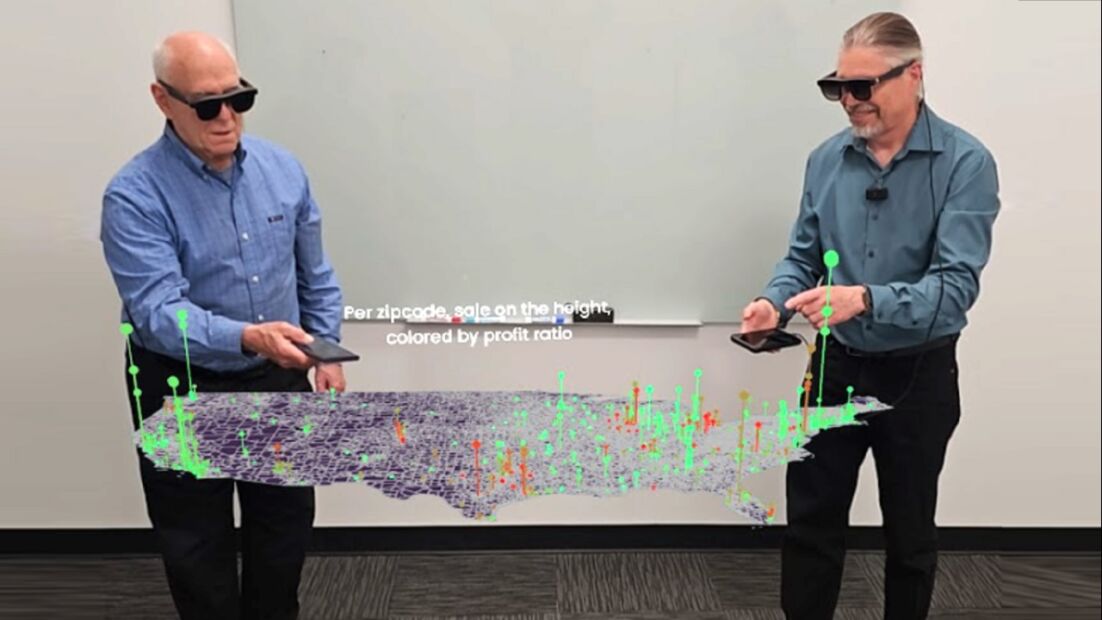

[00:03:32.250] Jason Marsh: That XREAL is really nice, a little bit ahead of the curve, but also indicative of where I think the industry is going. For our use case in data visualization, we're looking at conference room tables around the world. And in that environment, the larger headsets just don't feel human. They just don't feel like a natural way to interact with each other and be in that space together. Whereas if you've got more of this glasses form factor, glasses are part of the human technological experience, actually in a very intimate way. We have been putting something hard on our faces for hundreds of years. We are very, very comfortable with that environment, with that space, with that way of using technology in the world, and glasses have that resonance with our human way of being in a very different way than a larger headset.

[00:04:26.812] Kent Bye: In years past, there's been everything from the 6-DOF controllers to maybe some hand tracking in there in terms of different systems that could support that. With the XREAL glasses, you've got basically a tether to a Samsung phone where you're using the phone as a 3-DOF controller to point, but you're able to also render all the content from the phone to the glasses. And so it feels like there's a broader trend here of, like, offloading the compute from these glasses onto something like a puck or a phone. And so it feels like in some ways your demo is showing the future of where this is all going, but I'd love to hear some of your reflection on this latest form factor of dealing with some of the limitations of 3-DOF tracking instead of 6-DOF tracking, but at least it's very self-contained, in your pocket. You can give someone a demo pretty much anywhere, not a lot of setup, and you're just bam, you're right into these different data visualizations. So I'd love to hear some of your latest reflections on this latest form factor of using the phone that's tethered to the XREAL glasses to give you this more spatial experience of the data.

[00:05:23.579] Jason Marsh: This is a generation of XR glasses that are tethered, whether it's a phone or puck, as you say, and there's so many technological advantages to that, to lighten the load, battery, processing, et cetera, especially since a Samsung phone or iPhone has so much processing power on it. To put that onto a device on your head is still a really difficult technical challenge. This generation, what pretty much we're hearing from the market, it seems like it's going to be the norm for the next six to 18 months. Then we'll get more to the waveguide things, you know, Meta Orion and the glasses that are meant to be untethered and basically worn all day. So this is a transition device. However, in relationship to a Quest, in relation to a headset, where it's still a dedicated device that you put on in order to have that experience, as opposed to wear all day, man, it's so much more convenient and, like I say, comfortable. Even when I ran into you in the hallway here at AWE, I could walk up to you, I'm wearing a suit jacket, and I could say, would you like a demo? And my hands are completely free, and I can reach into my pocket and pull out a pair of glasses. That alone, it just feels so like, oh, natural. And you're right, you don't have to worry about dealing with a boundary setup or all of those things. You could get into the experience just very quickly.

[00:06:43.854] Kent Bye: Yeah, I feel like there's still a lot of room to improve; the vertical field of view, I think, was the biggest limiting factor. Compared with previous iterations, even though the experience of the data was more immersive, let's say, this was good enough to be able to have it a little bit further in the distance to have all the data that you need to see. But you are continuing in this pattern of looking at different issues and trying to visualize different data points. And so you have these graphs and these immersive graphs and also the ability to click on these different buttons to compare and contrast different types of data relative to each other. So maybe you could just elaborate a little bit on this energy use case that you're doing. What was the context under which this came about? And how is this being used in a professional enterprise context?

[00:07:23.100] Jason Marsh: So one of the large consultancies had us put together an analysis of EV adoption for just looking at all the different aspects of it. Where does the power come from? What are the generation lines? Where are all the charging stations? How do you deal with range anxiety? What are the costs of ownership in terms of cost of electricity? How do all of those different elements interact? And being able to put all of those onto maps, with an incredible amount of interactivity to filter different size transmission lines, different types of power plants, filter in and zoom in to certain geographic areas, and then layer it actually all at once on the same visualization where you can turn on and off each layer, just enables you to see relationships that are actually really vital. Where you generate the power and where it gets used, there's so many pieces of that puzzle. Even when the power is being used, when people are charging their cars, is actually another important piece. If we're moving more and more to solar and wind, you would want to charge your cars during the day so you don't need battery backup or to worry about the evening, but then everybody else is using power during the day at the same time. So there's a lot of interesting relationships and being able to just see it, interact with it, have it float over the table between you and your audience, that really is the future of what we're working on. We'll drop into AI in a moment, but I want to really also emphasize this multi-user nature of what we're doing. Collaboration and communication is the missing piece in our data landscape now. Dashboards, everybody gets excited about them, but they get created and not used. And they certainly aren't getting used by the upper management the way you need them to make really detailed decisions. So if you'd like, I can describe some of the reasons why 3D is really a better way to do data visualization in augmented reality versus 2D.

[00:09:16.755] Kent Bye: Yeah, one quick follow-on. When you say the collaborative social, does that mean that some of these experiences are networked, so you could have multiple people looking at the same data at the same time in the same shared virtual space?

[00:09:26.283] Jason Marsh: Absolutely, so with our system, they can be on an XREAL, they can be on a Quest, on an HTC, on different headsets, or on phones, whether in AR or not, or on a laptop, so it's a full, it's native in web and native in C# in Unity, and everybody sees everyone else, everyone sees everyone else's laser pointers. When anyone interacts, they click the filter. We have legend systems where you click and it isolates based on what you've selected. All the users see that same interaction at the same time, and they all can also interact. It just feels like you're working with a concrete expression of symbolic information together in the same mental space, in the same physical space at the same time. It feels like the movies. It feels like we've seen it all in the movies, right? There's a lot of technical pieces that have to be in place for all of that to work, and all of them have to be there. I just can't imagine having a great data visualization system without collaboration.

[00:10:27.736] Kent Bye: Okay, now why 3D? Why is 3D such a compelling use case for looking at data analysis?

[00:10:35.279] Jason Marsh: One of the core ideas is that on a flat screen, it's very difficult to overcome visual crowding when you get into the details. But the details matter. The details are where the risk is. So for example, your typical thing, you're going to take a look at the sales results in a PowerPoint with a bar chart. Well, you just over-summarized all the risk out of that visualization. You drill into the daily view, or drill into the monthly view, or the weekly view, and then the daily view, and then all the way down to the transactions. You're not going to do that on a flat screen because it's mud. The visual crowding just means you can't see anything. But with your stereoscopic vision, and with being able to lean in, as well as real-time filtering, now you can see those outliers. You can drill in and realize, oh, the last week saved our quarter. Oh, there was three transactions. Now you see those three transactions rising off of the map or rising off of the scatterplot. Realize that there was three transactions that saved your quarter. You better, as a CEO, you probably want to call those guys and make sure they're happy and also understand that that's a pretty risky setup for that quarter. Maybe that's the way you run your business, but chances are you'd prefer to have a more steady flow. Being able to understand that risk, being able to see the relationships around risk is a key benefit of 3D.
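
To make the drill-down Marsh describes a bit more concrete, here is a minimal Python sketch of the underlying arithmetic: roll transactions up by week, then check how much of the quarter's total comes from the few largest transactions. This is not Flow Immersive's code. The function names `weekly_totals` and `top_share` and the sample figures are made up purely for illustration.

```python
from collections import defaultdict
from datetime import date

# Made-up transactions for one quarter: (date, amount). Illustrative only.
transactions = [
    (date(2025, 1, 6), 10.0),
    (date(2025, 1, 20), 10.0),
    (date(2025, 2, 3), 10.0),
    (date(2025, 2, 17), 10.0),
    (date(2025, 3, 3), 10.0),
    (date(2025, 3, 24), 100.0),
    (date(2025, 3, 25), 150.0),
    (date(2025, 3, 26), 200.0),
]


def weekly_totals(txns):
    """Sum amounts per (ISO year, ISO week): the 'weekly view' of the data."""
    totals = defaultdict(float)
    for day, amount in txns:
        iso_year, iso_week, _ = day.isocalendar()
        totals[(iso_year, iso_week)] += amount
    return dict(totals)


def top_share(txns, n=3):
    """Fraction of the total that comes from the n largest transactions."""
    amounts = sorted((amount for _, amount in txns), reverse=True)
    total = sum(amounts)
    return sum(amounts[:n]) / total if total else 0.0


by_week = weekly_totals(transactions)
best_week = max(by_week, key=by_week.get)
print("Strongest week:", best_week, by_week[best_week])
print("Share of quarter from top 3 transactions:", top_share(transactions))
```

A quarterly bar chart of this data would show a single total. The weekly rollup and the top-3 share are what expose the concentration risk Marsh is pointing at.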

[00:11:59.440] Kent Bye: So I had a great conversation yesterday with Caitlin Krause talking about digital well-being. And it sounds like you've been collaborating with her and Mindwise to do all sorts of interesting data visualizations of this quantified self type of approach of gathering all this data of what's happening on your body, being able to translate that into different spectrums of emotions, just trying to get a sense of the story of your body and your health and your digital well-being. And so I'd love to hear a little bit more context of this collaboration with Caitlin, looking at this Mindwise and quantified self data visualization of data from your body.

[00:12:31.641] Jason Marsh: It's actually a really fascinating more consumer use case for us. We've been in an enterprise space for quite a while. And also a great use case for these glasses. So this glasses form factor, there's a lot of organizations releasing this form factor in the next months to year here. And for them to be able to have a daily use case is pretty interesting. Now, essentially what we're doing is taking the data from your smartwatches, from your Bluetooth scales, we'll even look at the Oura Ring, all these quantifiable metrics about you. But instead of just looking at that data and saying, oh, it's going to be cooler because it's in 3D, yeah, it is cooler, it is interesting, add an AI. So now you can talk to it to interact with it. Again, other people have done that. And then Caitlin's magic comes into the picture, where being able to bring that together with your mindfulness practice or think about it and yourself in a really different way, where it's grounded in science, yet also expresses that joy and wonder that we have in being alive. And so she's helping us understand with her digital wellness books and talks in the world, and together it feels like a unique combination that could really have some legs and be a really nice contribution to the XR community, and maybe to the larger community, to see the value of why these glasses give you a different experience than you could have just with your phones.

[00:14:05.600] Kent Bye: So this morning, the context of our collision was that I was just posting to LinkedIn this feeling of being at AWE and seeing how everybody's kind of leaning upon AI as this savior that's going to save us all in terms of XR and its eschatology is going to either kill us all or it's going to save us all. It feels like this peak of a hype cycle that feels like a bit of a collective delusion that I'm going to be arguing against some of this AI hype. So then you come up and you're like, I want to show you a demo about how I'm using AI for data visualization. I'm like, ah, OK. So yeah, I'd just love to hear some of your thoughts on that.

[00:14:36.192] Jason Marsh: Right now, the way we're using AI is just a fabulous tool. What happens is you can ask the AI to process the data any way you can think of. Basically, show me this time frame, filter to this, do this processing, do this technical analysis, do a MACD analysis of the stock indicators, do a random forest regression in a pharmaceutical use case. It writes the Python code, it processes it, it throws it back into 3D space and helps you understand it in a way that just you really couldn't do without a tool like that. So it's not a savior, it's not a threat, it's just a really fabulous tool in our situation and I think that's where it will find its greatest value in the short term for sure.
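
As an illustration of the kind of analysis Marsh mentions, here is a minimal Python sketch of a MACD calculation. It is not the code Flow's AI generates. The helper names `ema` and `macd` are hypothetical, the 12/26/9 spans are the conventional defaults rather than anything stated in the interview, and the moving average is seeded with the first price, which is one common choice among several.

```python
def ema(values, span):
    """Exponential moving average, seeded with the first value."""
    alpha = 2.0 / (span + 1)
    out = [values[0]]
    for v in values[1:]:
        out.append(alpha * v + (1 - alpha) * out[-1])
    return out


def macd(closes, fast=12, slow=26, signal=9):
    """Return (macd_line, signal_line, histogram) for a list of closing prices.

    macd_line   = EMA(fast) - EMA(slow)
    signal_line = EMA(signal) of the MACD line
    histogram   = macd_line - signal_line
    """
    fast_ema = ema(closes, fast)
    slow_ema = ema(closes, slow)
    macd_line = [f - s for f, s in zip(fast_ema, slow_ema)]
    signal_line = ema(macd_line, signal)
    histogram = [m - s for m, s in zip(macd_line, signal_line)]
    return macd_line, signal_line, histogram


# Made-up, steadily rising prices, only to exercise the function.
closes = [100.0 + i for i in range(40)]
macd_line, signal_line, histogram = macd(closes)
print(macd_line[-1] > 0)  # the fast average sits above the slow one in an uptrend
```

The three returned series are the sort of derived columns that could then be handed back to a visualization layer alongside the raw prices.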

[00:15:20.475] Kent Bye: Yeah, and I think when the context is bounded with the data, it's leveraged and does well. And when it's unbounded, it goes on to hallucinations. But I think if you are able to ground it and ensure that it's not hallucinating, then interfacing with large language models, there's always that risk of that. So I think that's the persistent concern I have around how people are using the technology. Just finally, any final thoughts, any sense of where this is all going here, and what you hope to do with Flow and play a part of the unfolding future of immersive technology?

[00:15:46.801] Jason Marsh: It is really interesting to be ahead of the market for so many years and to see I think really how it's all coming together now. For us specifically, it feels like we're at that point where you're an overnight success after working on it for 10 years. So that is very exciting and I guess I would also say I didn't realize how wonderful the AI feels. I didn't know that we needed it, but now that you start to use it, you realize, oh, this really is that Jarvis experience. This is that Iron Man experience where it just feels more human than high-tech. And that's a twist. I didn't realize the humanity of the end results. I'm excited about that and to share it with the world.

[00:16:34.189] Kent Bye: Awesome. Well, Jason, it was a pleasure to get a chance to check out your latest demo and catch up with you again. And yeah, thanks for all the work that you've been doing for the last nine to 10 years to continue to push forward the dream of how XR technologies can give us much more insight as we navigate these patterns within data. So thanks again for joining me here on the podcast.

Jason Marsh: My pleasure.

Kent Bye: Thanks again for listening to this episode of the Voices of VR podcast. And if you enjoy the podcast, then please do spread the word, tell your friends, and consider becoming a member of the Patreon. This is a listener-supported podcast, and so I do rely upon donations from people like yourself in order to continue to bring this coverage. So you can become a member and donate today at patreon.com/voicesofvr. Thanks for listening.