I spoke with Jun Young Ro about planned improvements of VRChat’s Trust & Safety processes. See more context in the rough transcript below.

This is a listener-supported podcast through the Voices of VR Patreon.

Music: Fatality


Rough Transcript

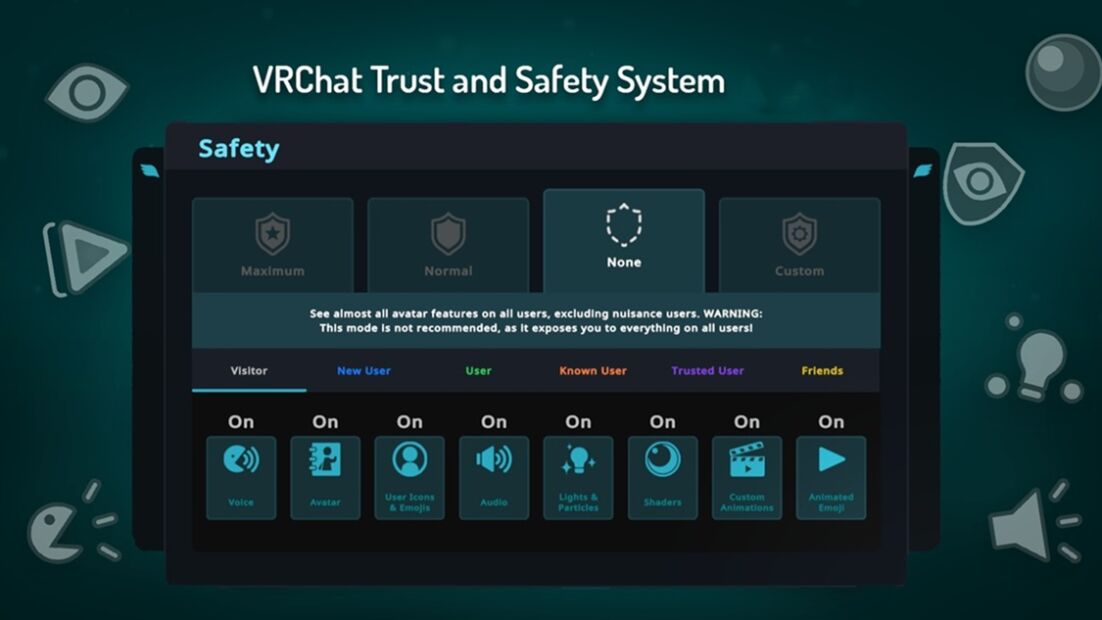

[00:00:05.458] Kent Bye: The Voices of VR Podcast. Hello, my name is Kent Bye, and welcome to the Voices of VR Podcast. It's a podcast that looks at the structures and forms of immersive storytelling and the future of spatial computing. You can support the podcast at patreon.com/voicesofvr. So this is the last of my trilogy of different conversations with VRChat, and then I'll be diving into my broader coverage around Raindance Immersive that happens to feature a lot of VRChat artists. And so today's interview is with VRChat's new trust and safety lead, Jun Young Ro, who'd been there for a couple months when I chatted with him in mid-July. And I got catalyzed with this conversation that I started with Harry X, where Harry had discovered that there were some gaps in the processes for trust and safety, where he was going into these different public avatar worlds and finding these not safe for work avatars that had these prefabs that enabled different sexual acts, but also not safe for work avatars with genitalia. So these were publicly available, so anybody could get access to them. And he was reporting them and they weren't taking fast action on them. They were lingering there for a while. And then they were being moved from being public avatars to private, but they weren't being removed from the system. So if you already had those avatars, you would still be able to have them and then potentially still be able to share them with other users. And so I ended up having a chance to chat briefly with a couple of VRChat folks around the avatar marketplace.
I had asked them questions around these claims around trust and safety, and they gave an answer, but they really weren't experts in that area, and so they actually recommended that I talk to Jun, because he's newly hired there and basically going to be doing lots of different reorganization. So in the process of this conversation, there's a lot of mixed messages around what is the status of not safe for work avatars within the context of VRChat. And it sounds like they're going to be undergoing a process of trying to come into more clear alignment between what their terms of service is and what their community guidelines are, with all those things saying the same thing, because right now there's some conflicting messages as to what the exact status of not safe for work avatars is. And they're also in the process of trying to, you know, implement these new trust and safety processes over the next three months, six months, year, two years. Like, this is basically a two to three year plan. And so part of that plan is to have more community engagement to get feedback on some of these different ideas. And so just because we're talking about these as a concept or idea doesn't mean it's actually going to be implemented, because they're still in the process of taking feedback from the community and going through their normal process of trying to get everything in alignment. So we talk about where things are at with trust and safety within VRChat, and it is a bit of a sensitive topic where most social VR platforms don't usually have much in-depth conversation around these topics, just because there is a bit of a cat and mouse game that happens with people who are trying to abuse the platform in different ways. And so there's lots of different mitigating strategies that these platforms have in order to create a safe and trustworthy environment.
And there's already all sorts of trust and safety processes that VRChat has, but there's lots of stuff that is behind a veiled curtain that they're not talking specifically around. But just generally, they're trying to come up with some better professionalized processes so that they can be more responsive and come into more alignment with some of these different issues that were being raised. So anyway, we'll be covering all that and more on today's episode of the Voices of VR podcast. So this interview with Jun happened on Monday, July 14th, 2025. So with that, let's go ahead and dive right in.

[00:03:34.676] Jun Young Ro: So my name is Jun Young Ro. I am leading the trust and safety operations and the policy for VRChat. I joined about three and a half months ago. Right now I'm leading the trust and safety team, of course. However, of my two biggest priorities right now, one is doing an assessment as to where we are, and then what are the strategies that we are going to have for the next one year, two years, and three years. How are we going to transform our program to the next level, so that way we are making sure our community becomes a vibrant place? It becomes a creative place. It becomes a safe place for all of our users, right? So that is one of the biggest missions that I have. And the second part is, in order for me to get there, what do we need, right? How do we need to basically turn around our program? That's kind of similar to the assessment, but basically the second part is a people part. So in order for us to get there, what, for example, are the areas of improvement that we need to make? For example, what kind of expertise can we bring in externally to look at the areas that we never looked at, or that we never really, really carefully looked into before? So we want to kind of flip those. And the other part, we want to do more outreach with the users. We want to talk more with the communities. We want to talk more with the small or large or medium communities to learn and hear about what their pain points are and how we can basically resolve some of the pain points they have. So those two are something that I'm heavily, heavily focusing on right now.

[00:05:04.440] Kent Bye: Right. Maybe you could give a bit more context as to your background and your journey into this space.

[00:05:08.881] Jun Young Ro: Yeah. So very briefly, I used to work in the government right after college, did some national security work, and then I moved to banking. That's when I did anti-money laundering, counter-terrorist financing, typical money investigations work. And then I joined TikTok back in 2019. This is when no one knew what TikTok was. Like if you had a 13-year-old, they knew what TikTok was, but no one else knew what TikTok was. So I was one of the very few members to kind of build out the trust and safety operations for TikTok. And thereafter I went to Google, doing trust and safety work. And right after, I joined the company called Naver Z, Zepeto. It's a metaverse company. So it is somewhat similar to what VRChat does, except that it doesn't use a headset, but it's mobile-based. It's a metaverse. It's a 3D SS. This was post-pandemic, when the metaverse was skyrocketing everywhere, right? So I joined there to lead the trust and safety team. And that's when I kind of really, really got interested in trust and safety in the metaverse or 3D areas, right? Because traditionally in 2D or social media, at the end of the day, a lot of content is kind of creator to mass, like creator to population, where people view. Whereas in the metaverse, it was not so much that there's one influencer and there's a massive mass looking at them, but it was a lot of one-on-one interactions that are more meaningful, and it almost feels more lively. Right. And the other part is, before the metaverse kind of kicked in, we used to see gaming and social media separately, but with the coming of the metaverse, it kind of combined everything together and started to really put everything together as a kind of social game area, right? So in Zepeto, however, it has more of a gaming component to it because you use the mobile. For me, VRChat was very, very, very interesting.
And then also it aligned a lot with my interest, because in VRChat, an interaction is much more lively. It almost seems like somebody's right next to you. You're not just looking at the mobile. Of course, we have a great desktop and mobile version. But as soon as you put on the headset, you are just falling into the world where you interact with people, you just meet people. And I think that amazed me. That's what kind of brought me to join here. So, of course, it has many more layers, many more problems when it comes to abuse vectors. I will say that. However, I love solving problems. So that is one of the biggest reasons why I decided to join VRChat.

[00:07:44.540] Kent Bye: And can you speak to just broadly, what was some of the larger context for why VRChat wanted to bring you in to head up kind of reevaluating all the existing trust and safety processes and just kind of give me a state of the union in terms of what's happening with trust and safety at VRChat?

[00:08:00.273] Jun Young Ro: Yeah, so basically, it wasn't that anything bad was happening that made them decide to turn the ship around, right? However, whenever the business grows, the typical flow is that companies start to focus a lot on monetization, growing the community, a lot on the kind of business side, the sales side, right? And then they will kind of roll back, thinking, all right, what are we missing? There's a legal component to it. There's a user support function to it. There's a trust and safety component to it. They kind of roll back a little late, as in, we need to kind of build that up. One of the things is we had excellent trust and safety. Some of the team members, whether it's engineers, whether it's product, whether it's operations, have been working on trust and safety and really helping out to build a team around it. However, we never really had a kind of leader to strategize this. And then also there's a product roadmap, and how do we embed trust and safety into the product roadmap and kind of grow together, right? I think those are the conversations that have been had among the senior management team. So my boss, Jeremy, who is also general counsel, decided to bring in this role, basically leading the program to the next level. So coming from more of just an operational-based team, how do we make sure this team goes from operational to more of a program, more of a strategy that really is scalable and has a larger impact on the users, right? Because at the end of the day, when we do a lot of stuff reactively, when we leave this at the operation level, the impact that we make is a bit lower than the impact that we could make at the program level. So what I mean by that is user empowerment. Something that I told you about, the community outreach. How do we work with the community?
How do we embed safety, not at the moderation level, but at the product level, at the feature level, right? All that needs to kind of come together. So in order for us to bring this into more of a large-scale program, I think that is where VRChat kind of decided to say, hey, let's bring in somebody. And very fortunately, I was picked as one of the candidates to join the company and rebuild this program.

[00:10:05.646] Kent Bye: Nice, well, I've talked to folks like Brittan Heller, who has made the distinction between content moderation and conduct moderation, where there's the content that you can take a look at and evaluate independent of any sort of moment in time and actions, but then there's how people are actually acting in context. And so it seems like the unique challenge within the context of a platform like VRChat is that there's both a content side of the moderation issues, but also a conduct side. So how do you start to approach the differentiation between content and conduct?

[00:10:38.230] Jun Young Ro: Yeah, thank you. I mean, that's great. And then, you know, when you differentiate between content moderation and conduct moderation, it almost looks like a different thing, but it's sometimes the same thing. So I want to give you one example. So of course, I can't name my previous company. But at a company that I used to work for, there was a comment under a video that said, "Love your chicken dance." "Love your chicken dance," that's it. So when you read the comment "love your chicken dance," there's no derogatory term, there's no negative term. So the terms look perfectly fine, right? However, when you look at the video, basically it is a video of a person with a disability, who doesn't have an arm, and who, basically, moving around, looks like a chicken, right? So it is degrading an individual. So what that does is that, at the end of the day, when you are looking at the content, content has this indirect impact of contextual targeting towards individuals. So at the end of the day, content and conduct are, unfortunately, intertwined together, right? And that is what makes trust and safety much, much, much more difficult, because one is that there's conduct that doesn't use any derogatory terms, but uses very indirect terminology to penetrate the people. But also there are areas where it could be perceived as completely different. For example, in the US, LGBTQ content can be perfectly fine. In the Middle East, it is not. It's legally not allowed, right? Then how do we deal with that, right? So it's not just how we view the content, and not just the difficult content, but also, depending on the geolocation you are in, depending on which country's compliance you're following, the policy and then also some of the behavior that you see, or the tolerance towards those policies and behavior, may be very different. So that is what makes this job very, very difficult.

[00:12:26.400] Kent Bye: So I had a user reach out to me, Harry X, who had been making a number of different reports of terms of service violating avatars, basically avatars that had adult content where, when you went to the expressions menu, you could either get them naked, it would expose genitalia, there's essentially a number of prefabs, like SPS, PCS, DPS, TPS, live pop, all of these are in the erotic roleplay context, and, you know, some of these different packages were available on public avatars. And so there's been a little bit of a confusing gray area when it comes to VRChat's not safe for work policies. And I found a comment from Tupper in a forum where he says, creation or usage of not safe for work avatars is prohibited by the VRChat terms of service, no matter the instance type. And he has a little footnote. And he defines the not safe for work avatars as defined by objectionable content, which refers to the terms of service that says user content could not be deemed by a reasonable person to be objectionable, profane, indecent, pornographic, harassing, threatening, embarrassing, hateful, or otherwise inappropriate. But then sometimes as I go through, like the culture of VRChat over time has had some conflicting information, where if there's a complete ban on not safe for work content or avatars or conduct, there'll be like a community guidelines that says, no matter what, our terms of service are the final word for the VRChat rules. However, these community guidelines are meant to help you understand how we expect you to behave. And then number two is keep private things private. If you have avatars or other content that might be sensitive, intimate, or provocative, keep it in private spaces with users that agree to see it. So it's a bit of somewhat conflicting information in terms of like, hey, if it's private, then it's okay.
Similarly, in the creator guidelines, it says private avatars may contain some of this content, either controversial, sensitive, intimate, provocative, extreme horror, but they cannot be used in public spaces. See the community guidelines, which kind of reiterates this kind of public private. And then finally, there's been a tool tip that's been in the interstitial for VRChat that has said, these actions can lead to bans. And it says, quote, not safe for work, avatars, behavior, or content stream are shared from private worlds, invite plus or friends, or not safe for work, avatars, behavior, or content in public worlds, public or friends. So basically, don't stream any not safe for work content. But there seems to, again, be a differentiation between public and private. Just curious to hear if you have any comment in terms of some of these conflicting messages in terms of like the status of not safe for work, especially in the context of some of these terms of service violating avatars end up in a public listing where children can get ahold of them.

[00:15:09.015] Jun Young Ro: Yeah. Thank you. Thank you so much, Kent. I think there's two parts that I can answer. One is the policy that you just laid out, and the other part is about the actual avatars, the NSFW. When it comes to policy, I think we could definitely do a better job rewriting those policies. And that is, in fact, what we're going to be doing as an initiative for the rest of this year. We are going to be working to refine the community guidelines, as well as working on the terms of service, to make sure some of these provisions are a bit more clearly articulated to the users, right? So that way, when users like you and the users who are reaching out to you are reading it, it makes much more sense, it's much clearer. So for example, at the end of the day, terms of service are legally binding documents. So they have very hardened words that are sometimes hard to read. So that is actually something that I spoke to my legal team about, thinking, all right, when we are presenting this to the users, we want it to be user friendly. So of course it has to be a bit more controlled, but how can we also make this more accessible for the users? So that is definitely a part that we're going to be working on to revamp, so that way the confusion is minimized. That is something we're going to be working on. And when it comes to NSFW, I just want to reiterate here that we are really, really deeply committed to fostering a safe and welcoming environment for VRChat. And we take online abuse very, very, very seriously. So to the user who basically identified some of these experiences, one is that, you know, we are sorry for such things to happen. But I think that is just not something that we tolerate on our platform.
However, because the trust and safety issues and all these online abuses are significant, constantly evolving, constantly becoming more sophisticated in penetrating the platform, and constantly happening, there are times that some of these, what we call internally, leakages happen. Some of them basically get leaked and go to the public platform, where the other users can take a look at them. Now, for VRChat, we've been working kind of around the clock to make sure that some of these abusive materials, when we identify them or when we get a user report, we basically get to them and start removing them. However, because we're working with limited resources, there have been some delays. Right now, we're going to turn that around. So our senior leadership team decided to put much more investment into trust and safety. So we are going to be basically increasing the investment. What that means is that we are going to change how we do, and what we do with, trust and safety, so some of this content will be removed much faster. And then also we are going to use much more high-end technology to identify it and then basically proactively remove it from the platform. So it's a long way. It just takes a long time to get there. So for example, the comparison that I use is that NYPD pours millions and millions of dollars into the police force, right? But there is still a lot of crime that happens in New York City. Doesn't mean we should justify the crime, right?
But the message that I'm trying to get to is, at the end of the day, as much resources as we pour in, there will still be some subset of the abuses happening. There will be users who will constantly try to penetrate our programs, right? So one is, it's not just an investment, it's not just the pouring of money and resources into the detection mechanism that we're going to be doing, but we're also going to work with the communities and users to earn the trust. What that means is that we're going to be rolling out more user empowerment and then also more transparency in the work between us and the community. So that way we could kind of earn the trust from the community. And then also we could get an assist from the community to basically identify these types of users out there, and get help from the community. So this is a kind of collective action that we need to work on together. So in order for us to get there, we need to earn the trust. So that is basically what we are aiming to do for the second half of this year.

[00:18:58.615] Kent Bye: So one of the things that rolled out recently on the VRChat platform was age verification. And one of the things that Harry X said was that at this point, there doesn't seem to be any explicit functionality that's toggled to that. Like there isn't anything that is either enabled or prevented at this point with that age verification. It's essentially a flag that is on your avatar, but that there isn't any functional difference between getting that or not. Do you expect that as you move forward, that some of these more not safe for work features may be added to a functionality for that type of age verified? Like it's okay to do that if you are verified to be 18 plus. Just curious to hear that.

[00:19:36.545] Jun Young Ro: Yeah, I can. And of course, I need to be very, very careful when I talk about NSFW. NSFW has a lot of tolerance to it, right? There's a spectrum from something that we just do not tolerate at all to something that we may tolerate. So there's a threshold to it. And as you nailed it right now, our age verification program is evolving, and our product team is actively working to figure out how we basically turn this into more of a scalable product roadmap, right? So what you mentioned, utilizing this to minimize some of the exposure to younger users, is definitely in the roadmap. And of course, content that we do not tolerate will still be removed regardless of age verification. However, if there are policies where we can still allow this for, for example, older users, we are going to put that into the bucket of age verification and maybe allow it to the people who go through age verification. So that is definitely in our pipeline. And that's something that VRChat is committed to building upon.

[00:20:34.144] Kent Bye: So it reminds me of a famous Supreme Court case where they were asked to define pornography, and the response is sort of like, I know it when I see it, where it's still kind of ambiguous. But yet it sounds like as you're going through this process, there is this gray area that has existed, where there is content, there are people that are specifically into the realm of erotic role play in private instances. There's a whole sub-community of folks that are using a lot of these different tools. So do you have any sense of how you're going to start to address this issue of essentially defining pornography? Because you have to draw a line. So how do you approach that?

[00:21:16.851] Jun Young Ro: It takes a very long time to do. And then, Kent, if you remember, I talked about the trust between us and the community. Why I think the trust is very important is that it takes a moment, a second, for VRChat to go from an okay community to become a community full of pornography, right? It takes a moment for VRChat to go from an okay community to a censorship community. People may see it as, oh, you're Chinese, right? Chinese government, right? So at the end of the day, it's not just the policy that you stand by, but also how you communicate this policy standard. How you communicate where you stand with the community, how you work with the community, kind of matters, right? That's where Tupper comes in, where a lot of the community hours kind of come in, and basically, I don't want to say negotiate, but we kind of work with our community, understanding our existing policy, to understand where we feel comfortable. That is why I love using the word iteration. Like, policy is never set in stone for me, right? So for example, about seven, eight years ago on the famous social media platforms, if, for example, any female came onto social media, made a recording, uploaded a video, and, for example, had see-through clothing where you could see, for example, nipples, right? That used to be a violation on a lot of different platforms, right? That evolved, right? That evolved with the society. So now you see that a lot. That is not a violation now, right? For example, micro bikini. Even 10 years ago, no, not even 10 years ago, even six years ago, micro bikini was not allowed on a lot of social media. Now it is, right? So basically all this kind of definition, and where we stand as to what we see as a policy, is constantly evolving based on not just the policy decisions but also what kind of content is created. So for example, say the VRChat micro bikini content is full of, for example, explicit sexual content.
Then we may not be ready yet, right? However, if we have plenty of creators that would love to create really amazing assets, amazing content that is fun and engaging, assets, for example, maybe meant for sale, a variety of content created around some of the policies that we said no to before, then we may have to revisit those policies and kind of flip that around, right? So as I said, and as you said about pornography, unfortunately, there are definitions that we do not tolerate. For example, anything related to children, we do not tolerate. There's no room for negotiation. We do have a zero tolerance policy there. But when it comes to the accepted policies, we're happy to always open our heads and also open our arms to constantly review where we are, at the time of the year that we're living in, at the time of the society that we're living in, and also what the community wants from us, and then basically evolve that as we go on.

[00:24:00.086] Kent Bye: Yeah, and I think everyone can agree that children having access to these tools and experiences is something that I don't think anybody wants. So one of the things that Harry X was bringing up was the threat of predators who are on the VRChat platform, and you mentioned a couple of points. One was that it seems as though VRChat may be in this kind of gray area where predators may find a child and then maybe they go off platform and exchange videos and photos. So it's kind of off of whatever control VRChat could have, but maybe they come back in, and, you know, a predator may have an avatar that they allow the child to clone. And then a part of that avatar could be some of these different types of prefabs that allow them to have this more explicit sexual encounter with erotic role play. And so the concern is, for one, whether there's the ability to clone these avatars that have these prefabs, but also, you know, most of this is happening on the Quest platform. And so, you know, is this something that you can mitigate by just looking at the capability of some of these things on Quest, versus this becoming a PC VR only thing? So just curious to hear if there's any types of strategies that you have in terms of the unique problem of, well, maybe it's not so unique, in that this probably happens on other social platforms as well, but it seems like there may be unique things to consider when it comes to trying to mitigate some of these predatory behaviors on a platform like VRChat.

[00:25:28.817] Jun Young Ro: Yeah, Kent, thank you so much for that. And I think when it comes to child safety, minor safety, as a father of two, it touches me a lot. And then previously, I used to serve as a board director for the nonprofit called SOSA. And basically, it is there to help some of these younger users who may become potential victims of the sex crimes that happen online. And at the end of the day, if you just sample out one case, saying, hey, here's a case, no doubt, why didn't you do anything about it, right? Yes, in that case, it looks like the platform failed. But if you kind of step back and look at the entire abuse vector that is happening, the reality is that it is really, really difficult for us to identify, right? And what also weighs in is privacy, too. So as much as our users want privacy from us, they also want security from us, or safety from us. How do we weigh between them and make sure we find a sweet spot to provide this protection, right? I think that is one area that makes minor safety, child safety, very difficult all around the industry. So for example, Meta will do end-to-end encryption on the messengers, right? And as soon as that kind of rolled out, a lot of the conversation was around, hey, what about some of these CSAM images being exchanged on your platform? Some of the grooming behavior that happens on the platform, what are you going to do? So there's always that balance, the safety and the privacy always in battle. So to kind of roll that back to us, we take that very, very seriously. And at the end of the day, a lot of those vectors that we see, for example, a lot of children... well, we do not allow children on our platform. Some of these minors kind of come on, and maybe they are used as a medium for these predators. It doesn't mean the content is solely used for them too, right?
It could also be used by perfectly normal users for perfectly normal cases, right? Now, the problem is how do we identify that this is a potential, for example, criminal or abuser, versus this is perfectly normal. I think that is hard to develop. So I cannot share a lot yet, but we are working towards building a model internally, basically developing some of the internal systems to identify the type of predator behavior that is happening, right? So we're in the process of doing it. But as I told you, from an industry perspective, it has a very high false positive rate, because it's really hard to identify. Plus, some of these people who are coming in to do penetration know that platforms are looking. So they use a very indirect manner to carry it out. So it becomes more and more difficult for us to identify. However, we are going to be working on it. So another initiative that we're going to be doing is, without naming it, we're going to be basically participating in and working with the bigger alliances that care about this type of behavior, specifically minor safety and specifically the sex crimes happening against children. And we are going to put our heads together from an industry perspective. And we also want to learn from other industry peers, to bring in some of the know-how and knowledge to implement in VRChat and provide that safety net for our users.

[00:28:38.211] Kent Bye: And it seems like that VRChat is such a small team. And yet at the same time, there's a ton of concurrent users, like over a hundred thousand concurrent users, sometimes at peak. So millions of users overall, but like in any given time, there could be over a hundred thousand people on the platform. And so heading up the trust and safety team, just curious to hear, some of your thoughts on the strategy of mixing both the manpower of things that can only be done by humans, but also like these automated systems that are able to help you really scale up to really address the problems that are really creating a context and an environment to really enable trust and safety to thrive on the

[00:29:14.395] Jun Young Ro: Exactly. Thank you so much, Kent. And again, I love how you put your question because a lot of the time people will ask you, hey, what about the community moderation? And usually my reaction to the community moderation is I think the community moderation is very, very important. But if you don't have a fundamental infrastructure, so for example, you let the community police themselves in the community. But if there's actually somebody coming, you know, people with a gun coming to the neighborhood and starting to shoot, you need a police force to come in and respond to it. Right. So there is a fine balance. So when it comes to a trust and safety program, I think there are three core programs that you need. As you said, there's a human component to it. There's a community component to it. And there's an AI technology or technology component to it. Because at the end of the day, there are contextual cases that happen. However, when it comes to the contextual, there's a decision making that requires context to figure out. But what we need from AI is how do we flag this behavior, right? When you look at the trend, there are consistent or repetitive behaviors or repetitive attributes that are happening consistently. So what we need to do, what we are going to do, is we are going to develop the model. We're also going to basically look for the models or use the model to identify those signals and surface them to us, right? And we're going to spend more time training our SMEs to identify those signals and take action as soon as possible. So those three, the community, the models or the technology, and the human part, all three need to kind of balance out. So our goal is to basically pour resources and investment into all three of those areas, right?

[00:30:43.257] Kent Bye: So in talking to Harry X, one of the things that he also mentioned was that to really file a report, you often need to be recording and submit evidence to really show this kind of terms of service violating conduct from other users. And so I know that Horizon Worlds and other platforms have experimented with the ability to kind of report data inline, so you maybe push a button and it has taken like a rolling buffer of the recording that's already been there. And so I'm just curious to hear some of your thoughts. One of the things that Harry X said is that when he was reporting terms of service violating avatars, he would report it and he wouldn't see any action taken, or it would just kind of go into a void. But then when he would make a ticket on help.vrchat.com, on the more official Zendesk system, then he could follow up and see that action was taken. And so I'm just curious to hear some of your thoughts on how to really strengthen the ability for people to, while being in VR, still make some of these different reports around trust and safety.

[00:31:40.722] Jun Young Ro: Yeah. Yeah. Thank you so much, Kent. And in fact, that was actually a meeting that I had today with my engineering team. So we were actually discussing it, and I think I do agree. Like for example, in a lot of these metaverse or 3D SS, the companies, when you use the report, it will record sessions and then send them to the moderators to review. Horizon definitely does it. Where we are right now is we have a technology in testing that we are going to be able to use. However, there's also the privacy concern that we cannot ignore, right? So for example, someone could falsely report somebody and it could be there. And then also, because it's such a wide video that it could capture, it could have people that have no involvement with the user report, but they're being captured through the video recording, right? Also, how we are going to handle that video recording is another thing. The other part is video recording itself is just a large amount from a data perspective. How do we store this effectively while making sure that we are not wasting a lot of money, right? So all of that needs to play together. So we are actively working on that to address that part and then potentially roll out something that, one, is effective. So that way we could capture some of the abusive behavior that is happening without relying on users to provide such a lengthy comment just explaining what they saw, from the visual to the text. The other part is how do we also make sure we respect the privacy of all the users, right? So that will require our legal review, and then some of the other work needs to be done. So all of that needs to kind of come together to basically roll out the feature. So it's a work in progress, and hopefully we will deliver something that is somewhat convenient for the users soon.

[00:33:18.058] Kent Bye: Awesome. And finally, what do you think is the ultimate potential of virtual reality and what it might be able to enable?

[00:33:26.334] Jun Young Ro: Yeah, I'm a trust and safety person, right? So this is something that I love to talk about. And thank you. Well, Kent, for the last question, thank you so much for not throwing a hard question at me so that I could kind of talk a little bit more freely. And this is something that I truly believe in. And hopefully something that I want to do is when I... It's not just me. Anyone who's in the trust and safety industry wants to build trust and safety. We don't just think about bringing in more people, bringing in more AIs to police the platform. That's not what we're thinking about, right? What we do is as a policy. So a lot of times, how do you work with some of these nonprofits, some of these civil society organizations, to kind of validate, as checks and balances, hey, is what I'm doing the right thing, right? Sometimes people will onboard a youth council. People will onboard an external advisory council to do a check and balance of your platform, right? So what that means is it's such a large program that you need for trust and safety. What that also means is that there's a lot you can do. So if you ask me what is one thing that I want to see in a few years for VRChat, it is, for example: if you go onto Google, TikTok, or any platform and say, I want to kill myself, right? What you're going to see is something called a PSA, the public service announcement. What it does is say, hey, there's help. Please call this number and reach out to them. But if you think about it, it stops there, right? In virtual reality, I feel like we can do more. So what that means is this. This is a concept in my head, right? I never talked to my product team. I never talked to my CEO. So please, please don't think this is on the roadmap. This is my personal belief. Let's just say, Kent, somebody comes in and says, hey, I want to die. I want to kill myself, right? What we do is we ask them, hey, do you need some help? 
And if the person says yes, for example, we will call that person to a special world. We will just zero out your ID. There's no ID. You become just like one individual. And what we do is we partner with some of these hotlines, right? And basically when that triggers, we will notify them. We'll provide a VR headset for them. And basically we ask a counselor to join VRChat in the world one-on-one and provide counseling in the world, right? What can we do bigger? Maybe we could work with some of these groups that are made up of survivors, made up of volunteers that are really, really passionate like me, right? And what we do is we actually pay for training. So we bring in the civil society organization, the nonprofit, to train our community. And whenever a user, for example, shows the distress sign of, hey, I want to die, I want to kill myself, I want to harm myself, we ask them, do you need help? We send them to the call. Maybe it's not the civil society organization. It's not the nonprofit that we call, but we actually call some of our users to come in and provide that, hey, I'm here for you. Talk to me. Let me help you. And if you need further help, please call them. Do here, right? How can we help our community to look out for each other? Because the VR, because the virtual reality is immersive. What immersive means is there's no physical component to it, but still we're connected very, very closely, and we're connected much closer than on social media. So can we leverage this to build a true community? And for me, a safe community is something that I want to build. And that is one of the big ambitions that I have.

[00:36:51.880] Kent Bye: Nice. And is there anything else that's left unsaid that you'd like to say to the broader immersive and VR chat community?

[00:36:57.662] Jun Young Ro: Yeah, first of all, thank you so much for listening. I'm three months into my job. So please, if you are VRChat users, please bear with me. We are growing and please don't send me any threat emails, please. But I am also a user. I also have kids in my home, and I do know and I'm aware of some of these abuses that happen on the platform. Just bear with us, because it is not easy to do. It's not an easy job. It is sometimes very, very difficult to make a policy decision. Sometimes it is very difficult to identify those blind spots. Sometimes it is very difficult to just fight against abuse. And we have, you know, I really don't want to compare ourselves to all these service members, but, you know, there are people out there constantly, consistently fighting against crimes or terrorism or all that. And it just takes one second. It takes one turnaround for everything to collapse, right? And the reality is trust and safety is very similar, where it just takes one second that we step back. It takes one second that we turn our eyes somewhere else, and it just destroys everything that we built, right? So we are really, really committed to fostering a safe environment for VRChat. And what we want to do is go above and beyond with a trust and safety program that you never experienced on any other platform. Why? Because we are a virtual reality platform. What you experience in social media, we want to do more. We want to go above and beyond. And that's the ambition that our company and our leadership and VRChat have. So please bear with us and please understand. And we are going to do our best to protect you and protect the community. So my last begging or word for you is help us. Help me so we could help you. We could keep this community safer, and we could make this community a much healthier and more vibrant place for all of us.

[00:38:59.209] Kent Bye: Where's the best place for people to continue the conversation for feedback or if people do have thoughts or want to reach out, what's the best way for them to be a part of this ongoing conversation?

[00:39:10.603] Jun Young Ro: Yeah, yeah, yeah. So one is definitely we are going to start opening up our conversation online through the communities and through the groups that are happening. That is not prepared yet. We are working with the community team to do that. That is being planned out. And the second part is, in case there's any feedback that you'd like to give, please come to our support center and submit a ticket. Submit a ticket, and any trust and safety issues will be routed to me. Please don't spam us, right? You don't want our system to flag you as spam, right? Write it and send it to us. I do want to take a look at it, and I do take a look at some of the feedback that comes in. So I'd love to take a look at it, and if needed, we'll be in contact. Let's talk, right? Because at the end of the day, our doors are open and we want to hear every piece of feedback that you may have. Please note that I don't receive one or two emails. I receive thousands of emails a day, right? So there will be some delays, and potentially I may not be replying to all the messages. But please, any concerns, any feedback, I'd love to hear that.

[00:40:07.153] Kent Bye: Awesome. Well, Jun, thanks so much for taking the time to share a little bit more around some of your aspirations for how to bring about some changes there at the VRChat platform and responding to some of these more direct concerns that were brought up to me by a concerned user who was running into some of these different frictions. And so it sounds like there's going to be lots of different changes and that you've got a real vision for how to transform and change and make trust and safety a little bit more consistent. I'd say from my perspective of the VRChat community, there's a bit of like what happens in private spaces and the culture in the private VRChat. And then there's the public VRChat, which is kind of renowned for being filled with toxicity. So hopefully, you know, some of these broader changes will be able to be applied to both contexts, but especially just creating a safe platform regardless of where you go, whether it's private or public. So I'm really excited to see where you take this all in the future. And thanks again so much for joining me here on the podcast to share a little bit more around your vision for where you want to take it all.

[00:41:00.658] Jun Young Ro: 100%. And Kent, hopefully by next summer, we'll do another podcast, talk about some of the accomplishments that we made. So that way I could kind of showcase, maybe the user may not be able to tell, but at least I could share some of the numbers, for example, or some of the programs that we launched to kind of boast about what we did to protect our community.

[00:41:19.117] Kent Bye: That'd be great. Yeah. We'll pencil you in.

[00:41:22.200] Jun Young Ro: Thanks again. Thank you, Kent. Bye.

[00:41:24.585] Kent Bye: Thanks again for listening to this episode of the Voices of VR podcast. And if you enjoy the podcast, then please do spread the word, tell your friends, and consider becoming a member of the Patreon. This is a listener-supported podcast, and so I do rely upon donations from people like yourself in order to continue to bring this coverage. So you can become a member and donate today at patreon.com slash voicesofvr. Thanks for listening.