The Metaverse Standards Forum was announced today, June 21, 2022, as an effort that “brings together leading standards organizations and companies for industry-wide cooperation on interoperability standards needed to build the open metaverse.” Many key standards organizations are involved, including The Khronos Group, World Wide Web Consortium (W3C), Open Geospatial Consortium, Open AR Cloud, Spatial Web Foundation, Academy Software Foundation, and others. There’s also a critical mass of key XR companies, including Unity & Unreal Engine at the game engine level, as well as major players including Meta, Microsoft, Qualcomm, NVIDIA, Sony, Adobe, & Autodesk, and many other companies that have been involved in previous XR standards efforts.

I had a chance to speak with the President of The Khronos Group, Neil Trevett, as well as the Vice President of the Khronos Group, Martin Enthed, who also works as an Innovation Manager at IKEA. We unpack this announcement a bit, talking about the level of interoperability at which the Metaverse Standards Forum will start: whether it’s at the level of 3D assets like glTF, or at the level of game engine formats and scene graph representations like USD. Will this be an effort to get assets into a format that can be input into either Unreal Engine or Unity with consistent render outputs? Or to export projects between them? Or will the focus be on figuring out how to add behaviors to glTF objects? There are many places to start, and this group of companies and standards organizations will be listening to use cases from companies and coordinating between the major Standards Developing Organizations to see whether the relevant domain expertise already exists within any of their individual standards, or whether there are higher-level Open Metaverse Interoperability standards that can start to be defined.

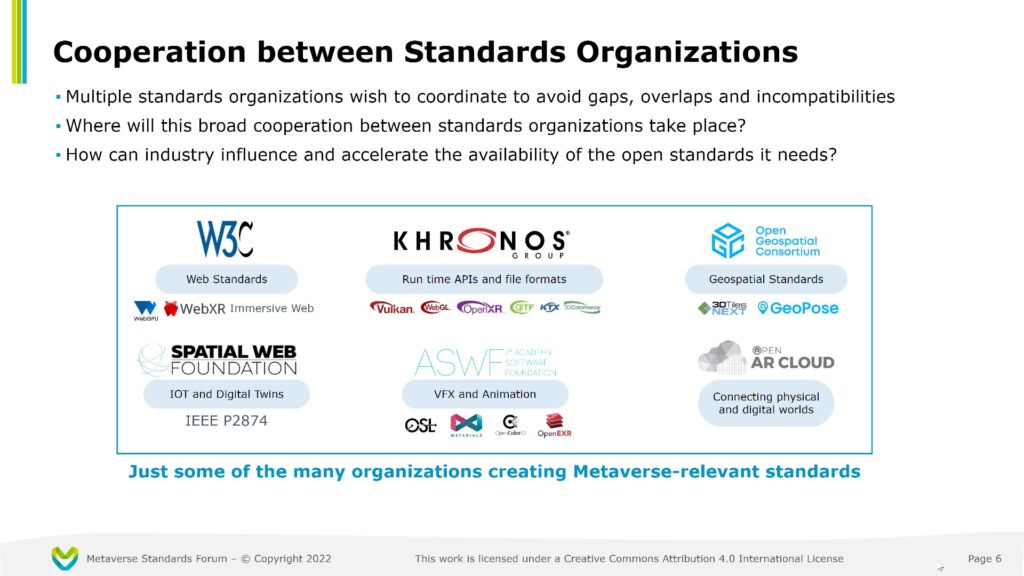

The cooperation between the standards organizations is also really exciting news. Slide #6 from the Metaverse Standards Forum launch slides shows web standards from the W3C, run-time XR APIs and file formats from the Khronos Group, geospatial standards from the Open Geospatial Consortium, IoT and digital twin formats from the Spatial Web Foundation, VFX & animation standards from the Academy Software Foundation, and connecting physical and digital worlds with Open AR Cloud. So it’s exciting to see how all of these different Standards Developing Organizations will collaborate and cooperate with each other, especially as more spatial information is being included, and to see where that information will live and in what standardized format.

We cover a lot of the latest announcements in this podcast conversation, but also ground them in the real use cases that IKEA is aiming to leverage. IKEA is in the business of selling physical products, so the virtual representations of these objects are merely a means to an end for customers to eventually buy the physical product. IKEA has been taking a very pragmatic approach of looking for persistent spatial file formats with uniformity in how that content is rendered. Much of its involvement in these standardization efforts has been to push the XR community forward with 3D Commerce standards within the Khronos Group for consistent rendering of spatial content, and with the Metaverse Standards Forum to help create persistent spatial formats that make it easy to deliver spatial information to its customers.

Trevett is resistant towards overly ambitious efforts that try to boil the ocean, and emphasizes the utility of iteration around the biggest problems and use cases in a minimum viable product approach. He says that this effort is trying to bake the individual interoperability bricks for the Metaverse rather than trying to imagine and build whatever the Cathedral of the Metaverse may become in 20 years. They’re focused on the near-term interoperability challenges that help to slowly build out the foundations for whatever the metaverse may become as it starts to blend the best aspects of the open web with the new affordances of XR and spatial computing. I’m excited to see where this effort goes, and I expect a lot of behind-the-scenes conversations.

Trevett says that there may be a lot of business-level decisions exploring to what degree it makes sense for companies like Unity or Epic Games to open up, but that one measure of success for open standards is that they increase competition within the industry. Many of the founding companies have been on the record supporting different visions of the Metaverse, and this is an opportunity for them to take action on those areas of interoperability, to ensure that the Metaverse is built upon an open foundation and to avoid the temptation to bake their proprietary software into the core of spatial computing.

Trevett says that figuring out the right level of abstraction at the right time is part of the art of open standards, so stay tuned for updates from the Metaverse Standards Forum to see whether the dream of an open Metaverse continues to make viable progress, or whether it ends up being a “honeypot trap for architectural astronauts” as John Carmack has claimed. Given the critical mass of key players in the industry and the Khronos Group’s past successes with standards like OpenXR, I’m holding out hope that this effort will help ground the hype and ambiguity of the Metaverse in tangible interoperability standards that make an open Metaverse a reality.

LISTEN TO THIS EPISODE OF THE VOICES OF VR PODCAST

This is a listener-supported podcast through the Voices of VR Patreon.

Music: Fatality

Rough Transcript

[00:00:05.412] Kent Bye: The Voices of VR Podcast. Hello, my name is Kent Bye, and welcome to the Voices of VR podcast. So today is Tuesday, June 21st, 2022, and there was an announcement from the Khronos Group about the Metaverse Standards Forum. This is a brand new forum that's an interdisciplinary collaboration amongst a number of different standards organizations, including the Khronos Group, the World Wide Web Consortium, the Open Geospatial Consortium, Open AR Cloud, the Spatial Web Foundation, the Academy Software Foundation, and many others, as well as a lot of big players that have been part of the OpenXR discussions within the Khronos Group, creating open interoperability standards in the context of XR. They're trying to take it to the next level, bringing together all these standards organizations, as well as all these companies, to look at specific use cases and see how they can bake different levels of interoperability into whatever the future of the Metaverse may become. By Neil's definition, the Metaverse is the open web with spatial computing on top of it. There are already open standards like OpenXR, which provides low-level APIs for interoperability across XR technology. Then there is WebXR, which looks at how to put spatial content onto the web and hooks into different aspects of OpenXR. But this is trying to create another layer of interoperability. Say you wanted to take something from, let's say, Unreal Engine, export it, and put it into Unity: is that the level of interoperability we're talking about here? I think that's part of the discussions that are starting to happen: at what point will there be even more layers of interoperability amongst existing game engines and ways of rendering spatial content? 
But generally, I think the idea is to come up with the next layers of open standards that go beyond any of the individual standards organizations, and to think about how those organizations can more closely cooperate and collaborate to build the future of the open metaverse that all these companies want. Let's unpack this with both the President and the Vice President of the Khronos Group: Neil Trevett, who works on developer ecosystems at NVIDIA and has been helping to lead the Metaverse Standards Forum, and Martin Enthed, an Innovation Manager at IKEA who also happens to be the Vice President of the Khronos Group. Martin helps to ground this conversation by looking at IKEA's interest in the Metaverse Standards Forum. A company like IKEA wants to create spatial objects and give its customers opportunities to render virtual representations of the physical furniture it's selling. What are the ways it can most seamlessly get this content into the hands of customers? From IKEA's perspective, there's a real need for standards that make it easier to build something now and still use it 5, 10, or 20 years into the future. So that's what we're covering on today's episode of the Voices of VR podcast. This interview with Neil and Martin happened on Thursday, June 16, 2022. So with that, let's go ahead and dive right in.

[00:03:16.308] Neil Trevett: So my name's Neil Trevett. My day job is at NVIDIA, where I do developer ecosystems. I'm here primarily today, though, with my Khronos hat on. I'm currently the elected president of the Khronos Group. And I actually have a third hat, I guess, because Khronos is helping to bootstrap the Metaverse Standards Forum. So we're going to be talking about that more today.

[00:03:40.049] Martin Enthed: And I'm Martin Enthed. I work as an innovation manager at IKEA, based over in Europe. I'm working with something we call spatial computing, so anything three-dimensional in this AR and VR world. That's what I'm doing, and I'm also joining Neil on the effort in Khronos, where I'm vice president.

[00:04:00.557] Kent Bye: All right. Well, Neil, I know I've seen you do a number of different talks about the metaverse and the future of the metaverse, and there's a number of different standards that you've already been working on with the Khronos Group. So maybe you could help set a broader context as to what this Metaverse Standards Forum is and how it might bring together additional standards developing organizations to start to address some of these more interoperable standards.

[00:04:28.995] Neil Trevett: Yes, absolutely. So the basic idea behind the forum is to create a venue where the standards organizations that are working on metaverse-relevant standards can come to cooperate and coordinate, and also engage with the industry companies that want to use those standards by feeding good requirements and coordinated use cases into the standards organizations. The basic idea is to get better standards faster for the metaverse. And we'll go into more detail on what the forum is, but one of the core guiding principles is that we don't want it to be a philosophical debate around what the metaverse is going to be in 20 years' time. Instead, we want to come bottom up, because one thing is certain. We may not know what the metaverse is going to be in 20 years, but I think most people have some kind of inkling that it's going to be a mixture of the connectivity of the web with some spatial computing goodness mixed in, in all kinds of interesting ways. But we don't need to know the final destination to start adding value today, because there are clear interoperability issues in many different domains that are going to need interoperability standards. Let's start working on those today. I like to say, you know, we can bake the interoperability bricks to build the metaverse. We don't yet have to start designing or building the cathedral. We know we're going to need the bricks. Let's start making those today, and the forum is intended to be a venue where we can do that productively.

[00:06:14.075] Kent Bye: Okay. And before we start to dig into some of the ways that you're involved, Martin, I'd love to get a little bit more context from you, Neil, in terms of the different standards development organizations. As I read through, I see a slide here that you're going to be releasing on the 21st. On that, you have a number of different standards development organizations: the W3C, the Khronos Group, the Open Geospatial Consortium, Open AR Cloud, the Academy Software Foundation, and the Spatial Web Foundation. Each of these covers a different layer of the stack: runtime APIs and file formats with the Khronos Group, web standards with the W3C, geospatial standards with the Open Geospatial Consortium. Open AR Cloud is connecting the physical and digital worlds, the ASWF covers visual effects and animation, and the Spatial Web Foundation covers IoT and digital twins. So maybe you could set the context for how you see each of these different standards organizations starting to cooperate and collaborate in the context of this Metaverse Standards Forum.

[00:07:19.112] Neil Trevett: Yeah. And you went through many of the important standards organizations that are working in the field of metaverse technologies. But again, the thing we can be sure of is that there is going to be lots of interoperability needed. All these different technologies are going to be brought together to interoperate in new and novel ways. As you say, it's going to be hardware talking to software. It's going to be native stacks talking to web stacks. It's going to be new classes of servers talking to clients. It's going to be wireless communications. It's going to be geospatial data sets being streamed, and AI being used to create geospatial data sets from all kinds of data sources. The list goes on and on. So the key thing is there's so much interoperability needed that it's beyond any one standards organization. At Khronos, in our own group, we do the hardware APIs and the file formats to feed those APIs, but we're not experts in IoT or geospatial or web stacks. All the other groups that you mentioned are the domain experts in those. All these standards groups are doing good work and progressing their standards. Sometimes those standards organizations will have one-to-one liaisons, but it's kind of sporadic. So put yourself in the position of a company that's saying, okay, I want to ship my metaverse experiences. I believe in open standards. You've convinced me, open standards are the way. Where do I go, right? Where do I go? There is no one place right now. So a company wanting to really engage with standards for the metaverse ends up going down a list of 24 different standards organizations, trying to understand what they're doing and trying to figure out for itself where the gaps are. Are things compatible or not? Are things working together or not? 
The standards community wanted to do better in its engagement with industry and have, for the first time, and we think it is the first time, a venue where all the standards orgs that are working on the metaverse are going to be, and where we can have the companies too. So for the first time we'll all be around a single table, coordinating and figuring out what the critical problems are that we can usefully solve today, which of the standards organizations are best able to solve those problems, and which other standards organizations can leverage that work or assist it in some way. And some of the companies that have already joined the forum have said they want to run plugfest and hackathon type projects, to not just talk about interoperability, but actually help standards organizations test interoperability in the real world. And they're willing to potentially fund that kind of project. So I think many of the standards organizations are looking forward to much closer engagement with industry. And I hope that this forum will be a good vehicle for the standards organizations to better serve the industry, which is, of course, our mission after all. So hopefully, if we can make this work, it's going to be a win-win for everyone.

[00:10:35.877] Kent Bye: And maybe one more context-setting question here. The thing that has been really striking for me, watching the Khronos Group over the years, is the way that you've been able to bring together so many different industry leaders with OpenXR to get such buy-in across the industry. And when you look at some of the founding members of this, some of the big names that jump out to me are Adobe, Meta, Sony, Unity, Epic Games, Autodesk, IKEA, which is here today, Microsoft, Qualcomm, OTOY, NVIDIA, Wayfair. I mean, the list goes on and on in terms of all the major players within the XR industry. So maybe you could speak to the sense of cooperation, collaboration, and buy-in that you're getting from so many existing members who have traditionally been involved in the Khronos Group, and whether there are more coming in with this new Metaverse Standards Forum initiative?

[00:11:32.500] Neil Trevett: Yeah, we have been pleasantly surprised by the level of support and interest as we've been working toward this rollout on Tuesday. The fundamental motivation, though, is the same whether it's members in an individual standards organization like Khronos or, in some cases, the same companies coming together in this forum. It's just a different level: working between standards organizations and coordinating multiple standards organizations, rather than working within one. The fundamental motivation for the good companies that get involved is enlightened self-interest. They realize that having the right standards at the right time is vital to their business, and if they can help get the right standards at the right time, their business is going to grow along with the greater industry. It is exactly the same dynamic. I think people are realizing that a lot of the standards orgs are doing good work, but the time has come: we need this extra layer of coordination on top. A lot of the initial members of the Metaverse Standards Forum are coming from Khronos because, of course, a lot of them are naturally involved in spatial computing in all kinds of different ways. But it's not just Khronos members. We have been working with some of the other standards organizations like the W3C, the Open Geospatial Consortium, and the IEEE, and they have been working to bring their members in too. And of course, many of the larger companies are in multiple standards organizations. So it's really been an industry-wide effort to bring these companies together. And Martin, you were one of the first supporters of this initiative, and one of the companies encouraging Khronos to be a bootstrap host. What was your interest? I'm interested to hear.

[00:13:23.005] Martin Enthed: The basic thing is that even though we are in Khronos, and there are a lot of things going on inside Khronos, the standards we do there are not enough to do all of this. The way we work, we are one of the users of the standards. We are not making them, but we are one of the use cases, and one of the more futuristic use cases. Maybe games and interactive content are almost already here. The things that we are talking about are more of a bigger experience, where things like our products come out into the world and should work everywhere and be interoperable and so on. Take an easy thing like placement. If I place something persistently in one place in the world, will it stay there when I go back half a year later? That doesn't really exist today. It sounds easy, but it will probably take a long time before we figure it out. Those kinds of things are interesting. And for us, trying to get the standards organizations to work together, trying to get the big companies working together, and also being part of this to feed our problems into the mix of everything that needs to be solved is super interesting. That's why we want to be part of it.

[00:14:44.325] Neil Trevett: I was just going to amplify something that Martin said at the end, which was vital: getting IKEA's needs into the mix. IKEA, of course, is just one member, but if we can use the forum venue to get companies to cooperate, discuss, and come up with requirements and use cases that we can feed into all of the standards organizations in the forum, that is like gold for any standards organization. In our experience, it's always surprisingly difficult for any standards organization to get the industry to tell it what it needs. So if we can create a concentrated and coordinated stream of requirements and needs flowing into the standards organizations, that is going to be great. Any standards organization is going to jump on that data and hopefully make it very actionable, and it'll accelerate the development of the standards they're working on.

[00:15:46.159] Kent Bye: Well, I know that the term metaverse itself has gone through a number of different hype cycle waves, and there's still a lot of uncertainty as to how exactly to define what the metaverse is. So Neil, I'd love to hear your take on that. And then, Martin, we can dive into IKEA's orientation to all of this a little bit more, to help ground it in some of the specific things that are catalyzing this cooperation. But Neil, I'd love to hear your thoughts, because with the metaverse, there's Neal Stephenson's definition that has been going around. There's been the whole cryptosphere, which I think has in some ways diluted the meaning of the metaverse for most people who are coming from the XR or spatial computing perspective. And then there's Matthew Ball, who has been writing a series of essays trying to map out a number of different tiers, which to me feels like probably the best approach to a framework for understanding the metaverse. But I'm wondering if there's any foundational orientation to help all these different companies make sense of how they're working together to create what I think of as XR or spatial computing, but what has been more widely referred to as the metaverse. And that's, of course, what you're deciding to call this initiative, much as the OpenXR initiative adopted the term XR. So there's a possibility of having this firm up into stricter definitions of what exactly we mean by the metaverse. I'd love to hear some of your initial takes on what the metaverse is in the context of the Metaverse Standards Forum.

[00:17:11.286] Neil Trevett: Yeah, that's a great question. As I say, we don't want the forum to turn into a debate shop as to what the metaverse is going to be in 20 years. But of course, you need to have some kind of framework and some idea of the domain that the forum wants to operate within. So it goes back to what I mentioned before, which is, I think, the best definition: it is the mix of the connectivity of the web with spatial computing. And of course, both of those things are very complex in their own right, so you can combine them in all kinds of interesting ways. But if you force me to put it into five words, "spatial evolution of the web" would be my definition. And of course, spatial user interaction is going to be a part of that, and that brings in the whole XR stuff, both augmented and virtual reality. But I don't think the metaverse is going to be limited to just that. There are many use cases for the metaverse where you wouldn't want to use augmented or virtual reality, and that doesn't lessen the need for having all kinds of different interfaces. You know, a factory manager managing a digital twin of their real-time factory is going to want to sit at a 49-inch monitor. They won't be roaming the shop floor all the time with their AR glasses on, and that's fine. I think the metaverse will be much broader than many of the more VR-type dystopian futures that are being imagined. We've talked about dystopian futures before. But again, it does come back to connectivity and spatial. When it comes to connectivity, it is going to be all kinds of new networking: 5G, wireless, edge servers, all of this stuff. And spatial computing is not just the UI, but the input and the semantic understanding of our spatial surroundings. AI is going to be a vital part of this mix too, understanding our semantic surroundings. 
And where these intersect is in creating content, user-created content in high-quality 3D. I think that's a big opportunity for AI and machine learning to really enable users. So whether it's grabbing 3D or generating 3D, and then communicating and collaborating, I think that's kind of the essential mix. Whether or not we'll be using "metaverse" as the text string in five years' time, I don't know. It may go the way of "information superhighway," which got replaced as a text string. I don't think the text string is actually that important. It's the concepts of connectivity and spatial computing that will be the continuous thread.

[00:19:58.295] Kent Bye: Yeah. And Martin, I'd love to get you into this conversation in terms of maybe you could give a bit more context as to the innovation unit that you're working on at IKEA and how you've already started to maybe work with or experiment with some of the XR and spatial computing technologies that have led you to be one of the first members of this new initiative to try to facilitate this larger conversation. I'd love to hear a bit about the backstory of what was happening to kind of lead you to be involved in this effort.

[00:20:27.157] Martin Enthed: The short version is that we started using computer graphics 15 years ago to make JPEGs, to do images, marketing images for customers. I've been leading that part on the technical side. Today we do that in large volume, and we have a big library with all our 3D models and so on. Seven years ago, I pitched the idea of looking into a future where we don't create JPEGs and save them. We actually create images and throw them away directly, in real time. That's really what we're talking about. Since five years ago, I've had a team looking into spatial computing, a spatial computing lab looking 10 years into the future. And the reasoning behind it is that a company like us, I would say every company, wants to transfer some kind of information to another human. And humans are three-dimensional beings. Even if we don't have any three-dimensional input, we create a three-dimensional world in our heads. That means that we want to store information in 3D. We want to understand the world in 3D. That's how humans behave. And as we work on creating a better everyday life for the many people, which is actually our vision, we want to transfer information in the best way we can. If 3D is better at doing it, then of course we should use 3D. And 3D is definitely better than a 2D image or a movie. Those have their place. But if you want to show a thing, and you can do it in a physical space where you can hold it up and walk around it, then that's great. If it's a virtual thing and you can do the same thing, then that's good. So this is the reason why we want to work with 3D, and that's what my team is looking at with spatial computing. For a company like us, and for any retailer, this is a side thing. We are not selling the experience. We are not selling what we do in 3D. We sell something else. That means that we want to do this as optimally as possible. 
We want to make it once and use it everywhere. And to be able to do that, we need to take part in the open standards discussion, because that's where that is happening. Everything we do will live on somebody else's device. We don't make headsets or phones; that's other people's business. But we use them to reach our customers with the information we need. Does that all make some kind of sense, Kent?

[00:23:03.157] Kent Bye: Yeah, yeah, it makes a lot of sense. And as I look at the companies that are involved here and think about the spatial computing industry generally, I see that Unreal Engine from Epic Games and Unity are really dominant in terms of what most companies use to produce any type of immersive experience. The challenge there, I'd say, is that if you're in either of those two systems, it ends up being kind of like a walled garden: you use whatever methods are available within that game engine to produce the immersive experience. But from what I can tell, what's very encouraging about seeing both Epic Games and Unity involved in this process is the possibility of exporting some of these experiences from these game engines into something more generalizable that could go out onto the open web. Or I don't know if there's another intermediate format that everything could compile out to or read in. I guess I'm trying to make sense of the minimum viable product first steps of an initiative like this: where do you even begin to bring everything together when everything has been so locked into these two game engines? Especially when you have something like WebXR, where Google has done a lot of implementation of that spec within the Chromium browser, but we don't see it implemented in Apple's Safari. And I don't see Apple listed among the initial founding members here. So if there is going to be some other method to distribute this within open web browsers, one of the big major companies that could be holding back these alternative distribution plans is also not listed here. So Neil and Martin, I'd love to hear any reflections on that, 
and whether that makes sense as an initial read on this, and any other context on how, as you move forward, you make sense of all these companies coming together and at what level of the stack you start operating.

[00:25:01.130] Neil Trevett: Yeah, no, it's a great question. I mean, and how precisely the interoperability at the deepest level is going to take place and how the players involved in various business models are going to find a path forward that increases their opportunity. It's a complex question. And I don't think there's an easy answer right now. It's going to take that Darwinian stew to ferment for a little while before some directions really become clear. And if you go deeper into the runtime stack, you know, things get harder. You know, do you want literally to be able to take, you know, an Unreal Blueprint and take it into Unity? That could be too much, but actually that's kind of what people are wanting. it could be potentially that the asset formats can be a bridge between different runtimes. So people can innovate on their runtimes, runtime engines like Unity and Epic, two good examples you use. Trying to get binary compatibility at the runtime level could be a step too far, but maybe having interoperable assets, things like avatars or other 3D assets that you can take from one Metaverse experience into another metaverse experience, regardless of whether it's in Unity or Epic or some other engine, or the web engines too. As you say, all the web engines are out there as well, 3JS and Babylon and others. If you can begin to take assets around, that's a level of interoperability that could be kind of a beachhead for bringing more connection between these disparate environments. But even there, I think a lot of the challenges are going to be business model challenges, as well as the technical and engineering challenges. Many of the leaders are, I think, going to find natural commercial pressures to not open up That's one leading environment where lots of people are generating user content. Do they want that content to be able to be portable to other environments? It's a non-trivial business question. 
So I'm fascinated to see the direction that the forum takes. Is it all going to be engineering-level interoperability, or is it going to be business-level discussions to figure out where the beachheads are where it makes sense for everyone to have some degree of interoperability, and what kind of interoperability that's going to take? That will be a very interesting discussion. I mean, standards like OpenXR kind of have it easy from the adoption point of view, because you have a bunch of hardware and great runtimes. It's an obvious win-win that everyone can tap into the OpenXR library of content and we don't have fragmentation. And that's why OpenXR is getting such wide adoption. At the asset format level of interoperability, it's less clear where the money flows if things open up and become interoperable. It's going to be a very interesting discussion.

[00:27:58.540] Kent Bye: Yeah, Martin, I'd love to hear any perspective from your front at IKEA, in terms of any specific limitations you've run into, things you can't do, like writing something once and distributing it everywhere, or how you navigate the real-time engine duopoly that we exist in right now. And whether it makes sense to build up standards to go beyond that, to have export paths from each of these game engines to the open web, or whether it makes sense to stick with native apps just to have the optimizations that are there. So yeah, I'd love to hear any reflections on those dynamics as you enter these broader discussions, given the problems that you're specifically trying to solve at IKEA.

[00:28:42.040] Martin Enthed: Starting with the Metaverse Standards Forum that we're talking about, my main thing around that one is to see to, or be part of seeing to, that the different standards organizations are not doing the same standard, or doing standards that don't connect. Because from what I've been thinking, at least, most of the standards will still happen in the standards organizations that already exist, not really in this Metaverse forum. The forum is to connect things. But then, to your question of what we are lacking or what we can't do: the first step is just finding a format where we can start storing the things that we want to show, the information we want to convey to the customers and the people outside. And we have the base for that, in glTF that we have now and USD and so on. And the reason why we started 3D Commerce inside Khronos is to look into render consistency. How do we know that a green sofa comes out as a green sofa in another viewer? How do we get the viewers to render the same? Like Neil was saying, they might not want to, because they want to be different; one wants to be better than the other. From the use case we are coming with, we want it to be the same. We want it to behave like in the real world, because that's what we are trying to portray with the 3D we have. So it depends on the use cases, of course. But then you can add on things like: how do we tell how far you can open a drawer? How do we tell where to put the shelves in a bookcase?
Like, how do we tell this so it doesn't only work inside our own tools that we have full control over, but can actually be pushed out in an ad or given to somebody making a game? How do we make that work so it doesn't have to be a special team doing it for every different thing we would like to push it out to? Because again, we are not earning money on this. We want people to see our things so they can see how well they perform in the virtual world, so they might buy them in the physical world. That's what we want to do.
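Martin's green-sofa question can be made concrete with a small, hedged sketch. Assuming you have the same region of a product rendered by two different viewers as lists of 8-bit sRGB pixels, one common way to ask whether the colors match is to convert both to CIELAB and average the CIE76 color difference (ΔE). The function names and the 2.0 tolerance are illustrative assumptions, not part of any Khronos 3D Commerce certification procedure.

```python
import math

# Hypothetical render-consistency check; names and threshold are assumptions.


def srgb_to_linear(c: float) -> float:
    """Undo the sRGB transfer curve; c is a channel value in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def srgb_to_lab(rgb: tuple[int, int, int]) -> tuple[float, float, float]:
    """Convert an 8-bit sRGB pixel to CIELAB using the D65 white point."""
    r, g, b = (srgb_to_linear(v / 255.0) for v in rgb)
    # Linear sRGB -> CIE XYZ (sRGB primaries, D65 white point)
    x = 0.4124 * r + 0.3576 * g + 0.1805 * b
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    z = 0.0193 * r + 0.1192 * g + 0.9505 * b
    xn, yn, zn = 0.95047, 1.0, 1.08883  # D65 reference white

    def f(t: float) -> float:
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


def delta_e76(p, q) -> float:
    """CIE76 color difference: Euclidean distance between two pixels in CIELAB."""
    return math.dist(srgb_to_lab(p), srgb_to_lab(q))


def renders_consistent(render_a, render_b, max_mean_delta_e: float = 2.0) -> bool:
    """True if two same-sized renders (lists of RGB tuples) differ, on average,
    by less than max_mean_delta_e. The 2.0 default is an assumed tolerance."""
    if not render_a or len(render_a) != len(render_b):
        raise ValueError("renders must be non-empty and the same size")
    total = sum(delta_e76(p, q) for p, q in zip(render_a, render_b))
    return total / len(render_a) < max_mean_delta_e


if __name__ == "__main__":
    sofa_viewer_a = [(34, 139, 34)] * 4   # "green sofa" pixels from viewer A
    sofa_viewer_b = [(36, 140, 33)] * 4   # nearly the same green from viewer B
    sofa_viewer_c = [(60, 160, 90)] * 4   # a visibly different green
    print(renders_consistent(sofa_viewer_a, sofa_viewer_b))  # True
    print(renders_consistent(sofa_viewer_a, sofa_viewer_c))  # False
```

A real comparison would also have to align camera, lighting, and tone mapping before comparing pixels; this sketch assumes that has already been done.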

[00:30:50.716] Neil Trevett: Yeah, and I agree with that. And I think your question is a good one, Kent, because it really speaks to one of the fundamental challenges you always have in creating open interoperability standards. It's vital to pick the right level of abstraction, because any successful open standard shouldn't depress the level of competition. It should encourage healthy competition between, for example, different runtimes, to use your example. With the right open standards, Unity and Epic and a bunch of other companies would be able to compete on the quality and performance of their engines. But access into those engines, if we can find the right level of abstraction, can maybe be standardized to remove the friction of content developers like Martin having to code stuff a million different times. Figuring out where that right level of abstraction should be is typically a non-trivial exercise. But any successful standard is the right level of abstraction at the right time. So if you can hit those crosshairs, and it's not always easy to do, that's normally a good start for a successful standard.

[00:32:10.377] Kent Bye: Yeah, I guess just to ground it in an IKEA example: when you buy a piece of furniture that you have to assemble, there's a set of instructions that has traditionally been printed on a piece of paper that you have to follow. But if that were standardized in some sort of spatialized format, then you could potentially deploy it automatically as an AR guide for assembling your furniture, on either a phone app or a head-mounted augmented reality display. And I guess the word that comes to mind is the archivability of information, in the way that the open web has been able to create a standard format that people can deliver data in, and people can access it in the future because it's an open standard. But with a lot of these game engines, both Unreal and Unity, they don't always have backwards compatibility, which means that if you get locked into a certain version, there's no guarantee that you'll be able to do an easy upgrade, and it would be more ideal to have an export to USD if you wanted to. For example, if you wanted to export a whole scene and all its information into Unreal Engine, and if they both implemented the same kind of scene graph, USD being an example of a composable scene graph, to go between those two different game engines, that would increase the interoperability between them, and potentially also allow other tools to give you a web-based version. There are trade-offs with all of these: being on the bleeding edge of technology often means that you're not supporting backwards compatibility, but once you get to a certain level of stability, then you have the open standards. And then there's the model of having extensions or modules added in, to have the ability to evolve and grow over time.
But for a lot of companies, I imagine, you want to not have to continually deal with the thrashing of outdated versions of spatial content that you have to re-engineer time and time again. And the benefit of open standards is that you do it once and then you can use it for the next 5, 10, 20, or 30 years.
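Kent's point about a composable scene graph can be illustrated with a deliberately simplified sketch, loosely in the spirit of USD sublayers: a base asset is authored once, and later layers add or override opinions without editing it. This is plain Python, not the OpenUSD (`pxr`) API, and real USD composition has many more rules (references, variants, payloads, and so on); the prim paths and attribute names are hypothetical.

```python
from typing import Any

# Hypothetical, simplified layer: prim path -> {attribute name -> value}.
# NOT the OpenUSD/pxr API; real USD composition has many more arc types.
Layer = dict[str, dict[str, Any]]


def compose(layers_strong_to_weak: list[Layer]) -> Layer:
    """Flatten a layer stack so the strongest layer's opinion wins per attribute."""
    result: Layer = {}
    for layer in reversed(layers_strong_to_weak):  # apply the weakest layer first
        for prim_path, attrs in layer.items():
            # Stronger layers are applied later, so their values overwrite.
            result.setdefault(prim_path, {}).update(attrs)
    return result


if __name__ == "__main__":
    # Authored once, e.g. by the manufacturer.
    base = {"/Sofa": {"color": "green", "width_mm": 2000}, "/Sofa/Cushion": {"count": 3}}
    # A later layer that overrides one attribute without touching the base layer.
    campaign_override = {"/Sofa": {"color": "blue"}}
    print(compose([campaign_override, base]))
    # {'/Sofa': {'color': 'blue', 'width_mm': 2000}, '/Sofa/Cushion': {'count': 3}}
```

The design choice being illustrated is that the base layer is never rewritten, which is what lets content authored once keep working while newer layers evolve on top of it.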

[00:34:16.540] Neil Trevett: Yeah, absolutely. And you're right. That's actually an often overlooked advantage of open standards under multi-company governance. People overlook it until it really bites them, and suddenly the format that they've invested in disappears. I mean, the most obvious recent example, of course, is Flash. So much Flash content just evaporated when the Flash Player disappeared. Whereas with any open standard under multi-company governance, it doesn't matter whether any one company's business focus changes. There's a thread of continuity through the benefit of having multiple companies continually investing. The players can change, but the thread of continuity can continue for a significant amount of time. And of course, even to the extreme, and actually glTF is about to do this, so I'm going to mention it: becoming an ISO standard is an extra level of diligence to make sure that, you know, Khronos could evaporate tomorrow and glTF would still be here. No, Khronos isn't going to evaporate tomorrow, please. But in 20 years' time, there might not be a Khronos, but glTF will still be here. And so Khronos is putting significant effort into making sure that every version of glTF is safely uploaded into ISO through the PAS process. And that's a guarantee that people can bank on.

[00:35:40.797] Kent Bye: Yeah. And Martin, I'd love to hear any other reflections on the use case I spelled out, and whether part of the motivation is the ability to write something once and have a kind of metaphorical responsive design. So depending on whatever output you're serving it to, whether it's the 2D web, AR, or virtual reality, you're able to display the information, and you have a spatial format that you can lock into and build on top of. Instead of, like you said, generating and wasting JPEGs, all of a sudden you have a spatial format that could be a lot more flexible for all these other use cases that you want to do in the future.

[00:36:16.268] Martin Enthed: Yeah, definitely. We are also a company who designs all our furniture ourselves. We sell things for a very long time. We have products that we made in 1978 that we still sell. So that means that if we create a 3D model today, I would like to load it into a very nice new experience made by somebody in 2032. That's really hard to do if we are not basing it on something that will still exist in 2032. So the thing we need is to figure out ways where we don't have to redo things, because every time you have to do that, the cost goes on to someone. And we are a company who tries to be for the people who don't have that much money. It shouldn't cost a fortune to get a nice home. That's the whole idea with us. That means that we want to make once, use forever, if it's possible. That's, of course, really hard. And most other companies want to do the same; it's not only us. And again, it's the information that is important: keeping the information. Then you can have different kinds of, if you say so, three-dimensional browsers. You can call them game engines or whatever you want to call them. They should be able to evolve, change, do fancy new things. But if they load an older thing, an older web page or an older 3D thing, it should still work. It's like the web browsers we have today, not like in the 1990s, when you had trouble and had to do different versions for every web browser. Today, you can almost do one version and it works similarly. And you can store small interactions in them with JavaScript. You can trigger on events. You can do all these things. What I think we need to do is the same, but for 3D. Somehow make it work. Then which three-dimensional web browser, if you want to use that analogy, is viewing and consuming it should not be a big problem for the content creator, in the best possible world.

[00:38:20.269] Kent Bye: Yeah, that makes a lot of sense, Martin. Neil, there's a slide here about communication, coordination, and cooperation that looks at remixing and distilling authoring in USD into transmission through glTF. But there are these higher layers: metadata; avatars, where for glTF there's VRM as an open standard, as an example; materials; audio properties; behaviors. Behaviors, I think, is an interesting one, because you're starting to talk about the code that's associated with things, and there are different programming languages: Unity uses C#, and Unreal Engine uses both Blueprints and C++. So I'd love to hear any reflections, as you start to have these conversations, on how you start to give dynamic behaviors to the scene graph and these objects, and whether that's an initial part of the conversation, or whether it's already so entrenched in the different platforms that there may not be a good way of abstracting it out, aside from writing a lot of that stuff in the code of each specific platform.

[00:39:27.060] Neil Trevett: Yeah, no, that's a great question. And that is one of the most topical questions for, I think, both USD and glTF right now. Both of those standards are right in the middle of, as I like to say, converting themselves from a 3D asset format to a metaverse asset format. A 3D asset format, going back, was just looking at the visual part of an asset: the geometry, the textures, the materials, the animations. It was just the visual aspect. But for a metaverse asset, you need more, exactly as you were just saying: behaviors and properties. So if I take a 3D asset from one metaverse experience to another and I drop it into a swimming pool, does it float or not? That should be an inherent property of that asset. And behaviors, too: as you say, should assets have inherent behaviors and interactions coded into the assets themselves, so that any runtime that understands that asset format can understand how that asset is going to behave and implement it in its own runtime? That goes back to what we were saying before: asset interoperability might be a good beachhead, a way of getting interoperability, because the runtimes can be completely different. As long as they agree on what the behaviors and properties encoded in the asset formats are, and they know how to process them, you can get consistent behavior without forcing everyone onto the same runtime. So you get the competition that we were talking about. So this is a very active area in both USD and glTF. I can talk about glTF: I was in the meeting this morning, and there's a lot of discussion around how much complexity you put in. Because in any design exercise, of course, there's a dynamic tension. If you make it more complex, you can do more. If you make it simpler, you can do less, but maybe it's going to be easier to deploy pervasively. So where do you want to land on that spectrum?
And we have some very good contributions coming in from many of the members. And that's precisely the discussion we're having right now. Do you want to have relatively simple action lists? So you can do these five things with this asset, and this is what happens if you get close to it or push it or touch it. Or do you want to go all the way up to Blueprints and programming languages in your assets? It's an interesting discussion. Personally, I'm a big believer in shipping minimum viable products quickly and then evolving, rather than trying to get something too complicated before you ship and get experience. So I'm hoping that we can find a path through here where we can ship something relatively on the simpler end of that scale that maybe satisfies 70% of the use cases we know about today, and isn't too complex, because that's going to let us roll it out faster across all of the engines in the industry. And then once it's proven that we need more, we can go towards more of a Blueprint-type structure. But that's complex, and it puts a lot of onus on all of the engine vendors to implement pretty complex machinery. So I'm hoping we can build it out gradually rather than going for a big bang. But that is exactly what the working groups are discussing right now.
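Neil's "simple action list" end of the spectrum, together with his floating-in-a-pool property and Martin's earlier drawer example, can be sketched as data that travels with the asset and is interpreted by each runtime in its own way. Everything below is hypothetical: the `EXAMPLE_behaviors` extension name, the trigger and action names, and the density-based floating test are assumptions for illustration, not part of glTF, USD, or anything the working groups have published.

```python
from dataclasses import dataclass, field

# Hypothetical asset: "EXAMPLE_behaviors" is an invented extension name, and the
# trigger/action vocabulary is illustrative only.
asset = {
    "asset": {"version": "2.0"},
    "nodes": [{
        "name": "drawer",
        "extensions": {"EXAMPLE_behaviors": {
            "properties": {"density_kg_m3": 500.0},  # intrinsic physical property
            "actions": [
                {"trigger": "touch", "action": "slide", "axis": [0, 0, 1], "max_m": 0.45},
                {"trigger": "proximity", "action": "highlight", "radius_m": 1.0},
            ],
        }},
    }],
}

WATER_DENSITY_KG_M3 = 1000.0


@dataclass
class Runtime:
    """Stand-in for one engine; each engine maps declared actions to its own code."""
    log: list = field(default_factory=list)

    def behaviors(self, node) -> dict:
        return node.get("extensions", {}).get("EXAMPLE_behaviors", {})

    def floats(self, node) -> bool:
        # Simplification: a solid, uniform object floats if it is less dense than water.
        density = self.behaviors(node).get("properties", {}).get("density_kg_m3")
        return density is not None and density < WATER_DENSITY_KG_M3

    def dispatch(self, node, trigger: str) -> None:
        """Run every declared action whose trigger matches; skip unknown actions."""
        for entry in self.behaviors(node).get("actions", []):
            if entry["trigger"] != trigger:
                continue
            handler = getattr(self, "do_" + entry["action"], None)
            if handler is None:
                self.log.append(f"skipped unsupported action {entry['action']!r}")
            else:
                handler(node, entry)

    def do_slide(self, node, entry) -> None:
        self.log.append(f"{node['name']}: slide up to {entry['max_m']} m along {entry['axis']}")

    def do_highlight(self, node, entry) -> None:
        self.log.append(f"{node['name']}: highlight within {entry['radius_m']} m")


if __name__ == "__main__":
    runtime = Runtime()
    drawer = asset["nodes"][0]
    runtime.dispatch(drawer, "touch")
    print(runtime.log)             # ['drawer: slide up to 0.45 m along [0, 0, 1]']
    print(runtime.floats(drawer))  # True
```

The asset only declares what should happen; the `Runtime` class stands in for Unity, Unreal, three.js, or any other engine, each free to implement `slide` or `highlight` with its own code and to skip actions it does not support.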

[00:43:01.873] Kent Bye: You know, one of the things that I noticed in the list of standards development organizations is that there are a lot of geospatial types of organizations, whether it's the Open Geospatial Consortium or the Spatial Web Foundation, one of whose founders is Gabriel René, with whom I have an unpublished interview, digging into more of the Internet of Things and digital twins. So that starts to bring in more architectural design, or creating maps of physical spaces that may have virtual layers or virtual information on top of them. And then there's Open AR Cloud, which is all about connecting the physical and digital worlds. Each of these has XR components, but I'd love to hear from each of you how geospatial information may start to be fed into this, or how the wider Spatial Web Foundation, IoT, and digital twins start to fit into these larger discussions as you mix them in with the existing Khronos standards, W3C, and other scene graph work.

[00:44:01.578] Martin Enthed: My philosophical thinking here is that I don't see any difference between the metaverse and the universe, the one we have been in all along. It's only different versions. And as I said before, it's only really an interpretation of how a human sees it. But of course, a lot of the things that we are doing are connected to a space. The products we sell in the physical world have a place in space. And if you're planning before you buy, that virtual thing will have a space in the physical world, in the space where it's going to be when you have bought it, and so on. So there are so many things that connect, at least in our world, between the purely virtual, the augmented, and the real world. All of this needs to work together. I don't see one where you do only the one or only the other. It's connected. So that means that all of these things need to connect somehow. And we also sell IoT products at IKEA: we have lamps, we have blinds that go up and down automatically, and so on. They have a physical place in the real world, and that means they will need to communicate with the AR space, so we know where they are. So all of these fit together. If you think purely virtual reality, then I can understand that they don't really fit. But for me, it connects.

[00:45:24.419] Neil Trevett: Yeah, it's interesting, going back to the file formats, which again, in many respects, are kind of the beachhead for many types of interoperability; they do connect many of these geospatial initiatives. I mean, glTF from Khronos wasn't originally designed for geospatial by any means, but people layer on top. So the Open Geospatial Consortium now has 3D Tiles, which is basically geospatial management of data, all based on glTF. Then there's some of the work that the Spatial Web Foundation is doing. They're doing a lot of awesome work that is completely separate from anything Khronos is an expert in: semantics and spatial awareness. But eventually, in many of their use cases, they want to get to a leaf node on their semantic tree and they need to have a 3D model. And they selected glTF because, why not? It's a 3D model. They don't need to solve that whole problem again; they can just leverage the work that's already been done. And that's a good web of interconnectedness between all of these standards organizations. And it's not just into glTF. For example, Open AR Cloud and the OGC have done awesome work on a standard called GeoPose, which sounds very simple in concept. It says where you are on the Earth, in terms of direction, position, and elevation. Sounds easy. It's super complex, particularly when you want to start animating and tracking stuff in real time. And with GeoPose, they've had some awesome expertise applied to really deep diving into that problem and solving the whole issue, with nuances I would never have imagined. And that's awesome too, because now glTF and Khronos and OGC can all start referring to that. So it brings us back to the overall picture: the value of bringing all of these different standards organizations together. We all have different expertise, and very often we don't realize what the other groups are working on.
And if we can save each other work and increase the strength of the links between all of these various initiatives, that just helps the wavefront of metaverse technologies move forward faster through cooperation. It's a win-win-win for everyone.
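To give a flavor of why "where are you on the Earth" is harder than it sounds, here is a minimal sketch of one small piece of the math: converting a latitude/longitude/ellipsoidal-height position on the WGS84 ellipsoid to Earth-centered, Earth-fixed (ECEF) meters, so two geolocated objects can be compared. The record is only loosely modeled on the idea of a position plus yaw/pitch/roll; the class and field names are assumptions, so check the OGC GeoPose specification for the actual encodings, reference frames, and rotation conventions, none of which this sketch models.

```python
import math
from dataclasses import dataclass

# WGS84 ellipsoid constants
WGS84_A = 6378137.0                  # semi-major axis in meters
WGS84_F = 1 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2 - WGS84_F)   # first eccentricity squared


@dataclass
class GeoPoseLike:
    """Hypothetical record loosely modeled on a position + yaw/pitch/roll pose."""
    lat: float          # geodetic latitude, degrees
    lon: float          # longitude, degrees
    h: float            # height above the WGS84 ellipsoid, meters
    yaw: float = 0.0    # orientation angles in degrees; their frame and rotation
    pitch: float = 0.0  # order are defined by the GeoPose spec and are not
    roll: float = 0.0   # used in this sketch

    def to_ecef(self) -> tuple[float, float, float]:
        """Geodetic (lat, lon, h) -> Earth-centered, Earth-fixed x, y, z in meters."""
        phi, lam = math.radians(self.lat), math.radians(self.lon)
        n = WGS84_A / math.sqrt(1 - WGS84_E2 * math.sin(phi) ** 2)  # prime vertical radius
        x = (n + self.h) * math.cos(phi) * math.cos(lam)
        y = (n + self.h) * math.cos(phi) * math.sin(lam)
        z = (n * (1 - WGS84_E2) + self.h) * math.sin(phi)
        return (x, y, z)


def straight_line_distance_m(a: GeoPoseLike, b: GeoPoseLike) -> float:
    """Straight-line (chord) distance between two poses' positions; ignores orientation."""
    return math.dist(a.to_ecef(), b.to_ecef())


if __name__ == "__main__":
    # Hypothetical smart lamp and phone at the same spot, 1.2 m apart vertically.
    lamp = GeoPoseLike(lat=59.3293, lon=18.0686, h=25.0)
    phone = GeoPoseLike(lat=59.3293, lon=18.0686, h=26.2)
    print(round(straight_line_distance_m(lamp, phone), 3))  # 1.2
```

Even this static conversion needs an ellipsoid model; real-time tracking adds orientation frames, time, and accuracy, which is the complexity Neil describes.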

[00:47:51.687] Kent Bye: Yeah, one other thing that jumps out to me as I'm looking through these slides is that I hadn't realized that the Khronos Group has a whole 3D Commerce effort as well. That seems very relevant here, especially because when I look at a lot of the applications on these XR devices, you don't see a lot of commerce, mostly because there's usually a 30% tax. So I would imagine there's going to be something browser-based that would start to facilitate open commerce in a way that doesn't follow the existing rules of the mobile phone model, where when something is sold in a native app, a commission cut is taken by either iOS or Android. And if we move into a future that has the same model, then Meta and Apple and any other XR device creator would potentially have the option of whether or not to take these cuts. So it seems like there's a role for either an open standard or OpenXR here. I'd love to hear a little bit more context on 3D Commerce, and then we'll get some final thoughts.

[00:48:50.959] Neil Trevett: Sure. Martin, that's you.

[00:48:54.021] Martin Enthed: No, it's not. I was very much part of starting it, though. Yes, for sure. The reasoning behind 3D Commerce was to get people like us in the retail space together to put our needs in front of the tech people, so they understand what's most important for us. And the first thing we started doing was looking into, like I said before, render consistency: if we make an asset, does it look the same on the different platforms, and how do we do certification? And then easy things, or rather things that are not easy to agree on but are easy to do technically, like what metadata you put into an asset and what we call that metadata, so everybody knows and can build tools on top of it. That was the short version of the reasoning behind it, because everybody wants to use this 3D thing, as it's easier for people to understand what they see if they can spin it around, do whatever they want with it, and place it in a room. That's really the use case and the reasoning behind 3D Commerce.
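Martin's point about agreeing on what metadata goes into an asset, and what it is called, can be sketched with glTF 2.0's `extras` property, which the specification provides for application-specific data on glTF objects. The key names and required types below (`productId`, `name`, `dimensions_mm`, `color_name`) are hypothetical and not taken from any 3D Commerce guideline; the point is only that once a group agrees on names, any tool can check for them.

```python
# Hypothetical agreed vocabulary; not from any published 3D Commerce guideline.
REQUIRED_PRODUCT_KEYS = {"productId": str, "name": str, "dimensions_mm": list, "color_name": str}


def add_product_metadata(gltf: dict, metadata: dict) -> dict:
    """Store product metadata in the glTF `asset.extras` object (modifies gltf in place)."""
    asset = gltf.setdefault("asset", {"version": "2.0"})
    asset.setdefault("extras", {}).update(metadata)
    return gltf


def validate_product_metadata(gltf: dict) -> list[str]:
    """Return a list of problems; an empty list means every agreed key is present."""
    extras = gltf.get("asset", {}).get("extras", {})
    problems = []
    for key, expected_type in REQUIRED_PRODUCT_KEYS.items():
        if key not in extras:
            problems.append(f"missing {key}")
        elif not isinstance(extras[key], expected_type):
            problems.append(f"{key} should be {expected_type.__name__}")
    return problems


if __name__ == "__main__":
    doc = add_product_metadata(
        {"asset": {"version": "2.0"}},
        {"productId": "SOFA-0001", "name": "Example sofa",
         "dimensions_mm": [2000, 900, 800], "color_name": "green"},
    )
    print(validate_product_metadata(doc))  # []
    print(validate_product_metadata({"asset": {"version": "2.0"}})[0])  # missing productId
```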

[00:50:03.492] Neil Trevett: And I think the 3D Commerce initiative at Khronos has really been awesome, because it sets a template that I think we will find gets repeated in different verticals. glTF tries to be quite general purpose. But of course, every vertical market, and 3D Commerce is definitely one of the most prominent early beachhead use cases for glTF, does have specific needs, and the way it gets deployed has specific nuances. A cooperative group like the 3D Commerce group inside Khronos can create the tools, create the guidelines, help create the glTF extensions that they need, and generally just move that whole opportunity forward. And I think we're going to find other verticals. Actually, you've already mentioned one of them: geospatial is, I think, probably going to be the next, where some of the geospatial applications use point clouds and others use very large polygon models. So streaming becomes important, and so does interaction with 3D Tiles-like standards from organizations like OGC. That's another whole set of vertically focused actions. It's building on the foundation of the glTF standard, but you can do real positive work if you're focused on that particular use case. And I think there are going to be others. Medical: it's ironic, because I caught COVID at AWE, but lots of people were coming to talk to me about medical. And that use case was very fascinating. Synchronicity: suddenly five people come and say, we want to use glTF, but we have to have data privacy, so encryption at the asset level is make or break for us. Can you help? Actually, one of the guys said, well, the problem is there's a bunch of standards organizations that are very hard to talk to, like HL7; they do good stuff, but is there a way that we can bring together all these standards organizations?
And so we can actually have cooperation and discussion on how we can solve this problem for 3D asset formats. I go, hmm. Yes, we should have a forum. We should have a Metaverse Standards Forum. That was actually a perfect example of a domain that we haven't spent a lot of time on yet, and they're finding exactly the same problem: there's a bunch of great standards organizations doing great work, but there's not enough communication. And bringing together standards organizations that don't normally talk to each other is, I think, really going to trigger a whole new round of opportunities to move this whole metaverse thing forward.

[00:52:35.153] Kent Bye: Yeah, one quick follow-up on the standards development organizations, because as I look through the list, I don't know whether the Academy Software Foundation, for visual effects and animation, has already been part of the discussion in the context of USD, or with all the different physically based rendering and shaders and whatnot. Has that already been part of the conversation around glTF, or are these newcomers adding new things to the discussion that haven't been integrated yet?

[00:53:02.062] Neil Trevett: Yeah, no, ASWF was one of the first organizations that was interested in the forum. And yes, they do a lot of good stuff, not just USD; they do a lot of the good open source work around USD. And yes, absolutely, they're very much going to be, I hope, involved in the whole 3D asset format discussions. It'll be great to have their input.

[00:53:24.327] Kent Bye: Okay, well, I think we covered a lot of the Metaverse Standards Forum. I'd love to hear any final thoughts on what you think the ultimate potential of all this XR and spatial computing is, and, if we get all these standards together through the Metaverse Standards Forum and have this interoperability, what that might be able to enable.

[00:53:43.308] Neil Trevett: Well, I'm motivated and excited about the forum. I'm interested to see how it evolves, but the potential is there, I think, to make a real difference. If we can use this opportunity that these companies and organizations have given us to come together, we need to make sure we don't squander it, because it's not just the engineering interoperability that's important. We need to have open standards at the right time. And there's some urgency, because, as I think we've talked about before, the way to avoid dystopia is to have an open and inclusive metaverse. And if we don't have the right standards quickly enough, at the right time when they're needed, there's the danger that proprietary technologies are going to get baked in at fundamental levels of the whole metaverse stack, whatever that ends up being. So it's not just an engineering responsibility that standards organizations have. It is a responsibility to ensure that the metaverse can be the open and accessible platform that we all hope it can be, and reach its full potential.

[00:54:53.458] Martin Enthed: I think you said almost everything, Neil, but the thing I just want to add is that this is just the start. There are so many things that we need to do. And if we can do it together, we won't have overlaps, there won't be gaps, and it will be compatible, and that will make it go faster. But on the other hand, there are so many things to do in the next 10, 15 years, so don't expect everything to be done in a year. It will take time. But as you say, Neil, it needs to be the important Lego pieces first, and then building on that, and then building on that. And if you get the Lego pieces you put down first right, hopefully you don't have to redo them and shake the whole building. That's the whole idea with this.

[00:55:38.873] Kent Bye: Awesome. Is there anything else that's left unsaid that you'd like to say to the broader immersive community?

[00:55:43.636] Neil Trevett: Keep doing what you're doing. You're doing awesome work.

[00:55:48.719] Martin Enthed: Agree. And test and try and fail and succeed. That's the only way to move things forward.

[00:55:56.313] Kent Bye: Awesome. Well, Neil and Martin, thanks so much for joining me today to give me a little bit of a sneak peek at what's coming with the Metaverse Standards Forum. I think it's really exciting to see all these companies working together. And like you said, Neil, there are probably a lot of fundamental business model things to sort out as we move forward, but hopefully we'll start to see some common points of collaboration among all these different players that make it a little bit easier to have open standards and formats, so that you can either interoperate and take your assets between these different game engines, or at least have other ways of distributing them as well. So I'm very curious to see how this all plays out. There are a lot of really big names and big players here, so you've certainly gathered a lot of the key stakeholders, and they're committed at this point. I'm really excited to see where this goes. So thanks again for taking the time and for putting this all together, and I look forward to seeing where it all goes.

Great. Thank you for the opportunity, Kent. Good to see you.

So that was Neil Trevett. He's the president of the Khronos Group and the vice president of developer ecosystems at NVIDIA, and he was talking about the Metaverse Standards Forum, as well as Martin Enthed, who's the innovation manager at IKEA working with spatial computing, as well as the vice president of the Khronos Group. So I have a number of different takeaways about this interview. First of all, Neil is saying that they need to have the right open standards at the right time. It seems like it's the right time to have this big consortium of different companies, as well as open standards organizations, come together to start to think about how they can better cooperate and collaborate on this vision of building the open metaverse.
We have everything from the Khronos Group to the World Wide Web Consortium to the Open Geospatial Consortium to Open AR Cloud, the Spatial Web Foundation, the Academy Software Foundation, and many others coming together and starting to see which things they have unique expertise in and need to go off and work on, and which things they need to collaborate on across organizations to come up with higher-level open standards. To me, the big news is that both Unity and Unreal Engine are part of this conversation. They're some of the biggest players in terms of producing spatial content. There are others as well, like three.js, Babylon.js, and other companies that have their own engines, but in terms of the big walled-garden proprietary engines, those are the big companies that you want to see as part of a conversation like this. So what is the outcome of that? Is there going to be some sort of standard that's supported between the two of them, say if you wanted to export a Unity project and import it into Unreal Engine or vice versa, or a standard format that either one of them could take as input? There are the layers of USD and glTF; those are the baselines in terms of the assets that they're starting with. And then the question is, to what degree do you start to add this other metadata and these behaviors? Each of these platforms has its own method of rendering out all this content and determining all the behaviors.
And so Neil's not expecting that there's going to be a bit-level identical experience on the other end, essentially making Unreal Engine and Unity equivalent in what they're outputting. I think there are so many differences in how they're building their engines that that's pretty much off the table. I think the big question is whether or not you're going to be able to input things into each of these engines and get a similar output, to have the kind of interoperability where you could seamlessly go between them. And so that's something to watch as things go on. Neil did say that there are going to be some engineering challenges, but there may also be business-level decisions about the degree to which some of these companies are going to open up and make it easier for content to move seamlessly between these different engines. Neil said that a successful open standard will encourage more competition, so it could benefit each of these different organizations if there's an interoperability standard between them. So that's one of the big things to keep an eye on. Another thing is, to what degree is Apple going to be a part of these conversations? They haven't traditionally been a part of OpenXR or these other discussions; they tend to go off and do their own thing. But hopefully, if there's enough cooperation and collaboration amongst all these different entities, they'll be able to start to build the foundations of standards that make it easier for companies like IKEA to put out content in a way where they don't have to worry as much about whether they're going to dedicate themselves to either Unity or Unreal Engine in order to render out this spatial content. Martin is basically saying, hey, I just want to have consistency in the rendering and be able to use the same format, because for IKEA, the main product is a physical product.
Their goal isn't to create these virtual representations; it's mostly to make it easier to transmit information in a spatial context to their customers. And so they just need the open standards to be able to do that. It's interesting to hear both the very specific use case of IKEA and the perspectives of all the other collaborators in the Metaverse Standards Forum, since more and more of those specific use cases are going to drive these minimum viable beachheads of interoperability, as Neil said again and again. The other thing to note, I think, is that glTF is becoming a vehicle for lots of different interoperability, in terms of geospatial and other areas, as it gets integrated into other existing open standards. As that happens, glTF as a format becomes more and more powerful, with extensions for things like geospatial information, different shaders, animations, and all this other information. There are obviously a lot of different aspects to the behaviors and the metadata, as well as the code for what happens to these objects once they're in these game engines. Neil was explaining how that's going to be a big point of discussion in terms of how you start to add those layers of behaviors. Is it going to be on the objects, or is there another open standard that needs to be there to carry additional information in terms of the code? In Unity, you have C# scripts, and in Unreal Engine you have Blueprints and C++ code, so each game engine has its own way. You know, three.js and Babylon.js have their own ways of integrating with JavaScript as a language. And so what is going to be the interchange for those behaviors, and how do you describe them? Is it going to be more of a high-level declarative language that each of the different engines then implements in its own language?
I mean, it's sort of an open question as to how that's actually going to play out at this point, and that's going to be one of the topics of discussion for the Metaverse Standards Forum, so stay tuned. A lot of the work within the Khronos Group and all these different organizations happens behind closed doors. Eventually, they come back, usually around some of the big conferences like SIGGRAPH or GDC, with announcements of what the latest interoperability standards may be. So it'll be very interesting to see what kinds of extensions start to happen with formats like glTF and USD, and, you know, there are avatars with VRM as an extension building on top of glTF. I'm very curious to see how this conversation continues to develop over time. There's a lot of hype around the metaverse, but I think this is actually a critical mass of companies that can make a difference in terms of what the metaverse actually ends up being. So keep an eye on the Khronos Group and a lot of these discussions at the Metaverse Standards Forum.

So that's all I have for today, and I just wanted to thank you for listening to the Voices of VR podcast. If you enjoy the podcast, then please do spread the word, tell your friends, and consider becoming a member of the Patreon. This is a listener-supported podcast, and I do rely upon donations from people like yourself in order to continue to bring this coverage. So you can become a member and donate today at patreon.com/voicesofvr. Thanks for listening.