I’ve been an executive committee member of the IEEE Global Initiative on the Ethics of Extended Reality for the past six months, and we had our official kickoff meeting on Friday, February 5th. The goal of this effort is to produce a white paper that helps augmented reality and virtual reality designers and developers understand the landscape of ethical & moral dilemmas when designing for XR, and that provides a framework with some guidance on applied XR ethics & ethically-aligned design. There will also be some technology and privacy policy recommendations for government policymakers and technology policy lawyers.

I’ve volunteered to chair the white paper committee, so I presented a proposed timeline and an initial survey of the landscape of ethical and moral dilemmas of XR, based upon my previous work on my XR Ethics Manifesto. Here’s a video of my presentation from that initial meeting on February 5th, 2021:

Here’s my initial survey of the landscape of ethical issues. This is merely a starting point, and how we end up breaking this up into chapters is very much open to deliberation by this community-driven effort.

There’s going to be a lot that’s not included here. There will be generalized principles that are universal across all contexts, as well as other lenses and perspectives to include. I’m going to attempt to be as inclusive and pluralistic as I can in how we structure this white paper on XR Ethics.

To get more involved, you can sign up here to stay informed over the whole time period. If you want to dive in and help collaborate on one of the subcommittees, send an email to xr-ethics-chair@ieee.org with the subject line “Add Me to iMeet Platform.”

The full slide deck can also be found here.

We’re getting our collaboration platform set up, and I look forward to working on this project with the community. If you have any questions, then feel free to ping me @kentbye on Twitter.

LISTEN TO THIS EPISODE OF THE VOICES OF VR PODCAST

This is a listener-supported podcast through the Voices of VR Patreon.

Music: Fatality

Rough Transcript

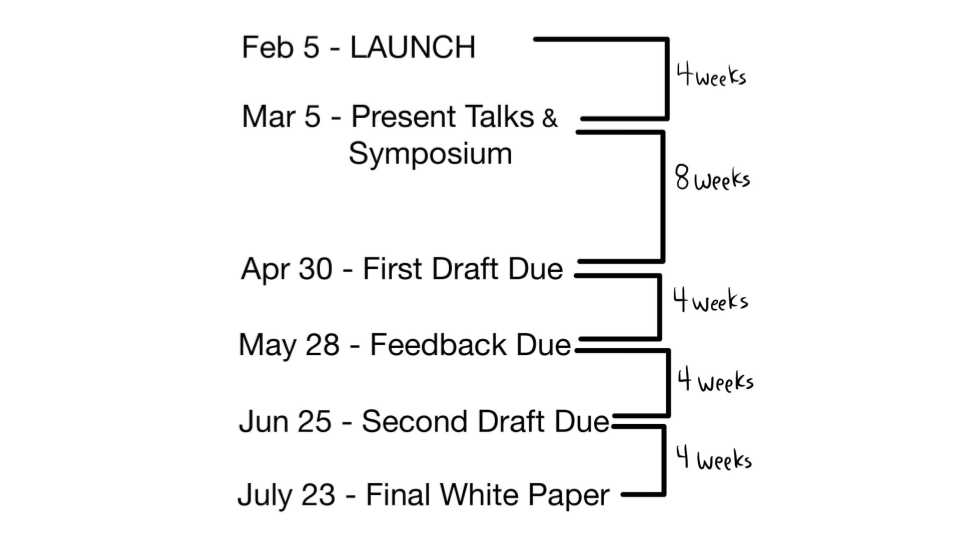

[00:00:05.452] Kent Bye: The Voices of VR Podcast. Hello, my name is Kent Bye, and welcome to The Voices of VR Podcast. So there's the launch of a brand new XR ethics initiative by the IEEE, and I've been involved with this effort for the last six months on the executive committee, and I volunteered to head up the XR Ethics White Paper Committee. And so over the next six months, we're going to be trying to bring together all sorts of different perspectives from academia, industry, and policymakers to try to produce this white paper that is going to be presented to the wider XR community to not only bring more principles of ethical design into the technology itself, but to follow on from that, create some policy recommendations that are going to be sent up to the policymakers to see what kind of new things need to be defined around biometrically inferred data and how to handle some of the sensitive and intimate information that comes out of virtual reality technologies. So on Friday, February 5th, 2021, there was the kickoff meeting and there was a presentation that I gave giving like a brief overview of what I'm proposing as a starting point. Almost think of it as like a Wikipedia stub article, where this is like an initial framework based on some of the surveying that I've done on the landscape of the ethical and moral dilemmas of XR. But really, the next step is going to be to have folks come and get involved and participate within this deliberative process. There's an email address that's given at the end to be able to send off to get involved and be added to this platform. And feel free to also reach out to me and I'll be kind of the cat herder in some sense of trying to manage this whole process of creating this white paper over the next six months. So I wanted to send this out on my podcast, just in case you haven't heard of this so far, to let you know that this effort is going on and it's now properly getting underway.
I should also mention that if you want to see the slides of this talk, this is a talk that actually has a lot of slides and a lot more additional information. You can either download the slides from SlideShare and listen along or watch the YouTube video. But I wanted to also just get it out to my podcast listeners because I think it sort of stands on its own. But if you do want to look at the slides, then you can get even more additional information. And I just think it's something really important for me to help facilitate, with me being in the industry, and to get more people involved in creating a document that can help do this survey and reflect to the larger community some of the big ethical and moral dilemmas that we face and perhaps some strategies for how to face them. So that's what we're covering on today's episode of the Voices of VR podcast. So this talk that I gave happened on Friday, February 5th, 2021. So with that, let's go ahead and dive right in. So hello, everybody. My name is Kent Bye, and thank you so much for being here. This is the IEEE XR Ethics. It's a global initiative. It's an industry connection group where the next two years is what we have as our remit. But over the next six months or so, I'm hoping that we could start to quickly iterate on creating what's essentially a white paper about XR ethics. So this is a topic which I've been looking at on the Voices of VR. I've recorded about 1,500 oral history interviews, and since 2016 I have been tracking the issue of XR ethics as something that has been emergent within the community. And the challenge, I'd say, and this is something that Thomas Metzinger has said, is that there's a pacing gap, meaning that the technology is moving so quickly that our conceptual frameworks for understanding it, both for ethical design and for technology policy, are not keeping up. So with this gap, the faster the technology progresses, the more that we have to come together.
And I think this is what this group is. It's academia and industry and policymakers coming together and saying, hey, let's do an assessment of what the landscape of the ethical and moral dilemmas is within XR. Let's consolidate that within a white paper. And then that white paper will be feeding into further policy recommendations. So just a little bit more context on my own journey in this topic. First, to just give a brief glimpse of the landscape: you have biometric data, which is going to be all sorts of EEG, ECG, EMG, your eye tracking data, galvanic skin response. All of this information is going to be fed into XR. What happens to that? Volumetric privacy is going to be a concern. We have private property. Can you consent? The Holocaust Museum and Pokemon Go was an issue that came up. So that's an ethical issue that fits in here. We have virtual violence and the impact of virtual violence. We have the capability of healing from PTSD, but also potentially causing PTSD as well. Virtual harassment is a big issue. Algorithmic bias, which is something that's focused on AI, but certainly relevant here to these virtual worlds. And accessibility. So this is just a brief look at some of that, and I'll be diving into more of a comprehensive look at that landscape. But first, I wanted to further track my evolution as I was talking about these issues. At Laval Virtual in 2019, there was a think tank that I participated in. One of the issues that we focused in on was XR ethics. We did this big brainstorm. And to a certain degree, we'll be replicating this process here through whatever technology platform we end up having. I've done some initial surveying of the landscape, but there's always stuff that I didn't have. And that's why you are here. Because you may have specific puzzle pieces and concerns that need to be included within this overall process.
But once you sort of brainstorm, then it's like, how do you organize it? And that's been a part of my work, trying to break these up into these different domains of human experience, which could also be considered contexts, which we'll be diving into here. So at Laval Virtual, we ended up producing this as our artifact, but this was our initial take. There's obviously way more stuff that we didn't include in this initial take. And so this catalyzed me into like a nine month process of digging into this more. I went to Augmented World Expo and gave a talk about the ethical and moral dilemmas of mixed reality. Then in October of 2019, I gave this XR Ethics Manifesto. And a lot of this talk that I'll be going through will be just giving a brief overview of that. And the intention is to say, this is just the way that I'm kind of breaking up these issues. So I wanted to start with Lawrence Lessig's Pathetic Dot Theory. There's like four major dials you can turn to shift culture. In this case, we're talking about XR and XR technologies. There's the market dynamics, there's the underlying technological architecture and the code of the technology, there's the culture, and then there's the law or the policy. All four of these things are happening at the same time and they're interfacing in different ways. And you can kind of think about what we're doing here as producing an XR ethics white paper. So we are the culture, we're the academics, we're industry, we're coming together to produce an educational piece in this white paper. And that white paper will hopefully help inform the rest of the industry as they start to design their architectures and their code so that it's done in an ethical way.
Eventually, from this white paper, we're going to be coming up with policy recommendations to feed into the government, so that if there are specific regulations that need to happen, then that will be essentially our second phase after we do this first six-month process of the white paper. Okay, so this is the basic proposed timeline, and it'll really depend on who's participating and if we get momentum and what all the steps are on the way. But roughly, about four weeks from now, I'm proposing the first step is to get together and present some talks in a symposium. It'll be a little bit of a brainstorming process and people will step up in these different domains and say, I want to talk about this. I want to talk about this. And then how we break up into subcommittees and groups will determine those chapters and how those talks break out. And then like basically eight weeks after that, the end of April, we'll have a first draft. Then we'll have like a four week process of getting feedback and then integrating that feedback and then producing a second draft. And then finally, about six months from now or so, we'll have a final white paper, so the end of July. That's sort of like, if all goes well and we continue to move forward, this is what I hope that we'll be able to do with this process. And it really depends on everybody that's here jumping in and participating and chipping in in whatever way that you can. So context is a big aspect here. This is how I'm breaking things up, and this is not meant to do a deep, deep dive, but just to say, like, in Helen Nissenbaum's Privacy in Context, a lot of privacy has a contextual dimension. Identity also has a contextual dimension. If you do a search for XR ethics, you'll come up with Michael Madary and Thomas Metzinger's piece, Real Virtuality: A Code of Ethical Conduct. And there's a whole section there about how important context is in XR and these environments.
And so a lot of what I'll be presenting here is my taxonomy of these different contexts. So just to think about the difference between AR and VR when it comes to context, when you go into VR, you're in an existing context, but you're switching into a completely new context. In AR, you're in a center of gravity of whatever your existing context is, and you're overlaying different layers of context on top of that, but you're not really completely shifting. You're still in that center of gravity of that context. There's lots of different ranges of context from our identity, economy, our values, language, education. Basically, as you start to come up with all these different dimensions of context, I start to map these out into, okay, what's the public? What's private contexts? What are different dimensions of yourself and other? So these are two axes to map these out. And one of the things that I had done is just go through a lot of these different papers and then just do a survey of all the different aspects and issues that come up. And this brainstorming process will be taking something like this and having sticky notes and then basically trying to construct from talks and then eventually the white paper. There's been some existing work, but it's not complete. You know, there's gaps here. You can see it's not comprehensive. And so some of these pioneering pieces, they do a great job of doing the initial groundwork, but there's more research that's done since this came out in 2016. And everybody here is going to have specific expertise that they want to potentially contribute to that. And then there's the whole AI ethically aligned design. You could do a similar thing and say, well, there's actually similar insights that we can get from these other papers that IEEE has produced. I should say that it's sort of unknown as to whether or not we're going to end up using this taxonomy of these different contexts for how we end up structuring the white paper. 
Again, that'll really be up to the community. I'm proposing this as one possible structure, but it may end up being like, hey, we should focus on agency and embodiment and these other things that cut across all of these different contexts. You know, there are general principles that will be universal to every context. And so there'll be universal principles, but also principles specific to each context as we dive into it. So ultimately, I think it would be helpful to say, if you're in education, here's some ethical principles you should follow. Or if you're in medicine, here's the ethical principles. And a part of this process is to bring all of those contexts together to learn from each other, to say what are the universal aspects that we need to know about our perception across all of these contexts. And there may be things that are unique to those contexts and we can decide what the outputs are eventually. But to start off, we're going to do like an initial survey, and another iteration may be to really do a deep dive and to say, you know, there's a lot of stuff here. It's really overwhelming. And if you're only focused on this specific industry vertical, then here's a very niche condensed version of what you need to know. So that's another potential outcome. But I think the first draft is just going to be trying to be a one-stop shop where we have all this different information coming together. So this is sort of the taxonomy I'm proposing. I'm going to be walking through each of these just to give you a bit of a flavor. And I think part of the intention here is that as you're listening, you could say, oh, hey, you know, I have something to say about that, or, you know, maybe that's a group that I want to join. Maybe there'll be some of these where there's not a critical mass that merits a whole chapter and maybe they get combined, because often as I read through some of these, these contexts end up getting blurred together.
Usually as I read through a lot of these, a lot of these different areas are represented. So I'll just quickly walk through each of these and we'll get into the next steps at the end. So the first one is self, biometric data, identity. This is the big part of virtual reality, that it's modulating your perceptual senses, and what does that mean for your identity and all this biometric data? So you have your identity expression and avatar representations. You have the biometric data that's coming off of your body. You have the ability to hack your consciousness and to start to substitute different senses. You know, you have self-sovereign identity. And in my XR Ethics Manifesto, I sort of go through, that's a whole half hour talk where I list each of these different issues. I don't know if I'll be comprehensively covering all these, but when I look through Michael Madary and Thomas Metzinger's piece, the majority of that piece is research that would be in this section here. So this is a good chance to kind of do a survey of some of the core affordances of VR. Okay, then we have like resources, money, values. So this gets into stuff like economies and what's the business model and the differences between open versus closed walled gardens and some of those different dynamics from an economic sense. If you deal with money, you have to deal with anti-money-laundering laws and the different ethics around currency exchange. You also have the potential for virtual gift economies. Probably one of the biggest issues is surveillance capitalism and the business models. You know, do we own our data? What can we do with our data? What can other people do with that data to be able to undermine our agency? Is surveillance capitalism a business model that we want to move forward with? Obviously there's going to be lots of different companies that are involved here that may have a number of different viewpoints on that.
So this will be an opportunity to ask, what is the take on this? Are there different ethical dimensions of this that should be considered in terms of the data that are available and how that is monetized? Okay, so we have early education and communication. So FERPA laws, what are the ethical implications for youth using virtual reality, but also different dimensions of telepresence, data visualization, spatial language and metaphoric language, private communications, and how much of our data is being tracked about who we're talking to. So then you have home and family, private property. So you have the people that you're connected to, your home and your family. And then when you start to do volumetric scans of your home, what does that mean when you could be actually violating the privacy of your family members or spatially doxing yourself and revealing your location? And to what degree do property rights relate to the right to be able to consent? So you have, like I mentioned earlier, the Holocaust Museum example. Augmented reality comes into play a lot here. You could start to look at histories and our memories and memory holing, and the ecological impact, our relationship to the earth. So there's lots of issues here that you could dive into. Entertainment, hobbies, and sex. Obviously entertainment is a huge aspect in terms of what are the ethical considerations when it comes to entertainment and different aspects of immersive storytelling, and then the ethics around documentaries. I know Mandy Rose is here, who has done a lot of work with the ethics of documentary and media production. There's also just creativity and the arts, but there's also like virtual violence and how much violence are you willing to have? What are the impacts of some of these different immersive experiences? What kind of trigger warnings do we need to have? What about sexually explicit content?
Obviously VR pornography is a whole thing, but you know, I think a concern here is preventing explicit content and the different moderation that you have to have. And I think anybody that's doing user-generated content has to consider some of these aspects as well. So yeah, lots of different aspects here about entertainment. Just in general, if you're in the entertainment industry, what other types of considerations should be thrown in here for this industry vertical? And then you have like medicine and medical health. And I think this is interesting just because when I read through a lot of the ethics articles, you start to get into things like, what if you have a certain mental health condition? Are you more susceptible to having your agency undermined? Or if you have epilepsy, you know, there could be specific things that are triggering you. Or if you have PTSD and other traumas, then there are medically connected issues that we may need to be aware of. And as we move forward, what are those considerations for what kind of harm can be done from a medical perspective, but also just generally medicine within VR as a whole industry. So what are the ethics around do no harm when it comes to VR medicine and, you know, being able to heal trauma and the implications of that. So lots in general around XR medicine. So then other, partnerships. Here you have a dialectic between the self and the other, and the other here could be everything from others that are hostile. So the degree to which you have harassment within XR, I think this is for anybody that's doing a social VR application, you'll have to consider how do you deal with hostile people? You need to have blocking and personal space bubbles. You have all sorts of best practices that have been emerging. If there's suspensions and blocking and you know, how do you deal with this harassment issue?
If there's a cultural issue, to what degree can you technologically architect around it? There is also empathy and truth and reconciliation. So to what degree are there ethical considerations around VR as an empathy machine, which has been quite controversial in terms of, you know, leading towards this technological determinism. What are the benefits and the risks there? That could be a part that is discussed here as well. I think there's lots of room for discussion around some of these things that have been talked about, but this could be an opportunity for folks to really say, okay, let's have this conversation that we've been avoiding. So yeah, like deepfakes and your own identity and trauma tourism and also virtual influencers, AI beings, and the risks there. You know, with each of these contexts, there's so much stuff. This is certainly not a comprehensive thing. It's just trying to give you a flavor so that you can say, okay, here's something I have to contribute here. Here's something I can contribute here. So here we talk about death and grief rituals that are happening and how we deal with death and grieving. Zoom funerals have been a big thing, but also bringing the dead back to life. There's ethical issues there around like trying to resurrect people through AI or through virtual reality, but also just image rights. You know, after people die, what does it mean to have your avatar representation used after you die? There's also the whole area of the military in terms of like using extrajudicial military force to actually use virtual reality robots or drones to torture or kill people. So there's like different ethical issues. So experiential warfare and death and dying and violence I think could fit in here as well. So then philosophy, higher education, law. So this is an area where here we are, we're all talking about the ethics. There's going to be lots of different ethical approaches.
And I think in Ethically Aligned Design, you have different traditions. And so what kind of lenses are we going to have: utilitarianism, deontology, or is there going to be a contextual approach, looking at AI ethics and how we can learn from that? So just ethical approaches, and this is an opportunity for discussion about that. But also there's a lot about academia in terms of the way that VR is breaking down all these academic disciplines, and really trying to take into consideration how VR is going to be used in some of these other academic disciplines as well. Just being able to comprehend complexity. And yeah, the filter bubbles of reality. What is truth? What is reality? I mean, there are so many rich philosophical questions that VR introduces, you know, what type of content is illegal and yeah, just a whole list of things here. Okay. So the last three. Career, government, institutions. And so these are the ways in which institutions are using VR, whether they're governments or private institutions and enterprise applications. So that's like spatial design, architecture, or using virtual screens for production. But as an example, it could be like the Fourth Amendment: to what degree should the government have access to all this information with the third party doctrine? What is the future of public spaces when all these private companies are owning these spaces? So what is a public space as we move forward into the metaverse? You know, what if you have countries like China that are doing like loyalty tests, or the really Black Mirror-ish, real negative potential of what VR could do? And algorithmic transparency in general, like being able to see what's actually behind a lot of these algorithms. And then friends, community, and the collective culture. So this is everything from the social gathering. I think there's a lot of different implications when you start to have groups of people together. How do you cultivate a culture within XR?
This may be something where, if you're just a software programmer, now all of a sudden you have to like learn how to cultivate and engineer culture because there's only so much you can do with technology. So these are things like the code of conduct and ways to be able to say, like, here are the rules for these environments, maybe doing a little review of some of the best practices, ways in which you're hanging out with your friends, what are the different dilemmas that come up there, but also just generally like algorithmic bias. So when you talk about the collective culture, like who's being included, who's being excluded when it comes to creating these different algorithms and making sure you have a real diverse representation there. So that's a big issue here. And what are the normative standards and all these principles of diversity and inclusion. And social scores, I think, is another big thing. Like a lot of these applications are actually putting a score on you and your behavior. What if the government gets access to that? What are the implications of these social scores that are being implemented and who has access to that? If the government has access to that, are we okay? If it's like an implicit area of trust. And then finally, you have all the ways that you're hidden, exiled and different levels of accessibility. So this could be everything from banning or having temporary suspensions and putting people in like a virtual prison, or actually just prison rehabilitation. It's also combating isolation, and accessibility is a big issue here. You know, this could be a whole chapter in its own right. What are the ethical considerations for how to do accessible design within XR? And yeah, I mean, again, this is like a rough taxonomy. As I've been doing interviews and issues come up, I've been trying to put them in these different buckets. And as I read through things, I can sort them out.
Whether or not we end up using this as a conceit to say, okay, these are the different chapters, it may end up being that in order to really robustly cover some of these issues, we have to kind of break them apart and see how they're in relationship to each other. I think that's the existential dilemma, which is that this is so vast and interconnected that a lot of these themes that I'm talking about will end up showing up in multiple places, and we have to decide how we're going to structure it. We may need to have like subcommittee members who are taking ownership of this and say, hey, I'm going to really be the facilitator. And hey, I know there's somebody who wasn't at this meeting who would be great. And they have a lot of great research. Let's see if they want to come in and start to collaborate on creating these different chapters. The XR Ethics Manifesto that I did was sort of like an initial survey. And really, this group is to like take it to that next level of rigor, connecting to the research, having a deeper deliberative process, stress testing, seeing what's missing here, and just trying to be as comprehensive as we can. So again, the whole goal of what we're doing here is that we, as the culture, the industry and academia, are coming together and we want to put together this white paper. We're trying to close the gap, the pacing gap. The technological architecture and code is just zipping off. And this is our chance to say, hey, we're going to try to close this gap. So whatever effort we put forward is at least something that could inform other people as they put together the policy recommendations. And again, this is the rough timeline. We're here right now. I think the next step is to break up into these groups. We're going to have some sort of collaboration platform. It's not completely decided yet.
It'll be maybe some sort of clone that looks something like Slack, if it's not Slack, and maybe iMeet and other ways of like, what are ways that we can have high bandwidth conversations, and these different channels to start to have the conversation after this. So we don't have that immediately ready, unfortunately, to say, hey, go get started right now. We still have to set it all up and figure out what the technology platform is that's all GDPR compliant. This in some sense is pushing the IEEE to evolve, to be able to have the tools and technologies that are going to be able to facilitate this type of collaborative process with all the people that are here and the people that are maybe watching this video afterwards to start to get involved. But this is the goal, you know, within the next six months, we want to be able to come together, start to write and iterate a few times, and at the end produce this white paper. So we need to split into the subcommittees. And if you're interested in any of these, you can say, hey, I would really love to head this up, or I think this could be a whole chapter on its own. You know, the next big deadline I've set is about a month from now, March 5th, 2021, to have like a series of talks, so that we could aim towards, okay, the next step is to just connect to each other and say, hey, I want to talk about this. And, you know, to do a little bit more of a deeper dive. Whether that's all synchronous or asynchronously recorded, you know, we'll have to sort of decide. Maybe we each record videos and we watch them and we all come together and have like a brainstorming session. But before we start to get writing and stuff, I'd like us to just talk to each other, connect to each other, have discussions. And then from there, the first draft is due by like the end of April.
So that's about 12 weeks from now to be able to actually consolidate what I just gave you here as a big overview into like the first draft, and then kind of iterate from there toward the end of July. And if you are interested in collaborating, what we're asking you to do is to send an email to this email address, xr-ethics at IEEE.org. Overall, we're trying to be pretty open, but also have at least some ways in which you can get onboarded into this from this meeting. You all made it here. So congratulations for making it here and participating in this meeting. But to take it to the next step and say, hey, you know what? I'm actually interested in producing and collaborating and just being a part of the discussion. And then we'll be able to add you to that platform once all that is set up. And I think with that, again, my name's Kent Bye, I do the Voices of VR. I'll sort of hand it back to the rest of the committee to be able to further discuss and answer any questions. All right, so that was myself giving the official launch video for the XR Ethics White Paper Initiative, which is a part of the larger IEEE Global Initiative on the Ethics of Extended Reality. We're producing a white paper after six months and then giving some policy recommendations after that. And so, yeah, this is officially kicked off now. The next big step is going to be on March 5th. It's going to be some sort of symposium or series of talks, and there's a lot to be figured out. We're going to get onto our collaboration platform, and we're still figuring out the logistics of that. But if you're interested in getting involved, send an email to xr-ethics at IEEE.org and you can be added to that platform. There's also a signup page that you can look for in the notes of this podcast.
If you want to see the slides, you can actually go get the slides from SlideShare or watch the YouTube version to be able to see all the different visualizations and whatnot as well. Like I said in the talk, this initial framework that I'm providing is just merely a way to kind of map out the landscape. I'm going to be really listening to see what other things are emerging and who wants to kind of head up specific topics. And there could be things around hardware and the differences around the hardware and ethical concerns about how the hardware is produced. This is kind of an open-ended process that is going to be producing this white paper to be given back to the larger community to help navigate all these different ethical dimensions. And like I said at the top, this is sort of like my Wikipedia stub article. They found that when you go to a Wikipedia article and it's like a green link and you click on it and there's nothing there, it actually is harder for people to create something out of nothing. And usually what happens is they read something that is there and they say, no, that's actually wrong and here's what I'm going to say to be able to fix it. I think that's what I'm presenting here is in that spirit of like, OK, here's an initial mapping of the landscape. There's things that are missing. There's things that are wrong. Let's come in and try to figure out how to reach some sort of consensus within this deliberative process from the community. And for me, part of the reason why I am doing this is because when I went to the VR Privacy Summit at Stanford University that I had helped coordinate with Jeremy Bailenson and Jessica Outlaw, as well as Philip Rosedale from High Fidelity, we wanted to bring together like 50 different people from around the immersive industry. And we wanted to have like this one day XR Privacy Summit. And at the end of it, produce some sort of recommendations for the larger community about VR privacy.
But it was such a vast space that just spending one day was not enough to be able to even understand conceptually all the different dimensions. And so it was just like a first step. And I think as a result of that, I was like, OK, this is actually an open area for a lot of work to be done. And then I went to the American Philosophical Association and listened to Dr. Anita Allen say there's no existing comprehensive framework for privacy. Like, philosophically, it's an open problem. So there's a lot of things philosophically that are just interesting for me to help figure out what this landscape is and to help close this pacing gap, where the technology is just rapidly evolving and moving so quickly that our conceptual frameworks around them, as well as how to handle them from a policy perspective, are just really lagging behind. And so we need this type of effort from the community to come together and to try to upgrade our conceptual frameworks around all this stuff, and to be able to give something to designers to make sure that they understand these different trade-offs that they have to make when they make these experiences, because you're mashing together all these different design disciplines and people who are engineers may not know about all the different nuances of embodiment and the considerations for PTSD and the different considerations for creating what is essentially a digital therapeutic, but we're not like medical practitioners. And so being able to navigate all this different information. So this is just an opportunity for the community to come together to consolidate all this information and to feed it back into the community. And then from there, to be able to distill it down further into these policy recommendations. So, like I said, this is an effort where it's just something that needs to be done.
And I just saw my role of the Voices of VR podcast that even though I've been talking about these things, to really take it to the next step and to put it into that sort of academic rigor and have the folks in the research all come together and to put it together to be able to actually produce an artifact that is able to help shift the overall culture as we move forward, and to potentially help inform these other policy decisions that are being made. Because there's actively just a lot of discussion about all this right now, especially when it comes to the discussions around the federal privacy law within the United States. But I should also just mention that this is a global effort. There's people from around the world that are going to be involved with this. And so it's not going to be specific to the United States. It's going to be from around the world. And I think just from a copyright perspective, anybody that's contributing information that's like a singular piece of information, you maintain your own copyright, but then as soon as you start to combine that with other people, then that collaborative effort becomes a part of the copyright for the IEEE. And so we're just trying to bring people together to be able to help consolidate all this information and then to put it back into the community. Yeah. Anyway, I'm excited to see where this goes. I've never done anything like this before, so it's a little like overwhelming to say, okay, now we have to like actually bring all these folks together. But my whole take as a podcaster is just to keep the conversation going in different contexts. You know, we may end up having like different things on Clubhouse and just having these regular meetings or creating videos that we're putting out there. So I'm just excited as this is a big
opportunity to have this collaborative and deliberative process to be able to really get lots more folks thinking about this and to contribute something that's going to be fed back into the community to help close this pacing gap that we are facing. I should also say that I'm like a volunteer here. I'm not being paid by anybody, except for the listeners here of this podcast. And for me, I want to be involved with this and I am volunteering my time. And if you want to help support this effort financially, then one of the things you can do is just to help support my Patreon, to help support my time and effort and energy, to be able to help facilitate this process. This also supports all the work that I'm doing here in the Voices of VR podcast, which is not only facilitating these conversations within the community, but also capturing an oral history of the evolution of this medium. So if you'd like to support the work I'm doing here on the Voices of VR and to help support my own personal participation within this IEEE effort, then please do consider becoming a member of the Patreon. You can become a member and donate today at patreon.com slash Voices of VR. Thanks for listening.