Ikrima Elhassan of Kite & Lightning talks about the process of developing INSURGENT: Shatter Reality, a virtual reality experience promoting the Lionsgate film The Divergent Series: INSURGENT. He talks about how the project came about as well as many of the lessons learned along the way.

Ikrima also talks about the two plugins that they developed for Unreal Engine 4 in order to complete this project:

- UE4 Stereo 360 Movie Export Plugin – to easily create GearVR ports of their passive desktop experiences. It'll be available soon as a free, open-source plug-in.

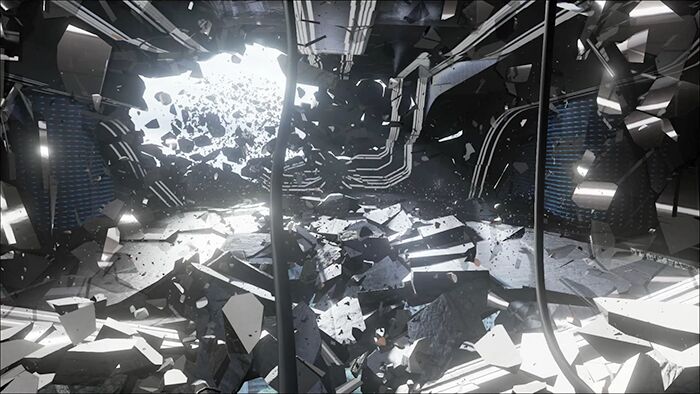

- Alembic Cache Playback – enables playback of Alembic files in UE4 so that they can import vertex cache animation, such as water simulations or rigid-body animation with up to 10k fragments.
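For readers wondering what "vertex cache playback" involves: a baked cache stores the mesh's vertex positions once per frame, and at runtime the engine samples it at the current playback time. The Python sketch below is a minimal illustration under our own assumptions. `VertexCache` and its fields are hypothetical; this is not the Kite & Lightning plugin's API or the Alembic library's API. It linearly interpolates between the two baked samples that bracket the playback time and clamps outside the cached range.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class VertexCache:
    """Hypothetical in-memory stand-in for a baked vertex cache:
    one list of vertex positions per sample, every sample with the
    same vertex count and ordering."""
    fps: float
    frames: List[List[Vec3]]

    def sample(self, t: float) -> List[Vec3]:
        """Vertex positions at time t (seconds), linearly interpolated
        between the two nearest baked samples, clamped to the first or
        last sample outside the cached range."""
        if not self.frames:
            raise ValueError("empty cache")
        x = t * self.fps              # fractional frame index
        last = len(self.frames) - 1
        if x <= 0:
            return list(self.frames[0])
        if x >= last:
            return list(self.frames[last])
        i = int(x)                    # sample just before t
        a = x - i                     # blend weight toward sample i + 1
        f0, f1 = self.frames[i], self.frames[i + 1]
        return [
            tuple(p0[k] + a * (p1[k] - p0[k]) for k in range(3))
            for p0, p1 in zip(f0, f1)
        ]


cache = VertexCache(fps=1.0, frames=[[(0.0, 0.0, 0.0)], [(1.0, 0.0, 0.0)]])
print(cache.sample(0.5))  # halfway between the two samples
```

Interpolating like this only works when vertex count and order stay fixed from sample to sample, which holds for rigid shards. Meshes whose topology changes every frame (common for fluids) can't be blended this way and would have to snap to the nearest sample instead.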

They wanted to have the widest release possible for the INSURGENT experience, and so it’s available via:

- A DK2 Version

- A Gear VR Movie Theater experience to watch the movie trailer and GearVR port of the DK2 Version (via Oculus Home on GearVR)

- A traveling city tour with a custom designed/built chair from the VR experience with full haptic feedback and 4D components

- Google Cardboard mobile apps (Android & iOS)

Theme music: “Fatality” by Tigoolio

Subscribe to the Voices of VR podcast.

Rough Transcript

[00:00:05.452] Kent Bye: The Voices of VR Podcast.

[00:00:11.918] Ikrima Elhassan: My name is Ikrima Elhassan. I'm one of the co-founders of Kite and Lightning, along with Corey Strasburger. We just finally released our latest VR experience publicly, a promotional piece for the Insurgent movie. It basically puts you in the role of a Divergent in the movie, and you get to experience being tested and put through a VR simulation by Kate Winslet's character, along with some of the other cast from the film.

[00:00:46.388] Kent Bye: Nice. And so maybe you could talk a little bit about how this project came about.

[00:00:50.476] Ikrima Elhassan: Sure. It came about almost by happenstance. We share a co-working space with a couple of companies in Los Angeles, and Lionsgate was meeting with their marketing agency at the time and happened to walk by our office. I think they had mentioned that they were interested in exploring VR, and the marketing agency, which we've worked with quite a bit on our commercial projects, introduced them to us. We put them through the Genesis and through Senza Peso, and they got really excited about it. We thought that was going to be the end of that, and then a couple of months later they came back to us and said, hey, we're really excited about VR. How can we get you guys to do a VR experience for one of our movies? That's when we started having the discussions, and it led to the Insurgent piece that everyone is seeing now.

[00:01:46.992] Kent Bye: Yeah, and it seems like they also had some people working with you and helping create the look and feel of this, because this is a sci-fi movie where you're being taken into another world, and with this VR experience, you start to get a little taste of this world. So maybe you could talk a bit about that collaboration in terms of getting assets and bringing the look and feel of this other world into a VR experience.

[00:02:13.038] Ikrima Elhassan: Sure. Yeah, you know, we really lucked out with the Insurgent movie and the Divergent world, because there's a rite-of-passage sim that all the citizens of this world go through, and it very much lends itself to VR; it's almost like a VR experience already. So we had a really easy tie-in with the movie content to create a VR experience out of it. We started the process by reading the script. They invited us over to read the script and have some brainstorm sessions about what we thought would look and feel really good in VR. And, to Lionsgate's credit, they were very supportive and encouraging, and hands-off in the sense that they gave us the creative freedom to come up with the experience. Once we went through our usual process, which is writing the script and Corey creating a play-based animatic of the experience, and we started to get a good feel for what the experience was going to be, we were able to ask the VFX houses for certain assets and concept frames, for example the holograms and what the lab was going to look like. We sourced as much of the movie material creatively as we could, to make sure the VR experience felt like you were actually being transported into the movie and not some other thing that existed outside of it. We got really fortunate. Corey knows all the details, because he was the one making all the art, but we got the train assets from the movie, and we had the references for the lab. We also went to set and filmed Kate Winslet and the other actors, and while we were there, we took a bunch of reference photos of the sets. Then we took all this reference material and rebuilt everything from scratch, or created original material to fit the movie's palette.

[00:04:20.939] Kent Bye: And because virtual reality is so new, when you're starting to shoot with these actors, do you give them an opportunity to try VR before they step into the process of creating a VR experience?

[00:04:34.305] Ikrima Elhassan: Absolutely. We didn't get a chance to do that with Kate, because she was on such a quick timetable that she literally had to fly out to London afterwards. But with Mekhi and Miles, we got them to go through Senza Peso to experience that. That got them even more excited about VR and about the fact that they were going to be the first actors in a VR experience that's specifically tied to the movie they're in. And that helped tremendously.

[00:05:05.728] Kent Bye: Now, in terms of the technical parts of the actual shooting, were you shooting with a stereoscopic camera that you guys built? Or talk a bit about how you actually are getting actors within this virtual environment in Unreal Engine 4.

[00:05:19.135] Ikrima Elhassan: Sure. We shot it with two Blackmagic cameras that Corey had cobbled together, and we shot everything on a green screen so that we could bring it in as a plate. So we just had a left eye and a right eye for a single direction. It was really quick, and we had the support of the film crew. They set up a side stage with the green screen and the lights and helped us set up that space, so the actors could basically walk in, do their take in two or three minutes, and then be done and back to all the heavy-duty film work they had to do. Once we had all of that, we knew our experience was going to be pretty much an on-rails experience because of a bunch of constraints. So we were able to do what we've done before, which is to cheat the location of the stereo billboards of Kate, the lab tech, Mekhi, and Miles. Then we composited them in 3D space where they needed to be, similar to how we did things in Senza Peso and the train station.

[00:06:26.583] Kent Bye: I see. Yeah. So if people were actually able to walk around, then maybe that would break down a little bit, it sounds like.

[00:06:31.307] Ikrima Elhassan: Totally, yeah. It's a total cheat that works right here and now for the experience that we created. But if you could walk around, you would totally see that they were flat, paper-thin.

[00:06:44.295] Kent Bye: And in terms of the distribution, it looks like you have a DK2 version, a Gear VR version, a Google Cardboard version, as well as a traveling city tour with what you say is a custom designed/built chair from the VR experience, with full haptic feedback and 4D components. That's quite a range of different experiences. I'm wondering if you could talk about what's different in each of those.

[00:07:08.230] Ikrima Elhassan: Yeah, I think it's had the widest release of any VR promotional experience to date, and that's kudos to Lionsgate. They wanted to put it out to as many people as possible and give people as many avenues to consume the content as they could. A lot of what we've seen before has generally been traveling tours, and if you don't happen to be in one of those cities, you never get to see it, which is a bit of a shame for us. So we were really excited that Lionsgate wanted to just blast it out. The main tour has a full haptic chair that was designed by us at Kite & Lightning. Corey drew out all the designs for the chair, and it basically matches the chair that's in the VR experience. We designed and wrote out a 4D script of the haptic moments: when the chair should rumble and when the ButtKicker should kick on. There's also a fan in front of the viewer that blasts air while you're being pulled through Chicago or when the crows are attacking you. The company in charge of the actual tour and the build-out, Thinking Box, was amazing. They built out the chair and decked out the entire traveling truck. If you got a chance to see it in one of the traveling cities, you saw all the craziness that they put into it. So they were responsible for actually building out the designs that Kite & Lightning made, and that's probably the most full-on way to experience the Insurgent piece. Then we obviously have a version that we put out on Oculus Share.
And then we made the ports to MilkVR, to a separate Gear VR app, and to Google Cardboard on iOS and Android by taking the existing real-time rendered Unreal Engine 4 experience. We wrote a custom plugin to export a stereo 360 panoramic video from it, so all the mobile and lower-powered devices can just play that video back in real time with head tracking enabled. That worked out tremendously well. Instead of having to create a crappier version of the experience, we could keep the same visual fidelity across all the different devices, especially because our experience was an on-rails experience.
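Ikrima doesn't go into how the export plugin builds each stereo panorama. One common technique for stereo 360 frames is omni-directional stereo (ODS): every column of an equirectangular image is rendered from an eye position offset sideways on a small circle, so both eyes keep a correct horizontal baseline in every viewing direction. The Python sketch below computes the ray for one pixel under our own assumed conventions (y up, +z forward at the image center, +x to the right, a 64 mm IPD by default). It illustrates ODS in general; it is not the Kite & Lightning plugin's code, and `ods_ray` is a hypothetical name.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def ods_ray(u: float, v: float, eye: str, ipd: float = 0.064) -> Tuple[Vec3, Vec3]:
    """Ray (origin, unit direction) for pixel (u, v) of an omni-directional
    stereo (ODS) equirectangular frame.

    u, v are normalized image coordinates in [0, 1]; (0.5, 0.5) is straight
    ahead. Assumed conventions: y up, +z forward at the image center,
    +x to the right. eye is "left" or "right"."""
    if eye not in ("left", "right"):
        raise ValueError("eye must be 'left' or 'right'")
    theta = (u - 0.5) * 2.0 * math.pi  # longitude; positive turns to the right
    phi = (0.5 - v) * math.pi          # latitude; +pi/2 at the top row
    direction = (
        math.cos(phi) * math.sin(theta),
        math.sin(phi),
        math.cos(phi) * math.cos(theta),
    )
    # Each eye sits on a horizontal circle of radius ipd/2, offset along the
    # "right" vector (cos theta, 0, -sin theta), which is perpendicular to
    # this column's viewing direction.
    sign = 1.0 if eye == "right" else -1.0
    r = ipd / 2.0
    origin = (sign * r * math.cos(theta), 0.0, -sign * r * math.sin(theta))
    return origin, direction


print(ods_ray(0.5, 0.5, "right"))  # right eye looking straight ahead
```

Rendering each column from a slightly different origin is what keeps the stereo correct all the way around. A plain pair of side-by-side 360 cameras would lose depth when you look to the sides and swap eyes when you look behind. ODS still distorts depth near the top and bottom of the sphere, which is why pipelines commonly fade the eye separation toward the poles.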

[00:09:45.819] Kent Bye: Wow, nice. Yeah. And why did you end up writing a separate app for Gear VR rather than just distributing it through MilkVR?

[00:09:54.068] Ikrima Elhassan: The MilkVR component is the experience itself. And I'll say that RealFX, another VFX company, was actually responsible for building the mobile app wrapper. The wrapper was made for basically two reasons. We needed it for Android and iOS on Google Cardboard, and it also had a bit of other functionality: you could see a bonus clip from the movie inside the app, plus some behind-the-scenes footage and photos as an add-on to the VR experience. So that's what you would get if you downloaded the separate Gear VR app.

[00:10:38.108] Kent Bye: Ah, I see. It's very similar to running it on Cardboard. Is that the same app as if you wanted to watch it in Cardboard then?

[00:10:46.053] Ikrima Elhassan: Exactly. It's the same app that you would get to see in Cardboard. OK.

[00:10:50.380] Kent Bye: Great. So tell me a little bit more about this haptic chair, because I feel like the haptics would give people a lot more immersion. And I'm just curious from your own personal experience of going through the experience in the haptic chair and what you've seen with other people who have gone through it.

[00:11:05.287] Ikrima Elhassan: Yeah, the haptic chair is definitely a big component. It adds to the level of presence and immersion. Anytime you can add more senses to a VR experience, the more powerful and immersive it gets. The haptic chair was really awesome because we tied it to the experience: when the lab exploded, you felt your entire body shake from the chair. When the tentacles injected you with the sim serum, we had actuators along the edge of the armchair and the armrests so that you felt it in your wrists, and it just sucks you into the experience a lot more. And the haptics don't necessarily need to be so fine-tuned. Even a little bit of haptic or force feedback, I feel, goes a tremendously long way toward achieving that emotional effect.

[00:12:01.410] Kent Bye: Nice. And one of the other things you said in the email blast that went out is that you wrote an Alembic Cache plugin. So maybe you could talk a bit about what that enables for you guys now?

[00:12:13.825] Ikrima Elhassan: Sure. Yeah. So we wrote basically two plugins for this project. The first one was the Alembic Cache plugin. Very briefly, Alembic is a baked-down file format that all the VFX houses use. Imagine you have this crazy massive ocean simulation or this destruction sequence, and it's super complicated and has all sorts of moving parts that are specific to the software program you're using. You bake all of that down into an Alembic file, and then you can play that file back pretty quickly and performantly, relative to the offline world. We knew we were going to have this huge destruction sequence, and we also wanted to do soft-body-like animation later on in other things we were doing, like oceans or fluids. We decided the best way to do this was to write an Alembic importer so that we could import those files into Unreal and play them back in real time. That was a big boon for us. If you see the lab destruction, it has 10,000 shards being destroyed everywhere, and if we had done that with a traditional FBX file, it would have brought the performance down to a crawl. So that was a huge help, and we're hoping to release it in the next couple of months or so. The other plugin we wrote was the stereo export plugin, which is probably a lot more applicable for a lot of VR developers, because it enables people who create these high-end VR experiences for desktop to create a mobile port of their experience without doing any additional work. We've gotten a lot of interest from people who want to use it to create a VR Let's Play video of what they're playing, and a lot of developers want to use it to create a Gear VR trailer or, when WebVR takes off, a WebVR trailer for their game.
So someone can just basically go watch the video in VR, and it'll feel like they're just in the game, minus any interactivity, without them having to download the game, install it, get all the prereqs, and all of that. So we'll definitely be releasing that, I think, next week. And we're going to open source it, and it's going to be for free. And hopefully the guys at Epic will take it and integrate it into the engine. And then everybody will be able to benefit from that.

[00:14:57.604] Kent Bye: Yeah, it definitely seems like even the Oculus Story Studio and Oculus VR have been using the Unreal Engine 4 a lot for a lot of their cinematic VR experiences. Do you see that it's a split between if you're going to do more cinematic VR rather than a game, you may go with Unreal Engine 4? Or if you're going to have a little bit more game mechanics, then go with something like Unity 5?

[00:15:20.888] Ikrima Elhassan: You know, it's kind of up to the developers on that front. I wouldn't say the distinction would be whether you're making cinematic stuff or not. For us, it was always that the Unreal Engine was a world-class AAA engine, and being able to access the source code has been the only way we could accomplish a lot of what we've accomplished over the last six to eight months. That's been the biggest strength and why we chose Unreal. I think Unity has a lot of really awesome advantages as well. They are bringing their render engine up to a higher visual fidelity with Unity 5, and I think it's geared more toward an artist-driven workflow, where everything is made so that it's accessible for everyone. That precludes you from doing some awesome advanced things, but if I was on a team that didn't have deep technical expertise, I would see Unity as an attractive option.

[00:16:33.701] Kent Bye: Interesting. Yeah. And in your DK2 version of this Insurgent experience, I'm curious what the positional tracking is adding, or if you're using that at all, or if it was just making it more complicated, or what's the difference between the interactive version, or maybe it's not interactive, but the DK2 version of it?

[00:16:51.248] Ikrima Elhassan: Sure. So it has positional tracking, and I think for some people the subtlety of just being tracked in your space, even if you're not moving a lot or leaning in, helps tremendously to reduce VR sickness. So that's always a big plus. As far as the experience itself, we have an avatar that uses the costume of a Divergent character from the movie. When you look down, your avatar rig is tied to your positional tracking, so if you lean in, it's set on an IK rig so that you feel your body shifting its weight as you move around and shift your own weight. The other portion we put in is a section in the train destruction sequence where you are able to look around and destroy the tracks, so you can interact with the world. Whatever section of the train tracks you're focusing on would just explode and shatter, and you can move the shattered pieces around by focusing on them and looking at them. Obviously, in the Gear VR video version all of that is scripted, so you can't really control it.
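The focus-to-shatter interaction Ikrima describes is a form of gaze-based selection. One common way to build it is to track how long the head's forward vector stays pointed at each target and fire once that time crosses a threshold. The Python sketch below assumes a 5-degree gaze cone, a 0.75-second dwell, and hypothetical `GazeTarget` and `update_gaze` names; it is an illustration of gaze dwell in general, not Kite & Lightning's Unreal implementation.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class GazeTarget:
    """Hypothetical shatterable object, e.g. one section of track."""
    position: Vec3
    dwell: float = 0.0       # seconds of continuous gaze so far
    triggered: bool = False  # True once the shatter has fired


def angle_deg(a: Vec3, b: Vec3) -> float:
    """Angle between two vectors in degrees (180 if either is zero-length)."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    if na == 0.0 or nb == 0.0:
        return 180.0
    c = sum(x * y for x, y in zip(a, b)) / (na * nb)
    return math.degrees(math.acos(max(-1.0, min(1.0, c))))


def update_gaze(head_pos: Vec3, head_forward: Vec3, targets: List[GazeTarget],
                dt: float, cone_deg: float = 5.0,
                dwell_needed: float = 0.75) -> List[GazeTarget]:
    """Advance each target's dwell timer by dt seconds and return the
    targets whose shatter should start this frame."""
    fired = []
    for t in targets:
        to_target = tuple(p - h for p, h in zip(t.position, head_pos))
        if angle_deg(head_forward, to_target) <= cone_deg:
            t.dwell += dt
            if not t.triggered and t.dwell >= dwell_needed:
                t.triggered = True
                fired.append(t)  # caller would start the shatter effect here
        else:
            t.dwell = 0.0        # looking away resets the timer
    return fired
```

Resetting the timer whenever the gaze leaves the cone keeps a passing glance from triggering anything. A real project would more likely test against each object's bounds with a raycast than against its center point.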

[00:18:02.037] Kent Bye: Nice. So it seems like doing a client project like this, you are kind of ironing out your pipeline to be able to do these types of, you know, scoping and planning and production. And I'm curious what some of the big lessons learned after going through this whole process. When you look back, things that you may improve or do better next time, or just sort of like golden nuggets that you've learned through this process now.

[00:18:27.118] Ikrima Elhassan: Yeah, absolutely. These client projects have been tremendous in helping us iron out our pipeline, figure out how to do things, and explore different avenues of VR. The biggest lesson I would say from the Insurgent experience is that we're becoming more cognizant of how big a technical challenge, or how many new technical challenges, to take on for each VR experience, given the size of our team specifically. The Alembic thing, for example, was a huge undertaking on its own, but bringing it into VR, making it performant and run well in VR in the Unreal Engine, was its own hairy ball of problems. The other thing we've learned, pipeline-wise, is that every time we try a new pipeline, there are going to be hiccups. For example, the dynamics destruction for the lab environment was riddled with problems from buggy software on the VFX front. In the offline world, you have a lot more time and a lot more resources, and people can just hand-fix the errors or spend a lot of artist time on them. For example, the lab ceiling kept exploding because it was unstable in the sim every time. It would just jitter and explode, and it would be completely incorrect. On the forums, the way everyone was fixing it was by hand-adjusting these settings on thousands and thousands of objects, and we were like, well, we don't have the time and bandwidth to do that. So the big takeaway was realizing that even if something is technically possible, the practical workflow might still be riddled with a myriad of problems.
Then from the VR side, I think one of the big things was that the pullback in the Insurgent VR experience surprisingly makes very few people sick. We're exploring and expanding the boundaries of what you can and can't do. A lot of people early on were like, no, that would absolutely be a horrible thing to do: pulling someone backwards with this downward and backward camera move? We tried it, it worked, and Corey finessed it to where it felt really good and rode that fine line between something nausea-inducing and something that gives you the anxiety you're supposed to feel in that moment.

[00:21:19.750] Kent Bye: Yeah, and it's really interesting to think about like using a new interactive and immersive medium like virtual reality to promote a 2D film from, you know, something from the previous medium. And so there's going to be strengths and weaknesses from like a film and a film trailer. And so I'm curious how you go about trying to distinguish yourself from not just creating a film trailer.

[00:21:42.028] Ikrima Elhassan: That's a good question. We haven't really thought about it that way, although a lot of people, after seeing the experience, said, man, it'd be awesome if all movie trailers were made like this. And a lot of people said after they'd seen it, well, I'd never heard of the movie before, but after this, I kind of want to see it. For us, that's the best thing they could say about the experience, because its ultimate goal is to get someone so excited, and have them love the VR experience so much, that it spills over into actually going to see the movie. So I wouldn't say we're too worried about the experience being thought of as a trailer for the film.

[00:22:26.741] Kent Bye: And how does Lionsgate measure the success of a project like this? I mean, there aren't a lot of people with DK2s, maybe 100,000 or 150,000, I'm not sure what the number is, and Gear VR is maybe around the same. And then there are probably a lot more people with access to Google Cardboard, but maybe not with the Cardboard HMD. So I'm just curious: with the limited number of HMDs, and it's not even a consumer-released product, aside from perhaps the Gear VR, what kind of metrics are they looking at to tell whether or not this is a success?

[00:23:01.221] Ikrima Elhassan: You know, that's a really good question for Lionsgate, as far as exactly what specific metrics they look for. From a high level, as far as we're concerned, it's the amount of buzz it generated and the number of people having conversations about it. Even if people don't have a DK2, they've seen that there's this VR experience and they could go see it, or they've watched their favorite YouTube celebrities, like PewDiePie, for example, go through the experience. That raises awareness about the movie, and it also drives interest and engagement. As far as the portion that we're directly involved with and can see into, it's the articles in Vanity Fair and Variety covering this, along with the downloads: I think on Oculus Share it's been downloaded 5,000 times already in the two or three days it's been available. Those have been the big success factors for us.

[00:24:03.323] Kent Bye: Nice. And in your email blast, you said that you've got another client project that you're going to be working on until about June or so. And then for the last half of the year, you're going to start to be working on your own projects. And what can you tell us about what you're going to be cooking up?

[00:24:17.922] Ikrima Elhassan: Can't say much about either of those. We have another VR client project that we're super stoked about, with one of our clients that we absolutely love working with. And we have a huge book of ideas for VR experiences, narratives, games, and interactive pieces that we want to do, and we just have to go through the creative process of whittling them down to the ones we want to try out. The beauty of it is that we're structuring it so that for the rest of the year we'll be able to focus solely on that and not work on client or commercial work. Fortunately, we've been able to save enough capital from all these client and commercial projects to fund these original K&L experiences that we love creating. And hopefully, by the time the end of the year rolls around, consumer headsets will be out, and we can transition into primarily creating original content.

[00:25:20.900] Kent Bye: Nice. And I know since the last time I spoke with you and the rest of the Kite and Lightning team, you have also done The Voice VR experience. Did you do anything beyond that? Or maybe you could talk a little bit about some of the other projects that you've done.

[00:25:35.253] Ikrima Elhassan: Sure. Yeah, we've done three client projects to date. We did The Voice for NBC; that was our first one, way back. We've also done a GE subsea experience that took you down into the depths of the ocean on a submarine ride to showcase the R&D that GE is doing on the ocean floor. And then we did the Insurgent VR experience. Since we last talked, those are the three big ones that we've done.

[00:26:08.827] Kent Bye: Nice. And did you get a chance to try out the HTC Vive at the GDC?

[00:26:13.870] Ikrima Elhassan: We did. I got a chance to sneak in there, and it was amazing and awesome. Super fantastic. I saw the Vive, I saw the Weta Crescent Bay demo, and I saw the new Sony Morpheus demo.

[00:26:28.110] Kent Bye: Right, so have you guys started to think about how to do cinematic VR experiences with fully walkable spaces and two-handed interactions as well?

[00:26:36.013] Ikrima Elhassan: Absolutely. We were super stoked. We can't wait. I think it's going to be interesting, because it's a big investment from a consumer's point of view just in terms of setting the thing up: you need a big space, right? Even if you can walk 15 feet by 15 feet, we don't even have a 15-by-15-foot empty space in our studio, much less in our living rooms. So it's going to be really interesting to see how people take that into consideration and how many people actually go through the effort of carving out that big of an empty space in their living room. But the fact that you have input controllers, and they're super solid and intuitive, and they just nailed the controllers to the point where we can do a lot of amazing things with them, that makes us extremely excited.

[00:27:31.825] Kent Bye: Cool. And finally, what are some of the types of VR experiences that you want to experience?

[00:27:38.768] Ikrima Elhassan: I loved the Weta demo. I know that probably cost the price of a young nation-state, but it was unbelievable. I could be in that type of experience for an hour, an hour and a half. The other one that I absolutely loved more than anything was The London Heist in the Sony Morpheus demo. You were just sitting opposite a London, a British gangster, while he's delivering his dialogue, and I just remember that the interaction design they did for that piece nailed it to a T. When you leaned from side to side, he would shift his body weight and he would look at you. I remember I leaned in close to look at his face, and he flicked his cigarette at me and I leaned back. I remember looking away from him to study the room, and he pulled out the gun and shot at the room to bring my attention back. I mean, it was perfect. It was exactly what I think VR is going to be, what's going to make VR amazing, and critically so: it's this dynamism between you and your environment. I couldn't affect the storyline. The storyline was the same no matter what I did, but it was reacting to what I was doing while I was in it. I was like, you know what? This is what cinematic narrative in this space should be like.

[00:29:01.885] Kent Bye: Oh, that's really interesting. Yeah. Because, you know, both the Weta demo and Lost (I'm not sure if you've had a chance to see the Oculus Story Studio short called Lost) were very passive, in the sense that they're very on-rails and you don't have a lot of agency to interact with them. But it sounds like they're starting to do a bit more user interaction with that London Heist piece that the Sony production crew put together.

[00:29:25.622] Ikrima Elhassan: Yeah. No, it was super well done. I mean, I think it was a AAA studio, a Sony AAA studio that did it, and it showed. It was absolutely beautiful.

[00:29:35.171] Kent Bye: Awesome. Great. Well, thanks so much, Ikrima, for joining us today.

[00:29:39.595] Ikrima Elhassan: Absolutely, Kent. Always a pleasure talking with you.