I did an interview with Moor Insights and Strategy principal XR analyst Anshel Sag at Meta Connect to hear some of his latest thoughts on the biggest announcements, but also a broader reflection on how Meta is focusing on how these new AI glasses wearables like the Meta Ray-Ban Display glasses, as well as the upgraded Ray-Ban Meta Gen 2 and Oakley-branded AI glasses, will provide a platform for Meta’s AI products. You can see more context in the rough transcript below.

This is a listener-supported podcast through the Voices of VR Patreon.

Music: Fatality


Rough Transcript

[00:00:05.458] Kent Bye: The Voices of VR Podcast. Hello, my name is Kent Bye, and welcome to the Voices of VR Podcast. It's a podcast that looks at the structures and forms of immersive storytelling and the future of spatial computing. You can support the podcast at patreon.com slash voicesofvr. So continuing my series of Meta Connect 2025, today's interview is with Anshel Sag. He's a principal analyst at Moor Insights and Strategy. So this is actually the first interview that I did at Meta Connect. And it was before the keynote, before all the big announcements had been made. I had a chance to attend the press day at the last minute and go through all the major demos that were there. And so this was an opportunity for me to kind of share some of the different first impressions and reflections on Meta Connect, what I was able to see, but also really focused on the upgrades to the Ray-Ban Meta glasses generation two. Then they have the Oakley glasses. They also have the Vanguard glasses, which is getting more into fitness for athletes, but I think they're actually going to be expanding this out to all of the glasses, these integrations with Garmin and Strava. So with Garmin, there's watches that are actually tracking a lot of biometric data, and Strava is an app to track your exercise and hiking and whatnot. So having these different integrations, taking these streams of data and overlaying it into either time-lapse or other video. So with the Oakley Vanguard, they have a single camera right in the middle of your face. So it's a little bit more centered and also oriented as a vertical video rather than a horizontal video. So made more for sharing different videos on social media. They have like a slow motion option. They have like a time lapse and also just an increased amount of decibels for people who are out exercising and they want to listen to music.
So again, leaning into more of the audio features and creating more of a hands-free computing experience for folks as they're going about their day. On the second day, they did announce that they're going to be having the Wearables Device Access Toolkit, which is more of a developer kit for mostly the existing AI glasses and more of the camera and speaker and microphone features. Nothing around the Meta Ray-Ban Display, like getting access to the HUD or anything like that. There's no roadmap for developers to get access to that, although I imagine that they will eventually. But as of this moment, it's mostly first-party apps that they're focusing on. So it's barely a developer kit at this point because we don't know what the future is going to be. And it is curious that the only way to get access to buying one of these, you can't buy them online. You have to actually go to one of the physical locations where they're showing demos. And when I just checked, most of the demos are booked out for most places. So it might be difficult to actually get a hold of some of these because you'll have to get fitted for the neural band. And I think they just want people to have a direct experience with the technology. And maybe after, they're so wowed that they'll buy it. I guess that's the idea, that they'll have better success if they limit it to that. Although there's going to be a lot of people who do want it, who won't be able to get it because they can't get access to a demo slot. And the other thing that we mentioned here at the end, the Hyperscape demo, being able to use your Oculus Quest to take a scan of the room and then send all that data up to Meta that then translates it into a Gaussian splat. That is probably one of the most impressive things technologically that were also coming out. So just the level of quality of what you're able to do with these kind of high-quality scans of Gaussian splats that are just using the normal Quest headset.
And there's obviously a lot of processing that's happening on the back end that is able to facilitate that as well. So the Hyperscape demo is also something that you can download and scan and try out for yourself. Also, just some reflections on the larger pivot towards artificial intelligence for Meta in terms of both the Ray-Ban Metas and the Meta Ray-Ban Display glasses, where the primary use case that they've been talking around is for artificial intelligence. And so there does seem to be, again, this kind of pivot away from explicitly VR-centric technologies. They did have demos there for mixed reality and more of an emphasis of, let's say, the entertainment or storytelling. At the keynote, Mark Zuckerberg said that there is a shift towards more immersive storytelling and 3D storytelling, and it's going to be one of the more exciting developments in the coming years. I think it's going to drive a new wave of adoption of virtual reality and glasses. And so basically they rebranded the Oculus TV to Horizon TV, where now they're going to be focusing on a lot more virtual 3D videos. They were showing a demo from James Cameron's 3D movie that's coming out, Avatar: Fire and Ash. No details as to whether or not they're actually going to be distributing that in any capacity, but it did look impressive. Although from my eye, I don't know if it was the frame rate of 24 frames per second, or if it was stuttering a little bit, but it wasn't as smooth as the experience of watching Avatar on the Apple Vision Pro. From my experience, the Apple Vision Pro was a little bit higher quality.
But for people that were just using the Quest as a media consumption device, that seems to be an area where they're seeing a lot more people using that in that capacity. And so they want to do other things like what Cosm is doing, where they're showing The Matrix and they're showing all these associated things around it. They're doing something similar where they're showing a video in a 2D frame, but then adding all these kind of mixed reality components or even like more fully VR environments, where they're adding aspects of what's happening in the scene into the environment around you. So you get more of an immersive storytelling experience, and immersive storytelling in the sense where it's from traditional cinematic tradition and adding more spatial elements within the context of the things that are beyond the 2D frame. So this melding between those two realms is something that they were also demoing there on site and something that Zuckerberg has identified as this kind of new casual mixed reality use case for media consumption. So that was also an emphasis at this year's conference. So we're covering all that and more on today's episode of the Voices of VR podcast. So this interview with Anshel happened on Wednesday, September 17th, 2025 at Meta Connect at Meta's headquarters in Menlo Park, California. So with that, let's go ahead and dive right in.

[00:05:59.168] Anshel Sag: I'm Anshel Sag. I'm principal analyst at Moor Insights and Strategy, and I cover the XR space from a hardware and platforms perspective with a focus on semiconductors and how all of it meshes together with other areas that I cover like PCs and smartphones and 5G. Nice.

[00:06:20.741] Kent Bye: So maybe you could give a bit more context as to your background and your journey into being an XR analyst.

[00:06:25.939] Anshel Sag: Yeah, so I started way, way, way, way back when I was 16 working in electronics prototype design. I was like an intern, started in hardware, and then I went into PC hardware, graphics cards and motherboards. And then I started becoming a journalist and covering the smartphone space and actually XR. And then as I transitioned to being an analyst, I continued to cover XR. So I've basically been covering XR since 2013, and I've seen all the ebbs and flows and the ups and the downs, and it's been quite the journey for a lot of us.

[00:07:01.803] Kent Bye: And so we're here at Meta Connect. We're still in the press period here where all the announcements and keynote is actually going to be tonight at 5 o'clock where all the big announcements are going to be made. We have received some of the embargoed information that's going to be talked about. And so for you, what are some of the biggest news items that are going to be covered today?

[00:07:20.146] Anshel Sag: It seems like there's a much stronger focus on fitness and health, especially with the wraparound glasses, the Vanguards. But also, I think they've really addressed a lot of people's concerns around the last generation of Meta Ray-Bans with battery life. You know, they've effectively doubled it, at least according to them. And I think that will help a lot with the number of battery cycles and the longevity of the battery because, you know, the more times you recharge the battery, the less long it will last. So I think that's a big focus point for a lot of people. And all of the products they're introducing have pretty good battery life, regardless of which one you pick. So I think they really focused on battery life as a key user experience improvement across the line.

[00:08:06.144] Kent Bye: Yeah, the Oakley Vanguard wraparound glasses, they say it's a 122-degree field of view. And I asked them because it was in more of an up-and-down portrait perspective. And so, you know, I come from the VR world, and with a 120-degree field of view, you know, I put my hands out. And then when I watched the video, it was like immediately cut off because it was just a vertical video. So when I asked, they said, oh, well, that's the diagonal field of view. So yeah, they had me do a demo on a bicycle and they had a Garmin watch that was hooked up. And so they had data that was being sent over and kind of encoded into the videos. And so, yeah, I'm just curious to hear some of your impressions of the Oakley Vanguard glasses.

[00:08:48.072] Anshel Sag: Yeah, I actually was very impressed with what I saw. I actually had them send me my footage. I don't know if you did that too. But it was really interesting because they let you control what kind of data you share, right? Because you can share heart rate data, you can share all kinds of different types of data. I think the partnership with Strava is going to be really powerful and useful. I see that they're not just connecting Garmin watches, but also other Garmin bike accessories, which I think is important because, you know, not everybody's going to want a Garmin watch. Some people are going to want other accessories. So I think that's good. And honestly, the three-point, like, mounting system on your face, it's pretty solid. Those feel comfortable. They feel like they're really on your face. I wouldn't worry about, you know, going in the wind and having them fly off my face. So I think there's gonna be a lot of cool applications of these glasses that maybe we didn't see in previous iterations because, you know, they weren't really designed for more extreme sports. But I would expect lots of people who go skiing this winter are going to be really excited to wear these. They're going to be like a perfect fit for that kind of experience, but also other fitness applications, I think. I would love to have seen them figure out a way to do like a heart rate sensor built in. But I think that right now they're focusing on partnering with people who have really good track records like Garmin. And I think maybe that's the right approach as well, because, you know, I don't know if they really want to take on biometric data because then that introduces more regulatory challenges.
But yeah, I'm pretty impressed with what I've seen. I do like the adaptive audio that kind of adapts to the speed that you're going. So if you go really fast, it creates more wind noise, so then the glasses get louder. And, you know, they're the loudest smart glasses I've ever worn. Obviously I don't need them to be that loud, but I will say there are some venues like concerts and sporting events where you kind of want them to be louder because the environment around you is really loud. And when I was doing the demo, I actually had them almost at max, not even at max. And I was having a hard time hearing the guy literally standing next to me. So I had to turn them down. So they're very loud. And, you know, they don't really look special. They look like regular Oakley glasses. So they've done a really good job with the ID and ensuring that they just fit in. And I think that's kind of been a part of Meta's success with this, is that, you know, they have worked really closely with EssilorLuxottica to really fine-tune that design so that it's socially acceptable, which I think is what Google Glass missed out on.

[00:11:06.588] Kent Bye: Yeah, I also had that same experience where I wanted to hear what music sounded like. Because if I were to wear those glasses, I wear glasses, and I would have to wear contacts in order to wear that, because when I try to put them over my glasses, they just wouldn't fit at all because they're not built for putting over anything else. And so, yeah, when I was talking to the other guy, it was hard to hear what he was saying when he's standing right next to me. And so they said it was around 6 dB louder because it's designed for people who are on a bicycle or running. And so, yeah, there was just like this connection between the Garmin watch and asking information in terms of like, how far am I on this trip? What's my heart rate? And so, yeah, I guess as people are doing their fitness exercise just to get all that biometric data fed back into them to, I guess, encourage people in this fitness sector, which I think we've seen in the VR and XR space in terms of fitness really being an area where XR has picked up because it can actually encourage people to exercise. Yeah, just kind of the quantified self of having additional information and metadata just to help encourage you and to have some trace of that overlaid on some of the videos. There was also like a time lapse option and also an option to do slow motion. It's that same trigger button that you can do. He had actually accidentally set it to slow motion and then we saw that like slow motion video and then the time lapse, which I thought was also kind of a cool thing to be able to take time lapse of an exercise.

[00:12:37.918] Anshel Sag: And the battery life is designed to enable a time lapse. Like you can wear it for multiple hours. And that just wouldn't have been possible if they hadn't increased the battery life. And then they have that action button so you can have programmed functions that you one tap on the glasses, which I think, you know, makes them more useful. And I think people will wear these glasses more because they have these capabilities that are useful. And it seems like they're also upgrading Meta AI quietly to make it more useful. So I just think that most of what I'm seeing is pretty welcome, but I feel like there's not enough of a talk about developers and enabling third-party applications to take advantage of these glasses. And, you know, it's pretty limited right now to like, you know, Spotify and Amazon Music and a lot of first-party Meta apps. But that's like, you know, the universe doesn't really revolve around Meta, whether they like it or not.

[00:13:36.359] Kent Bye: Yeah. And I'm curious why you decided to go with the Oakley glasses, because we had a choice between the Gen 2 of the Meta Ray-Ban versus the Oakley. So it looks like you got the latest Oakleys.

[00:13:45.515] Anshel Sag: Yeah, I mean, it's kind of a combination of I had two generations of Wayfarers, and I liked them, but when I tried on the Oakleys, they just looked right on my face. And these ones are the all-black ones, so they look a little bit more like regular glasses. While I like the white ones with the gold lenses, they're a bit flashy. And these ones are also with transition lenses. I think these are the only ones that came with transitions. So yeah, I really like transition lenses. I had my previous pair with transitions. Again, it seems like they're not quite as dark as if you just got the sunglasses. So I'll probably still stick to getting a pair of regular sunglasses or using the Oakley sunglasses.

[00:14:27.799] Kent Bye: And have you been using these AI smart glasses in your day-to-day life?

[00:14:31.960] Anshel Sag: Yeah, extensively. One, because I have a toddler at home and it's a lot easier to capture her with the glasses on. But she's also smart enough already to be aware that I'm taking pictures or recording. She's not convinced that they're not real glasses. But also, you know, walking the dog down the street, going for hikes, it's really just nice to have the glasses for taking pictures. Like, you know, sometimes I don't want to take my phone out. I want to be present and I can just tap the camera once really quickly or even just use a voice command and have my hands be free. I've also done some product videos with the glasses on. So, you know, there's no better POV camera than the one that's on your face. And yeah, like, you know, I've done it for texting, like the voice-to-text function's really good. So I text my wife back and forth all the time without taking my phone out, which is really nice. Because, you know, if I'm walking a dog, or I'm holding the baby, it's just not really ideal to have to pull my phone out.

[00:15:29.215] Kent Bye: Yeah, in terms of the specs that are increasing, what are the things that you're noticing in terms of the bump up of these next generation, the Gen 2 of the Meta Ray-Ban smart glasses, as well as Oakley? What are these glasses called that you're wearing?

[00:15:41.280] Anshel Sag: They're the HSTNs. I forget what that actually stands for. I will never remember, but it's Houston, I think it is. But most of it's battery life. I think it's the same chipset, but they did upgrade the cameras. I don't know if they did it on the Wayfarers, but these Oakleys and the other Oakleys are both 3K video. So that's an upgraded image quality there too. But otherwise, I don't think there's like a huge upgrade. But I think that's really what people wanted, was just better image quality and, on the Vanguards, better field of view and battery life. Otherwise, it's pretty good. I think Meta AI has room for improvement, but that's software and that can just kind of be a rolling thunder for infinity.

[00:16:27.037] Kent Bye: Yeah, just arriving here on the campus for Meta Connect. And there's a lot of VR content creators who were not invited this year. So it seems like they're much more focused on AI and wearables this year. And we'll see how that plays out in the keynote. That'll be later tonight. But just curious to hear any of your reflections in terms of the momentum that you see in the broader ecosystem of what's happening in the context of these kind of AI-driven smart glasses and Meta's own focus of putting a little bit more emphasis on the next generation of AR in the long distance, but also the short term with these kind of AI glasses and wearables.

[00:17:02.765] Anshel Sag: Yeah, I think they're kind of victims of their own success because the smart glasses sold so well and everybody took notice that it kind of became a very obvious pivot point for the company. I would say everybody was chasing them last year and now you're starting to see more of the competition come out. And I think that they want to continue down this path because they're seeing some traction. And the truth is, I think moving forward, every AI company is aware that some form of smart glasses is one of the best ways to interface with AI because you have a camera on your face. You have microphones, usually around five. And then you have speakers. So you have all these different sensors and outputs that directly connect to your brain and your mind, but also give AI your own perspective, that I just think that it's going to be really difficult for a lot of AI companies that want to get into hardware and into wearables to really deliver a better experience than something in glasses. And that's why I think Meta's push around these glasses is almost more about AI than it is about owning the smart glasses category. And obviously there's a ramp from smart glasses all the way up to AR, which also needs to occur and can't happen overnight. So they're going to have to continue to iterate. But I think smart glasses are here to stay. And they're kind of the lowest common denominator for what an AI experience can be that also maybe leverages some AR technologies. And the truth is, I think so much of what they're developing for these smart glasses and AI will translate to AR and other XR technologies. You know, like some of the speaker technology in these glasses is spatial audio, which kind of came from VR. So I just think that there's a lot of borrowing of technology across the XR spectrum leveraged and accelerated by AI. Think about all the hand tracking that happens in VR. That's all AI. And without AI, that hand tracking wouldn't be possible.
I think that we're just in a place where right now AR is the focus for a lot of reasons, but also so is AI. And the mixture and blending of AI and AR together is so strong that that kind of drives where Meta is going today.

[00:19:28.542] Kent Bye: One of the things that we haven't seen so far is any sort of broader cultural backlash against these Meta Ray-Ban smart glasses. You have people who are walking around with cameras on their faces with a company that historically has had not the best track record when it comes to privacy. Just curious to hear some of your thoughts and reflections on that because we had with Google, we had the whole Glasshole effect and a lot of backlash. That was when someone's eye was occluded in a way that you couldn't actually see them. I don't know if this is just that it's more the form factor or if people at this point have just become resigned to everything being tracked and they're not as concerned about some of the different privacy concerns. But it is interesting to me that there hasn't been any sort of broader cultural backlash. I'm just curious to hear some of your thoughts on that because it seems like that could be one thing that would provide some headwinds for this type of future product.

[00:20:17.216] Anshel Sag: Yeah, I think you're right in a lot of senses. But I would say that the form factor is so much more socially acceptable. But also, you know, they have gone to great lengths to make sure that if you're recording, the light is on so people around you are aware. And if you block the light, that the recording will not actually capture. So there's a lot of good things that they're doing. And it is true that, you know, they do have some past history with privacy issues. But I would say that I also think that it's maybe not quite at the level of saturation yet where social upheaval will occur. But I would also say that I'm seeing them in a lot of places and, like, it's been shocking to me how many of my Uber drivers have them. But it also makes sense because, like, I would want them to have those glasses instead of putting an earbud in. And yeah, I just think that like so many people who are using them gain so much from using them that they overlook some of the privacy concerns. But I think, you know, people have every right to be concerned if people are wearing cameras and they have the right to feel the way they feel. And there's definitely some people who are also fear mongering about the glasses and talking about the stickers you can buy online and stuff like that. And like they're not even aware that the light being blocked by a sticker will automatically turn off capture, which I accidentally learned by just covering it while I was holding the glasses. So I think it's always valid to have privacy concerns regardless of what the company is and to always ensure that they are protecting users' privacy to the degree that they want to be.

[00:21:52.674] Kent Bye: Now, there were some leaks ahead of Meta Connect where there were some videos that were showing what looked to be some of the early prototypes of the CTRL-labs neural interface, as well as a future version of the smart glasses that have more of a monocular overlay. And so I'm just curious if you've had a chance to see that, and any comments or anything that you can say in terms of the future directions of what might be announced and talked about more today at Meta Connect.

[00:22:19.713] Anshel Sag: Yeah, so I think they're going to be announcing that. I assume that this will be published after that point. Yeah, yeah. But yeah, I think that they're really cognizant of how much we are drowning in notifications. Because imagine you have smart glasses on with a display and people are just constantly notifying you and you don't want those notifications in your face. So they're doing a really good job of keeping those things out of the way and leveraging first-party applications and using the EMG wristband to enhance user inputs. Because the truth is you don't always want to use voice for everything. And, you know, the gestures are pretty good. It is an evolution of what they've previously shown with Orion. So yeah, it's a really cool product. It's intended to be worn all day. So you don't really, like, worry about, you know, battery life. So it's an all-day-wear kind of wristband. I think Mark was actually shown wearing it already. And yeah, it's like one of those things where you wear the wristband all day and whether you have the glasses on or not, the wristband's on you. So I asked them about the whole like, why don't you also make it a health wearable? But I think that just adds more regulatory challenges. But it sounds like it's a very rugged product that's still very comfortable. And yeah, it's cool because you can do some really interesting gestures. And while I don't know what will be shown, so I'm a little bit sensitive about the demo that I got, I do think that, you know, the choice of where they put the display is also really important because in a lot of the demos and videos I've seen, it's front and center, and that's not really how it is. It's kind of more towards the bottom of your field of view, which makes sense because if you want to converse with people, you don't want the overlay right on top of them. And yeah, I just think that they've made a lot of good decisions, but it's not perfect and no product really is.
But, you know, they've really moved themselves much closer to where Orion is. But, you know, Orion is still quite a leap from a single monocular color display. But I will say that, like, you know, Orion wasn't perfect either, and it's just a prototype. So I think we are slowly moving towards the full AR-capable future, but right now it's very much still smart glasses with a display. And I kind of liken it to like a smartwatch on your face, which is how I saw stuff like, you know, Focals by North five years ago now. And, you know, obviously that was a very different time, but, you know, we're kind of coming back to that point again. And the truth is, like, having a smartwatch on your face is cool, but you still need application developer support and you need more than just first-party apps. And I think that that'll be the biggest challenge for Meta, is like getting more developers on board and to enable these glasses with AI, but also their apps, so that it's a complete ecosystem of applications. So that way you can kind of leave your phone in your pocket and use these glasses to really experience the world while also enhancing your interface.

[00:25:25.018] Kent Bye: And they certainly needed those third-party developers within the broader VR industry, at least, in terms of helping to push the platform forward and to continue to innovate. And I've seen some of the most successful applications over the years have been by these independent third-party apps. And so do you have a sense that they're going to be opening up some of these different platforms to something beyond with the first-party apps that they've had so far?

[00:25:44.985] Anshel Sag: I really hope so. I kind of got the inkling that they would, but I'm not for certain. I feel like they need to, and if they don't, they should do it fairly soon. But I just think that there's going to be a point where the core capabilities and functions are going to run out of their usefulness and people are going to want to, like, start wanting more of their applications and more of their capabilities in the glasses, whether they have a display or not. And I think the display kind of drives that demand higher because people want to see visual data. They want to see visual representations. And if you want to keep people's smartphones in their pockets, you're going to have to drive the capabilities into the glasses. And that's all developers. Yeah.

[00:26:31.994] Kent Bye: Yeah, well, I know last year at Meta Connect, I was not on the list to be able to see the Orion demo, and I'm not in the foreseeable future to be able to see their latest demos with the EMG wristband. So to me, this EMG wristband from CTRL-labs that's now a part of Meta seems to be like the most exciting technological innovation for where the future of human-computer interaction could go in terms of, like, these more gesture-based and maybe, like, micro-movements in your fingers. You know, talking to Thomas Reardon a number of years ago, he was talking about how you could essentially just type on a table and type at somewhere between 90 to 150 words per minute, something that would seem to be a lot higher. But you could also just be walking around and do these different types of gestures that could be a part of like more of an augmented reality future. And so curious to hear if you could just talk a bit more around your experience of like what this felt like in terms of this EMG wristband in terms of this new way of having input into AR.

[00:27:32.492] Anshel Sag: Yeah, I mean, I do think it's a big part of what they're accomplishing here. I think, one, it's an iteration of what they had shown last year. And, you know, I think the gestures have improved. I think last generation, I really had to make much bigger gestures with my hand to make those. It was mostly finger movements, really. But I had to make bigger finger movements and more significant tap functions on my hand. And this year just felt a lot more natural. So they've definitely refined the accuracy of what they're doing. And, you know, it was a lot of swiping, some scrolling. My favorite was the knob turn. You can do a knob turn and it'll recognize it for volume, which is what everybody wants, right? So I really enjoyed that. I was not sure if I didn't get this demo, but I was under the impression that they might have a handwriting recognition, which is the one I'm a little sketchy about, but I'm not sure if they're actually demoing that now.

[00:28:29.208] Kent Bye: I think there was in the video that was leaked, there was someone that looked like they were kind of scribbling on the, like they're writing something with their hands that looked like it would be one of those handwriting demos that was in the video at least.

[00:28:38.737] Anshel Sag: Yeah, so I guess they're kind of showing it, hopefully. But yeah, the handwriting seems like a really cool one because it's like, you don't even think about it. You just write what you want to write and it gets captured. And, you know, I think that last generation, it was a very snug fit. And this year it was still a tight fit, but I didn't really notice its presence once the tightness was dialed in. But it still does need to be a fairly snug fit because of all the sensors. And also the materials were a little bit more refined. So the EMG wristband on its own is kind of like its own little, like, masterpiece. But I feel like they had also kind of refined it quite a bit already last generation and they just put a lot of finishing touches on it, and together it just seems like a really complete package. And I also think that it's going to enable them to continue to use that interface moving forward, kind of like Apple is with Vision Pro and the pinch gesture. You know, I wish there was eye tracking. You know, that would be a great thing to have. And I think it will inevitably become necessary when AR becomes a reality for calibration and authentication purposes. But right now there's no eye tracking, so you can't just look and pinch. But, you know, I really do think the EMG has really dialed in quite a bit, and it's a good user experience.

[00:29:55.135] Kent Bye: I know that if you go back to the Mother of All Demos, that demo in 1968 is incredible, where they were showing the future of video teleconferencing and the mouse. But they actually also had in that demo, if you remember, a chorded keyboard, which is the ability to have two hands and you're kind of like, of course, stenographers actually have a way that they kind of play it like they're playing a keyboard or pushing five buttons at the same time. And so I would imagine that something like that with one hand might get you so far, but it seems like having two-handedness for some of those different interactions where you need to have both of your hands, like when you're typing, you want to have the ability to do both hands. But if it's just like writing, maybe that's a way of getting around that. But oftentimes people can type a lot faster than they can write. And so do you imagine that they might have a form factor where they would require people to be wearing two of these EMG wristbands? Or do you expect that this is going to be going down a pathway of just only requiring people to have one?

[00:30:54.946] Anshel Sag: That's a really good question. I think the rational approach would be to have one in a watch and have one on a wristband that isn't a watch, if you need to. Ideally, I don't think we want to have people having to wear two wearables on top of the one that's on their face. So I think, if anything, one should be the future, but two may be necessary, like you said, for some applications. I think we might also see some other forms of wearable controllers like rings. Because, you know, I tested out the ring that Qualcomm announced at AWE, and that's a capacitive touch ring, but also something that you can use to control stuff. And I just think that maybe you'll get a combination of different types of wearables, whether it's a watch that also has some EMG functionality in the band, or it's just a pure EMG device that's only for function.

[00:31:50.410] Kent Bye: Yeah. And at AWE this year, there was a lot of focus on it. It felt like a bit of a beginning of a new cycle with smart glasses and AI. And there was a lot of focus on this combination of AI with XR, and Meta is certainly going down that path of emphasizing Meta AI and the different types of features. There's also some backlash of folks writing against AI. You know, Emily M. Bender and Alex Hanna wrote a book called The AI Con, looking at some of the different limitations of large language models in particular. And also Karen Hao wrote a whole book, Empire of AI, critiquing some of the different business practices of OpenAI. So I'm just curious to hear some of your reflections on if you do see that AI is going to be this thing that is going to really fuel this XR and these wearables, or if we're at the peak of a hype cycle where there are some real limitations of AI technologies, whether it's the hallucinations or overinflated expectations for what might be possible versus the actual reality of where we're at with AI.

[00:32:49.986] Anshel Sag: I would say it's a little bit of both. I think we are, without a doubt, in one of the most insane hype cycles we've ever seen. You know, tens of billions of dollars, hundreds of billions of dollars are being thrown at data centers for some applications that don't really need it. But I would also say, I do think AI is going to continue to be a driver for XR. And I think there's going to be continued improvement, especially as these smaller language models improve. And more agentic AI picks at the smaller models that are specific for the application that's necessary and really nails the experience in a way that massively improves your daily usage, but doesn't really necessarily always have to call to the cloud and only really uses cloud when it's necessary. Partially for privacy reasons, but also for just better user experience and cost, because the truth is spinning up a GPU in the cloud is so much more expensive than running it locally. And yeah, I just think AR and just XR in general will continue to move down the path with AI, especially when you consider that just search alone, all search is moving towards AI, whether we like it or not. And if you're trying to search anything on your glasses, that's going to be AI powered if it isn't already. So I think that you look at the big companies in the spaces that we normally touch, regardless of XR, and AI is just kind of consuming a lot of that. And I think at a certain point we won't even really consciously call it out. It'll just be like, you know, nobody really talks about the Internet. The Internet just is the Internet. I think AI is going to end up being the same way where like everything has some form of AI in it because without it, you know, it's a lot dumber and it's not as personalized. And I think the personalization of AR is so dependent on AI that I think that makes a lot of sense, too.

[00:34:44.491] Kent Bye: And as you're an analyst in the XR space, I guess AI is being, to some extent, folded into your analysis. And so when you look at the field of Google Gemini, OpenAI, you have Anthropic, and then also there's Meta AI. There's lots of different AI companies out there, different chatbots and large language models. How do you go about evaluating or comparing them? Because it's such a huge thing that it almost feels like you need a team of researchers who are trying to red team it and stress test it to really understand what it can and cannot do, or there's benchmarks that are out there that people often look to. But just curious if you have like an experiential way of testing, you know, going down the list of things that you want to do day to day, like sending text messages or just accuracy. Like, how do you get a sense of even trying to compare what's out there?

[00:35:32.699] Anshel Sag: I use it every day. That's really what it is. Like, you know, I have a folder on my other phone that's just an AI folder. And I'm constantly using Perplexity, OpenAI, Gemini, even Grok. There are some that I don't like. I don't use Claude as much. But really, I'm just constantly using them and trying to understand the limits of their capabilities and how they can be applicable. And, you know, for example, OpenAI is talking about how they're going to do a smart AI wearable and it's not going to be smart glasses, but I don't buy that. I actually think that's just a distraction from them actually doing smart glasses, because I just think that a lot of the form factors that I think make sense are going to be some form of glasses. They don't have to be. You know, we've seen some of the pins and necklaces and things like that. But I really think that the best way to understand how AI fits into XR is really just using it. Whenever Gemini comes out with a new model, let's say the Nano Banana for photo editing, I'm jumping on that day and assessing the quality and capabilities of these models by using them. And sometimes they're really impressive and other times they're very much disappointing. And I'm also working AI into my workflows as well and trying to understand where it's a good fit and where it's not. I do a lot of writing, so I actually don't use AI for writing because it's really bad. And also, I don't trust it. So I want my customers to know that it's me who's writing and not an AI because they could just do that and pay for that. So I'm really careful about the writing part of AI, but I've been using AI for other research purposes and it's been helpful. But again, I keep finding, regardless of which AI it is, I still find errors and mistakes and hallucinations. And so it's still not perfect. And, you know, that's part of my job also, just being able to assess the state of AI itself. And, you know, my favorite thing is when one of the AIs makes a mistake, I call it out in chat and then it admits it was wrong. And I'm like, yeah, I knew you were wrong, but that requires me to have the knowledge to be able to correct it. So I think that's one of the problems with having a lot of these AIs out there to the general public: not everybody's an expert to understand when the AI is wrong. And that's just going to be something that we have to accept and improve on and understand about AI, at least for now.

[00:37:59.608] Kent Bye: Yeah, I know that talking to a lot of artists, AI in a workflow is really great for concept art in early stages to get out a lot of ideas and to brainstorm. And I certainly did that this past week where I was generating 1,800 different images for some creative projects that I want to pursue at some point. Yeah, just to kind of do that creative ideation. But with Meta, they have Llama, which in the past, they've really emphasized how much they're focusing on open source. And there's also critics who are saying, how much is this actually open source, when it comes to Emily M. Bender saying, OK, if you really want open source AI, that also includes not only the weights, but also the training data and all this model card. And there's a lot of other layers of what it may mean for the threshold for true open source, in my opinion. But they have been focusing on this open model in the past with Llama, where they want to try to share it out there and build an ecosystem, as it were. And they had a whole separate event earlier this year that was focusing more on AI developers and creators. And Meta Connect is usually leading up to Christmas, where they're announcing all the wearables that they're going to be wanting to get buzz around so that when it comes to Christmas, they can start to sell these latest products. But when you look at, say, Meta AI and their strategy overall with what Meta is doing with AI, I'm just curious to hear some of your reflections in terms of where they may be going. I understand there are some recent acquisitions and this kind of whole pivot towards superintelligence and emphasis on that. So just curious to hear some of your reflections on where you see Meta AI going here in the future.

[00:39:26.548] Anshel Sag: Well, I definitely think the superintelligence thing is very much a reactionary approach to what Google is doing with Gemini or OpenAI is doing with ChatGPT. So I think that's kind of a reactionary thing. It's maybe a little bit of a pivot there. But their investments in AI have been very strong and they've done pretty well with their open source models and having some of them be adopted for use across industries. I think that the Meta approach to AI in a lot of ways from the beginning, if you think about all the models they had on phones and in XR, it was really about making sure that those models become the default for the industry, and then they can capitalize on it being performant on all kinds of hardware so that it runs well for them in their data centers, but also on their devices, and when application developers take advantage of them on hardware that runs their models. So I think there's a multifaceted approach to it. It will be interesting to see what this new superintelligence approach will do. Maybe that's what the Meta AI app's purpose was all along. Because you know how they had transitioned the Meta Ray-Bans app into the Meta AI app. So they're making themselves a one-stop shop for AI, whether it's smart glasses or other things. But yeah, I just think that they're in transition right now. And it'll be interesting to see what comes of it. But I think we're a little bit early because I feel like they're kind of in a little bit more of a reactionary phase right now with that superintelligence push and hiring all those people.

[00:41:06.920] Kent Bye: Yeah, I'm curious to hear some of your thoughts of some of the demos that you're able to see here. They have everything from Meta AI sections. They have Oakley sections with more fitness examples. They have walking tours for the Meta Ray-Ban smart glasses. They had a lot of VR demos that I got a chance to see. So just curious to hear what stuck out to you in terms of some of the demos that they're showing here.

[00:41:28.035] Anshel Sag: Well, yeah, we also had that mobile demo, and that was a Horizon Worlds demo. I thought that was interesting that they're trying to push the mobile side. Was that on the phone? I don't know if I saw that. Yeah, the phone gaming experience. I didn't get to try the Deadpool experience for VR, but... I think that it was really interesting that we got so many VR demos. I wasn't expecting to see that many considering all the sentiment and rumors and talk of what's going on today. So it's very clear that they're still in VR and wanting to continue to push that. But yeah, I think the one that really stuck with me the most was probably the Oakleys with Vanguard just because it felt like a very complete experience and focused on really sticky health and fitness, which people are continuing to focus on. And, you know, if you can make fitness and health more fun, like people are more likely to do it. And that's why, like, I became such a big fan of, you know, Supernatural. I thought that was a really good, sticky experience. And it had such a broad audience. It's not just young kids who love VR. It's anybody who's trying to get in shape and doesn't want to, you know, necessarily go into a gym, but also wants to be able to experience fun workouts. So I think that Vanguard could be the kind of smart glasses AI experience similarity there.

[00:42:49.398] Kent Bye: And because we are ahead of the keynote, there may be other things that we didn't talk around that I don't know about yet. But is there anything else that you are excited about them potentially discussing more or announcing?

[00:42:59.571] Anshel Sag: Not that I'm aware of. I think I've pretty much talked about everything that I'm aware of.

[00:43:03.874] Kent Bye: I know that I got a press release around the Hyperscape, which it looks like they showed a Hyperscape last year, which was a way of doing neural rendering of scans. It looked like you could have an ability with the Meta Quest 3 to start to scan rooms and share these neural renderings of your location or your home and then potentially have different social interactions with that. From the video or the assets they have, again, they haven't announced it formally. I'm curious to hear what Mark says about it. But it seems like they're moving towards also trying to create the ways that this kind of volumetric scanning technology can be shared in an XR context and have social dimensions around that as well.

[00:43:40.345] Anshel Sag: Yeah, and I haven't really seen anything about the Hyperscape stuff except for the press release I'm reading right now. But it is interesting because I think there's some cool ways that Hyperscape can be used to create a more personalized experience. We haven't really seen much of that yet. So I think, you know, if you can level up the personalization for people and whatever experience people are doing can be customized to that, I think that's positive. But it might be computationally also quite expensive. So it'll be interesting to see how that translates. But based on the stuff I'm seeing here in the press releases, it looks like we've covered most of the things. I did see the Blumhouse cinema thing, which I thought was a little bit interesting. I didn't do the M3GAN experience. I did the Black Phone one.

[00:44:27.910] Kent Bye: Did that use, like, photogrammetry or neural rendering in there? Or what was it like?

[00:44:31.931] Anshel Sag: You were watching like a 2D video, but it was floating in a mixed reality-ish slash VR space where some of the objects and the room you were in represented what you were seeing in the movie. So it was kind of like a mirrored experience where you kind of get a little bit more of what's happening in the movie in VR. So I thought that was interesting. I don't know what that will look like for a feature-length film, but for a couple-minute video clip, it was kind of cool.

[00:45:02.199] Kent Bye: Yeah, there's a Chris Pruett talk at GDC this year. I think at GDC, with these talks where they talk to developers, it's good to listen in to those because you get a little bit of a sneak peek for how Meta themselves are thinking around the future of XR. Because for a while they were saying, oh, there's this new demographic of folks that are going to be really interested in narrative VR games. And then they funded a lot of that. And then by the time that those were coming to market, they kind of abandoned that and went on to the next thing, which was that there's going to be more of a casual mixed reality use case where rather than gamers, more people who are not VR gaming enthusiasts, let's say, are going to be watching media content within XR. They were emphasizing how, like in the Horizon Worlds demo that they were showing, they were showing an exclusive trailer of Avatar 3D. And so they're just emphasizing these more casual media consumption aspects, which I think we've also seen from Apple. And by the way, with Apple, there were some rumors that they may be announcing an upgrade for Apple Vision Pro with an M5 chip, but that hasn't come to pass just yet. But yeah, just curious to hear your thoughts on this kind of move towards casual mixed reality media consumption and then where Meta and Apple are playing into that.

[00:46:10.990] Anshel Sag: I think it's a utility thing. I think they want people to feel like they're getting the value out of their investment. And truthfully, they're also battling for people's attention spans. And they kind of want you to consume media in the headset rather than on some other device. And that's why it's like, you know, they want you to scroll on Instagram on your headset. They want you to do other content consumption in the headset. And truthfully, you know, they are better experiences in a lot of ways than just having it on your TV, assuming you don't have a super high-end TV or a gigantic one. But, you know, I have seen my fair share of giant 100-inch virtual screens in the last, you know, six to 12 months. So that seems to be an experience that everybody wants to deliver in some fashion, and it's really about just giving people more things to see in the same amount of space. So, you know, a lot of virtual screens, a lot of bigger screens, a lot of ultra-wide screens. You know, Xreal does that too. Apple does it with the Vision Pro. So it's like everybody wants to augment your experience and enhance it in a way that you would literally not be able to do because you can't carry around a 57-inch screen with you everywhere you go. So I think that's kind of what they're trying to do is, like, really take advantage of the virtual medium. And it's such a low-hanging fruit too.

[00:47:34.659] Kent Bye: Yeah. And in the new immersive home world, they had a whole wall where they had an Instagram feed. And I believe that with the stereoscopic photos or videos that they were showing there, I was assuming that those were using AI to turn them into something with more depth perception. But it looked pretty cool, some of the different scenes, taking these 2D photos and then using AI to make them look spatial. So anyway, that was something that was also in the demo that I saw.

[00:48:00.868] Anshel Sag: Yeah. I also saw that. I did find it interesting that you couldn't really get too close to it because the quality wasn't that high, but you know, with like a Vision Pro, you can really get your face in those Apple spatial videos. So I think it really comes down to the quality of the capture, and there's a lot more room for improving capture. And truthfully, you know, it would be great if Instagram could become a medium for sharing spatial photos.

[00:48:27.657] Kent Bye: Great. And finally, what do you think the ultimate potential of XR, AI, all this kind of move towards smart glasses with AI, what do you think the ultimate potential of all these XR wearable devices might be and what it might be able to enable?

[00:48:41.403] Anshel Sag: I think it's really about giving us superpowers and kind of enabling us to be smarter, more aware and more custom to our personal needs and being able to enable us to be better versions of ourselves. That's ultimately what I think it should be, because, you know, I would love to be able to remember everybody's birthday. I would love to be able to remember everybody's name. And I think there's just room for that in a really thoughtful and careful way. Because a lot of people I talk to, they're like, I would love to have every single one of my LinkedIn contacts just be plugged into my smart glasses so I know who I'm talking to or if I should remember their name. But that's a huge privacy thing. So I think there's lots of room for improvement and lots of enhancements. And, you know, I like the idea of enabling us to just be better versions of ourselves, but not losing our humanity on the way there.

[00:49:43.021] Kent Bye: Anything else left unsaid you'd like to say to the broader XR community?

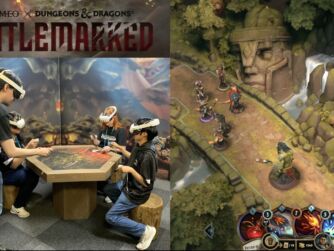

[00:49:47.066] Anshel Sag: You know, I think we're really moving in an interesting place with AI and AR, but I still think there's lots of exploration and fun to be had in VR as well. And mixed reality, I think it's a really good bridge between the two, and I hope to see more use of mixed reality because I really enjoyed the Demeo Dungeons & Dragons demo. I thought that was a lot of fun. And my first reaction was like, I want to do that with friends. So I think ultimately social experiences are a huge factor in driving us to get closer together, whether it's in AR or VR.

[00:50:21.107] Kent Bye: Awesome. Well, Anshel, thanks so much for joining me here on the podcast to share some of your thoughts on all the latest news here from Meta Connect that will be, I guess, formally announced here later today during the keynote. But we had a chance to see some early look at some of the stuff that's here. And yeah, just always appreciate some of your insights and reflections on where things are at now and where they may be headed here in the future. So thanks again for joining me here on the podcast.

[00:50:41.274] Anshel Sag: Thanks a lot for having me. Happy to be back on.

[00:50:43.953] Kent Bye: Thanks again for listening to this episode of the Voices of VR podcast. And if you enjoy the podcast, then please do spread the word, tell your friends, and consider becoming a member of the Patreon. This is a listener-supported podcast, and so I do rely upon donations from people like yourself in order to continue to bring this coverage. So you can become a member and donate today at patreon.com slash voicesofvr. Thanks for listening.