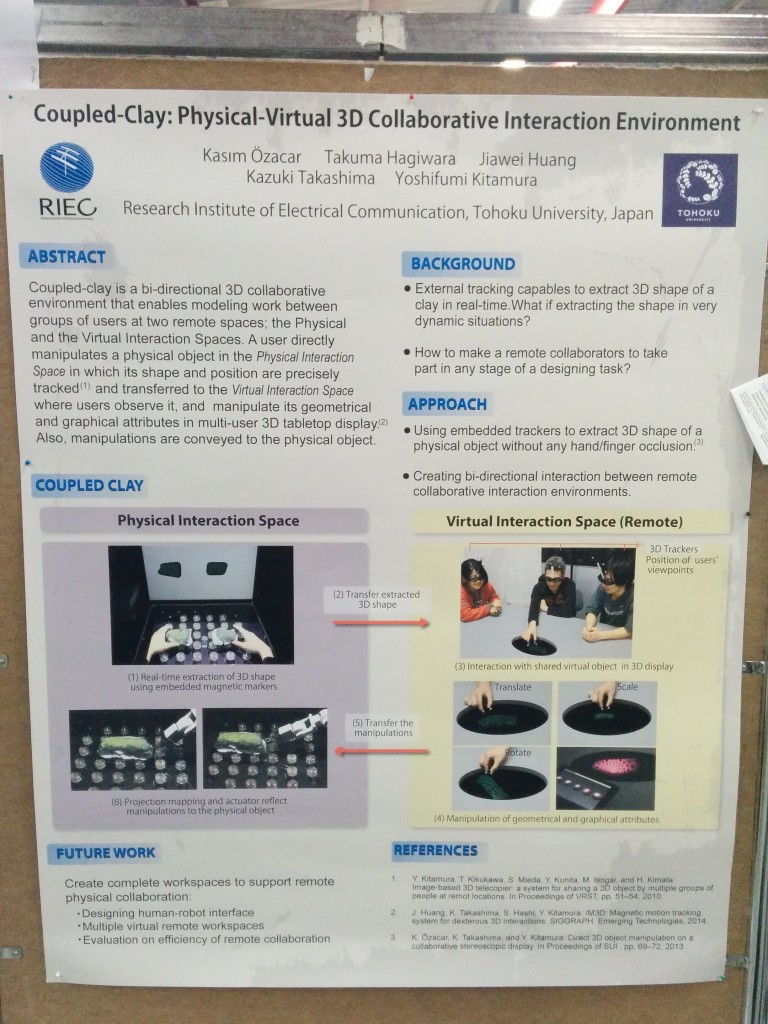

Kasim Özacar is a Ph.D. student at Tohoku University working on virtual reality. He presented a poster at IEEE VR 2015 about a Physical-Virtual 3D Collaborative Interaction Environment with Coupled Clay.

This is a really interesting mixed reality poster that embeds magnetic sensors in clay to connect VR to reality.

Theme music: “Fatality” by Tigoolio

Rough Transcript

[00:00:05.452] Kent Bye: The Voices of VR Podcast.

[00:00:11.924] Kasim Ozacar: This is Kasim. I'm from Japan, Tohoku University. We have Coupled Clay, a physical-virtual 3D collaborative interaction environment. Our system consists of two interaction workspaces. One is the physical and the other is virtual. In the physical interaction space, we can extract the 3D shape of any physical clay model while a user is manipulating it. Because we have an embedded tracking system inside the object, we can transfer this extracted 3D shape to another virtual interaction space, which is very remote to this space, in which users interact with shared virtual object in 3D display here, so that they can observe the object from their point of view, they can translate, they can scale and rotate it, which are geometrical manipulation. They can also change the graphical attributes of the object, such as changing color, changing texture. And then this manipulation can be transferred to the physical interaction space, which we have a robot arm, is a robot manipulator, and we have a projection mapping which reflects the graphical manipulation onto the object. So, the point is here, we have completely occlusion-free embedded magnetic markers, so they are not affected by users' hand occlusion. So, we can real-time track and then real-time send the 3D shape to the virtual environment.
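The virtual-to-physical half of the loop described above can be pictured as a small routing step: geometric edits go to the robot arm, graphical edits go to the projector. The following is only a rough sketch under assumptions, not the team's implementation. The names `SharedObject` and `apply_virtual_edit`, the command tuples, and the choice to keep scaling virtual-only are all hypothetical. Rotation is routed to the arm here even though the interview notes it was not yet implemented.

```python
from dataclasses import dataclass


@dataclass
class SharedObject:
    """Hypothetical shared state for the object seen in both workspaces."""
    position: tuple = (0.0, 0.0, 0.0)
    yaw_deg: float = 0.0
    scale: float = 1.0
    color: str = "gray"


def apply_virtual_edit(obj, edit):
    """Apply one edit from the virtual workspace to the shared state.

    Returns a list of (device, action, value) commands for the physical
    workspace. The devices and actions are illustrative placeholders.
    """
    kind, value = edit
    commands = []
    if kind == "translate":
        obj.position = tuple(p + d for p, d in zip(obj.position, value))
        commands.append(("robot_arm", "move_to", obj.position))
    elif kind == "rotate":
        obj.yaw_deg = (obj.yaw_deg + value) % 360
        commands.append(("robot_arm", "rotate_to", obj.yaw_deg))
    elif kind == "scale":
        # Assumption: scaling only changes the virtual view, since a
        # physical clay model cannot be resized remotely.
        obj.scale *= value
    elif kind == "color":
        obj.color = value
        commands.append(("projector", "set_color", value))
    else:
        raise ValueError(f"unknown edit kind: {kind}")
    return commands


obj = SharedObject()
print(apply_virtual_edit(obj, ("translate", (1.0, 0.0, 2.0))))
print(apply_virtual_edit(obj, ("color", "red")))
```

Keeping one shared state object and deriving device commands from it means each remote edit is applied once and then fanned out to whichever physical device can reflect it.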

[00:01:34.511] Kent Bye: I see. So there's clay with sensors in it and you're modeling the clay and then it's being transferred into a simulated virtual interaction space. And so what is the outcome or the intent to be able to have the combination of virtual and physical in this way? How would you see this being applied?

[00:01:50.721] Kasim Ozacar: Well, for example, even kind of application scenario. Let's assume that there is a car manufacturer, they have a model car, and they want to share this to a very remote clients. And they can extract the 3D shape and then allow to remote users, clients, to see the object and then allow also to manipulate them, changing color and changing the view and see the... And then also to allow them to change the attributes so that you can see that. I mean they can share their opinion with you.

[00:02:23.804] Kent Bye: So it sounds like people that are in the virtual interaction space, they can translate, scale, rotate it. Are they able to take any of those actions and put it back into the physical space? And so, you know, so yeah.

[00:02:35.793] Kasim Ozacar: Absolutely. For example, if I move the object here, then the robot arm is moved here. Actually, we have a video for this. If you rotate the object, it's not implemented yet. But if you rotate the object, the robot, they rotate the physical objects, just like you're doing.

[00:02:50.923] Kent Bye: Great, and so what's some of the reference work that you're building off on in terms of other research that you're using in order to kind of get this far?

[00:02:59.893] Kasim Ozacar: Actually, there are two separate research here, actually, partially. One is developing this one. It's our laboratory's research area, which is IM3D, Magnetic Motion Tracking System for Dexterous 3D Interactions. It's submitted in SIGGRAPH Emerging Technologies. The other one is Multi-User Supported 3D Display, which provides multiple users to see the stereoscopic image from their point of view. Even if they turn around the system, they can see, because we have a head tracking system, they still can observe the object from their point of view.
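A head-tracked stereoscopic display of the kind mentioned here needs, for each user, one virtual camera per eye placed around the tracked head position. The sketch below shows one common way to derive those two eye positions. It is an illustration under assumptions, not the lab's method: the function name `eye_positions`, the 64 mm interpupillary distance, and the right-handed, y-up coordinate frame are all hypothetical choices.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    if length == 0.0:
        raise ValueError("cannot normalize a zero-length vector")
    return tuple(x / length for x in v)


def eye_positions(head, target, ipd=0.064, up=(0.0, 1.0, 0.0)):
    """Return (left_eye, right_eye) for a tracked head looking at target.

    head and target are 3D points in meters. The eyes are offset by half
    the interpupillary distance along the viewer's right-hand direction.
    """
    forward = _normalize(_sub(target, head))
    right = _normalize(_cross(forward, up))
    half = ipd / 2.0
    left_eye = tuple(h - r * half for h, r in zip(head, right))
    right_eye = tuple(h + r * half for h, r in zip(head, right))
    return left_eye, right_eye


# One user standing one meter in front of the display center.
left, right = eye_positions(head=(0.0, 0.0, 1.0), target=(0.0, 0.0, 0.0))
print(left, right)
```

Running this once per tracked user gives each person their own pair of viewpoints, which is why the image stays correct as they walk around the display.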

[00:03:33.557] Kent Bye: Great. And so what's next for you in this line of research?

[00:03:36.618] Kasim Ozacar: For this research, I want to complete the workspace, which means designing a robot-human interface to enhance the manipulations. We can add also more virtual interaction space, which we can have more users from any place in the world, so they can have a bidirectional interaction. And the other one is to find a way how to evaluate the efficiency of this remote collaboration.

[00:03:59.963] Kent Bye: Great, and have you thought about using virtual reality head-mounted displays, or do you find that using this virtual interaction space allows for better collaboration?

[00:04:10.426] Kasim Ozacar: There is no specific reason to using this one, because we have this one, but instead we can also use HMD displays. There's no difference between them. The point is to having this kind of bidirectional remote collaboration. If we can use HMD instead, of course.

[00:04:24.330] Kent Bye: Great. And finally, what do you see as sort of like the greatest and largest implementation or use of these types of things?

[00:04:30.912] Kasim Ozacar: I got feedback from a user here. He told me that it can be used for kind of analyzing or to observing a, for example, a radioactive material which you cannot touch it. And then remote users, they may manipulate and change the attributes. It could be a very nice example for this kind of system. You have a like a radioactive material here and you have a robot and you have remote users and the remote users can manipulate it. This could be a very nice example.

[00:04:56.159] Kent Bye: OK, great. Thank you.

Kasim Ozacar: You're welcome.

Kent Bye: And thank you for listening. If you'd like to support the Voices of VR podcast, then please consider becoming a patron at patreon.com/voicesofvr.