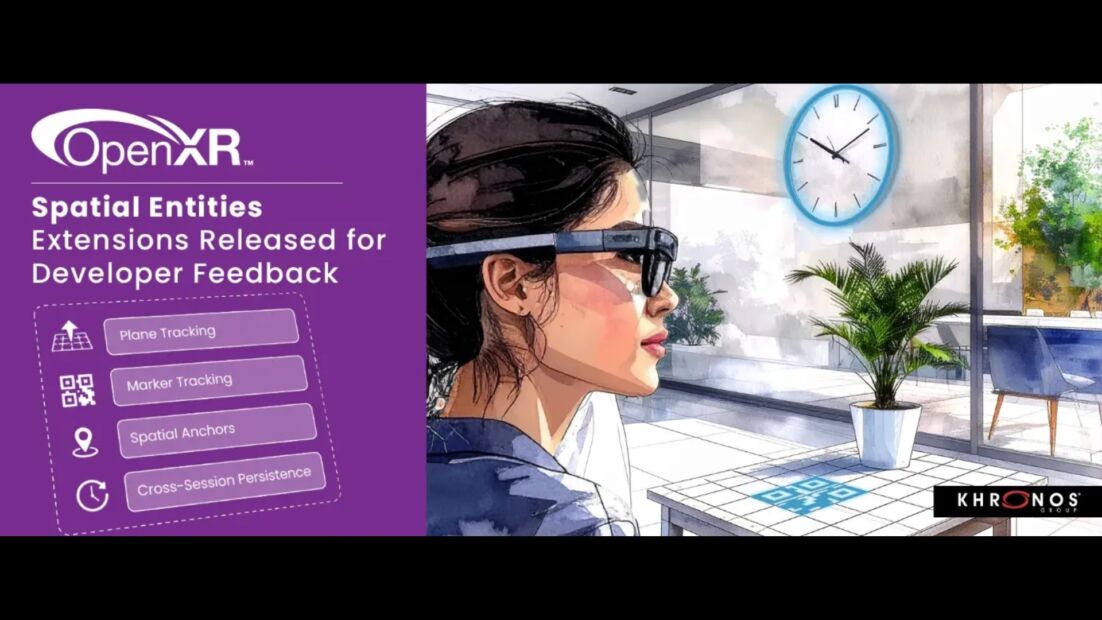

Here’s my interview with Neil Trevett, President of the Khronos Group, talking about the new OpenXR Spatial Entities extensions, which have been in development for two years with participation from companies including PICO, Collabora, Godot Engine, Google, Meta, Unity, Varjo, Microsoft, and ByteDance. You can see statements from many of these companies at the end of the blog announcement titled “OpenXR Spatial Entities Extensions Released for Developer Feedback.” My interview with Trevett was conducted on Tuesday, June 10, 2025 at Augmented World Expo in Long Beach, CA. See more context in the rough transcript below.

This is a listener-supported podcast through the Voices of VR Patreon.

Music: Fatality


Rough Transcript

[00:00:05.458] Kent Bye: The Voices of VR Podcast. Hello, my name is Kent Bye, and welcome to the Voices of VR Podcast. It's a podcast that looks at the structures and forms of immersive storytelling and the future of spatial computing. You can support the podcast at patreon.com slash voicesofvr. So continuing my coverage of AWE past and present, today's episode is an interview that I just did at AWE 2025 with Neil Trevett, the president of the Khronos Group. At every gathering like AWE, SIGGRAPH, and GDC, you know, there's a number of different events over the course of the year where Khronos will be announcing whatever is coming up next. And so they were announcing a developer preview. So it's not ready for release yet, but they're getting early feedback on this open standard, probably the first open standard for spatial computing ever, which is this thing called Spatial Entities. It's a set of extensions for OpenXR that allows developers to start to define different types of planes, like walls and tables, and then from there to be able to do detection and interaction and, you know, plane and marker tracking types of functionality. So what are the core API-level things that you want to have across all these different platforms? And so they were announcing these new Spatial Entities OpenXR extensions at Augmented World Expo 2025. And I wanted to sit down and talk to Neil because when I think about the story of the metaverse, the metaverse is still speculative. And some people say, oh, it's never going to happen.
But in fact, if you look at some of these different types of open standards that have been put out, those are the building blocks that are going to define where this industry goes: the universal building blocks and concepts and APIs that you can rely upon. They start to define the future shape of where the XR community is going, and they will be the very things that something like the metaverse may be built upon. Right now, a lot of this is still driven by enterprise types of entities, but there are actually a lot of consumer companies that are involved in these different types of discussions. And so there is a blog post called "OpenXR Spatial Entities Extensions Released for Developer Feedback" that was released on June 10th, 2025, the same day that I had this conversation with Neil. At the bottom of the blog post, there are a lot of interesting quotes from these different companies: Pico, Collabora, the Godot Engine, Google, Meta, Unity, and Varjo. So again, there's a lot of support for this kind of collaboration amongst all these different entities, where, you know, we're still kind of in this blue ocean realm where there's a lot of collaboration happening that's trying to define what's going to be the universal thing across all these different companies and entities. Noticeably, Apple's not a part of these conversations. They're not a part of the Khronos Group. They're off doing their own thing. And so this is kind of like everybody else that's not Apple collaborating together in some fashion to put together the core concepts and ideas and APIs that are going to be driving the industry forward. So it's always interesting for me, from that perspective, to hear the latest announcements and conversations that are happening.
And this is something that's been incubating and baking for a couple of years now, and now they're starting to announce it and get more developer feedback from the wider XR community. I'll put a link to this blog post, which also goes into lots of other talks that they gave at AWE, as well as other resources for looking at other OpenXR extensions. Anyway, these are the new cutting-edge things that we can expect in the future. It's still a developer preview now, but it will be launching at some point as an official extension. So that's what we're covering on today's episode of the Voices of VR podcast. This interview with Neil happened on Tuesday, June 10th, 2025 at Augmented World Expo in Long Beach, California. So with that, let's go ahead and dive right in.

[00:03:44.478] Neil Trevett: I'm Neil Trevett. My day job is at NVIDIA. I'm the president of the Khronos Group, and we are doing a bunch of open standards that are relevant to spatial computing and the XR industry.

[00:03:56.038] Kent Bye: Maybe you could give a bit more context as to your background and your journey into working in this space of XR standards.

[00:04:02.462] Neil Trevett: So I got hooked on standards back in the day when OpenGL first came out in 1992. That dates me, I know. But I got the bug on seeing what good things could happen when people work together for the common good. And I've been involved in standards ever since: OpenGL ES, WebGL, OpenXR, Vulkan, glTF. The common thread is 3D, but compute and XR are in there as well.

[00:04:31.274] Kent Bye: Well, so at gatherings like Augmented World Expo, it's an opportunity to come together and announce some new initiatives or other things. So maybe give me a bit of a sense of what's new, what's being announced from Khronos Group in terms of new emerging standards or updates on existing standards.

[00:04:44.543] Neil Trevett: Yes. So we have a number of standards that are relevant to spatial computing in general. Our newest standard isn't even out yet. It's the camera API, Kamaros, for camera modules and sensors, and that'll be coming hopefully next year. We have a bunch of compute APIs, which are great now for inferencing and machine learning. We have the 3D APIs like WebGL and Vulkan. But the ones that are most relevant are OpenXR, which is a hardware API for driving XR devices, and glTF, which is a 3D asset format for delivering 3D assets to wherever you need them. And we actually had an announcement this morning, a pretty major announcement actually, for OpenXR. It's called Spatial Entities, and it is standardizing for the first time across multiple vendors how you can scan, detect, and track your immediate user environment. It's a set of extensions, very well structured so as to be extensible. It lets you detect planes (floors and ceilings and walls and coffee tables) and track them in real time, and track markers as well. But then it also lets you place anchors in the environment that you can position virtual content relative to, very precisely. And actually, kind of the cool thing at the end of that list is that there are management APIs for managing that spatial context across different sessions. So you can power down and come back a week later and your environment is still there, and that's all managed in a standard way. A lot of XR runtimes have been doing this before, but as the story always is with open standards, the advantage, we believe, to the developer community is that for the first time we have multiple XR device vendors agreeing to provide all of that functionality and expose it in the same way. So you won't have to keep rewriting your spatial computing code as you go from device to device. And we have a lot of support in the industry. This has taken two years of work by multiple device manufacturers and runtime vendors to put together.
So we have a lot of industry buy-in. So the specifications are released today. You can go to the website and check them out. And they're going to be shipping and rolling out in actual products over the next six months, through the rest of 2025.

[00:07:27.731] Kent Bye: So just to get some clarification on what this is and what it's doing: it sounds like if you have a scan of a room, you might have a mesh, but in some ways you want to understand where a table or a chair is. And so it's like, what are the archetypal patterns that are in the space? And then if those have an entity, like if this is a chair, then it's probably going to move around and be dynamic. Or if this is a table, you can maybe assume it's going to be a little bit more static, and some mixed reality experiences may have people using a table to put virtual objects on, to make it feel like they're in the room. So it sounds like there is a bit of scanning the room and extrapolating out these deeper archetypal patterns of what these entities might be and what you can do with them. And then also with that, you have a little bit more contextual and relational understanding of a space, and of how that space may fit into some of these existing forms, so that you could create dynamic mixed reality experiences based upon whatever someone may have in their home. So that's kind of the sense that I have. I'd just love to hear if you can elaborate or clarify on that.

[00:08:36.966] Neil Trevett: Yeah, no, you said it better than I did. I mean, obviously, there's a lot more to sensing a complete user environment than just the floors and the ceilings and the planar objects. But it's the first step, and it gives you the framework for at least indoor environments. It is an extensible set of extensions. We're not committing to timelines yet, but the discussions are already starting on, well, let's track arbitrary objects, not just markers. Obviously, that's going to be essential. And then let's not just detect and track and sense planes. The next step will be sensing and forming complete meshes of the environment, which you can use however you want. So we've deliberately made the set of extensions extensible, so vendors can do that early experimentation, and then once we figure out the best way to do it, we can come together again and consolidate that into the standard as the next evolution of this set. But honestly, looking around, thinking about how we're saying this is the first open standard for spatial computing: is it really? I think it is. I mean, obviously, again, people have been doing spatial computing, but to have an open standard with widespread multi-vendor support, I think it is actually a first. So it's a good step towards more advanced functionality in the future.

[00:10:03.570] Kent Bye: So how's it being stored or anchored? Because if you're doing a lot of feature detection with computer vision, then you get a sense of a room. But if things are moving around, then how does it maintain its orientation? Or is there a differentiation between what they know is going to be anchored and kind of more immobile versus things that are moving? Just how are you saving all this metadata? And what's it relative to a room that may be changing over time?

[00:10:27.594] Neil Trevett: Yes, I mean, there are definitely limitations on this first set of version 1.0 functionality. Again, what we are shipping in this version gives you the basic framework of the environment that you're in. We're going to need object tracking, and we're going to need to capture much fuller meshes of the environment so you can do more processing. So people are going to have to build the smarter parts on top of this standardized baseline, perhaps. But as always, that's why making standards is an art and not always a science, because there are so many different ways of doing that. It would be almost impossible to standardize it today. We need to experiment and build consensus on how we would do that more advanced processing, so that everyone can agree to do it the same way. We're not quite there yet. It would be premature to try to do that, but it's getting close. And this is, again, just the first step.

[00:11:28.012] Kent Bye: Yeah, I was talking to Doug North Cook of Creature about Laser Dance, which is a mixed reality game that scans your environment and does pathfinding, where you have to go from one side of the room to the other, and then they put lasers in the room. But they're going into early access because there are some situations in some rooms where they just need a lot more data to be able to dynamically modify these experiences based upon whatever scan they have. So this feels like a similar type of thing. Are you starting with living rooms, to say, here are some basics like a couch, table, and chairs? Do you start in a specific context for this? How do you negotiate the wide variety of different types of contexts? Or is each of these different objects an extension that people can start to add in based upon whatever context they have? I'm just trying to get a sense of how you build up this repository of different spatial contexts.

[00:12:21.693] Neil Trevett: So, I mean, the way that the extensions work is you inquire whether the extensions are there, and if they are, you can enable them. And then the application, through the OpenXR API, will get a data structure that will say, I've detected all these things, with tagging so you can tell what each one is and, of course, its orientation. And it's up to the application then to take that data. But the way the API is structured, it is real time. So as you move around in the environment, the data structures around you will be updated in real time. It's limited to planes and markers right now, so again, if you want to do more, you'd have to build that yourself. But over time, we're going to have the more complex things, up to and including building full meshes in real time, so you can do any kind of analysis you want. But I think it's going to be a really interesting next couple of years as we build consensus on, okay, how are we doing those more sophisticated apps where things are moving and you're trying to navigate, and how we can do that in a common cross-platform, cross-session way. It's going to be an interesting voyage of discovery over the next couple of years. Nice.
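To make the flow Trevett describes a little more concrete (check whether an extension is present, enable it, then read back tagged entities that the runtime keeps updating), here is a minimal Python sketch. It does not call the real OpenXR API: `FakeRuntime`, `SpatialEntity`, `enable_if_present`, and the extension strings are all hypothetical stand-ins, not the names used in the Spatial Entities specification. In real OpenXR C code, the first step corresponds to enumerating instance extensions with `xrEnumerateInstanceExtensionProperties` and requesting the ones you want when creating the instance.

```python
from dataclasses import dataclass, field

# Hypothetical extension names, for illustration only (not the official strings).
PLANES_EXT = "XR_HYPOTHETICAL_spatial_planes"
MARKERS_EXT = "XR_HYPOTHETICAL_spatial_markers"


@dataclass
class SpatialEntity:
    entity_id: int
    semantic_label: str   # tag such as "floor", "wall", "table"
    position: tuple       # (x, y, z) in meters
    orientation: tuple    # quaternion (x, y, z, w)


@dataclass
class FakeRuntime:
    """Hypothetical stand-in for an XR runtime; this is not the OpenXR API."""
    available: set
    enabled: set = field(default_factory=set)
    entities: dict = field(default_factory=dict)

    def enumerate_extensions(self):
        # Report which extensions this (fake) runtime supports.
        return sorted(self.available)

    def enable(self, names):
        missing = [n for n in names if n not in self.available]
        if missing:
            raise ValueError(f"unsupported extensions: {missing}")
        self.enabled.update(names)

    def update_entity(self, entity):
        # Simulates the runtime refining its picture of the room as the user moves:
        # a newer observation of the same entity ID replaces the older one.
        self.entities[entity.entity_id] = entity

    def query_entities(self):
        if PLANES_EXT not in self.enabled:
            raise RuntimeError("plane extension not enabled")
        return list(self.entities.values())


def enable_if_present(runtime, wanted):
    """Enable only the wanted extensions the runtime reports; return what was enabled."""
    present = set(runtime.enumerate_extensions())
    chosen = [name for name in wanted if name in present]
    runtime.enable(chosen)
    return chosen


if __name__ == "__main__":
    rt = FakeRuntime(available={PLANES_EXT})
    print(enable_if_present(rt, [PLANES_EXT, MARKERS_EXT]))
    rt.update_entity(SpatialEntity(1, "table", (0.0, 0.7, -1.0), (0.0, 0.0, 0.0, 1.0)))
    rt.update_entity(SpatialEntity(1, "table", (0.02, 0.7, -1.0), (0.0, 0.0, 0.0, 1.0)))
    for e in rt.query_entities():
        print(e.semantic_label, e.position)
```

Enabling only what the runtime reports lets one code path run on a device that supports planes but not markers, which is the portability point Trevett makes; replacing an entity by ID is a simplified stand-in for the real-time updates he describes.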

[00:13:40.160] Kent Bye: So planes, does that just mean walls, floors, ceilings? Does that include tables and chairs?

[00:13:44.942] Neil Trevett: So if you get planar surfaces the device is able to detect, then you'll be able to put those into the data structure, yeah.

[00:13:52.304] Kent Bye: OK, great. And you also said that you had some updates for glTF that were being announced today?

[00:13:56.926] Neil Trevett: So no big announcements here today, but we are making good progress on turning glTF from being just an inert data format into an interactive data format. We've been talking for a couple of years now about bringing interactivity to glTF, and we're now extremely close. We're in release candidate mode, where we have a spec that's going to be rolling out, and it's a node graph that's similar to Unity or Unreal Engine. A little bit simpler, because we need it to be portable and implementable on everything down to the mobile web. So you probably wouldn't be able to do a AAA game with the node graph that we've put into glTF, but you could do gamelets. That's kind of the level that we're aiming at. We think it's well-defined enough and simple enough, albeit powerful enough, to do gamelet-type apps, and that it will be possible to get it embedded into any engines and runtimes that want to have interactivity in a consistent way. And that's always been the mantra of glTF: efficiency and consistency across platforms. So we're bringing interactivity in that same way, in the glTF spirit. You'll be able to put gamelet-level interactivity, totally encapsulated in the glTF file, that will run reliably and consistently anywhere that wants to support it.

[00:15:31.985] Kent Bye: Yeah, just to maybe elaborate on glTF with interactivity: if I upload that into a website, is the node graph Turing complete? And does that Turing-complete code get rendered down somehow into JavaScript? What's the language, or is it abstracted enough to work in every language?

[00:15:52.197] Neil Trevett: It can be implemented in any language. Just like the meshes and textures in glTF are independent of how you want to actually render them (you can use Vulkan or Metal or DirectX, it doesn't matter), you'll be able to use any underlying language to program your engine. But everything that you do in the graph has a trigger. It can be: I get close to a particular part of the asset, or I interact with or push on a particular part, push on a virtual button, for example. And then you define within the node graph what you want to happen. It can be animations. It can be transformations. It can be changing color. It can be whatever you want. So it's going to be perfect for small encapsulated interactive experiences like a car configurator. That's the perfect example: push a button and see the different colors, or get the doors to animate, that kind of thing. But again, it'll be very consistently deployable across any platform that supports glTF. And then following closely behind that, we have physics. So you'll be able to push something and it wouldn't be just programmed animation. You'll actually have a physics engine with enough data to do physical simulation. So push the pile of bricks over, and it'll fall in a physical way. And last but not least, audio is coming after physics: 3D spatial audio, again embedded in glTF. So if you take all these together, it becomes an interactive, metaverse-ready file format.
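As a rough illustration of the trigger-then-actions idea behind glTF interactivity, using Trevett's car-configurator example, here is a minimal Python sketch. The JSON layout, the op names (`set_color`, `set_door_angle`), the trigger strings, and `run_trigger` are all hypothetical and are not the actual schema of the glTF interactivity extension. The graph is kept as plain data so that any engine, written in any language, could interpret it, which mirrors the portability argument above.

```python
import json

# Hypothetical serialized graph: trigger name -> ordered list of actions.
# This layout is illustrative only, not the real glTF interactivity schema.
GRAPH_JSON = """
{
  "press:blue_button": [{"op": "set_color", "node": "body", "value": "blue"}],
  "press:door_button": [{"op": "set_door_angle", "node": "left_door", "value": 60.0}]
}
"""


def run_trigger(scene, graph, trigger):
    """Apply every action bound to `trigger`, in order. Unbound triggers do nothing."""
    for step in graph.get(trigger, []):
        node = scene[step["node"]]
        op = step["op"]
        if op == "set_color":
            node["color"] = step["value"]
        elif op == "set_door_angle":
            node["door_angle"] = step["value"]
        else:
            raise ValueError(f"unsupported op: {op}")


if __name__ == "__main__":
    graph = json.loads(GRAPH_JSON)
    scene = {"body": {"color": "red"}, "left_door": {"door_angle": 0.0}}
    run_trigger(scene, graph, "press:blue_button")
    run_trigger(scene, graph, "press:door_button")
    run_trigger(scene, graph, "press:horn")  # no bindings, so nothing changes
    print(scene)
```

Because the file carries only data and a small, closed set of operations, an engine can reject anything it does not understand, which is one plausible way a deliberately limited, "gamelet-level" graph stays implementable everywhere down to the mobile web.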

[00:17:29.761] Kent Bye: Nice. And I know that a while ago, there was some talk from Matthew Bucaneri, who was looking at Meta and OpenXR. And Khronos actually put out a statement at the time saying that you should be looking at the OpenXR implementations that are coming from the game engines, from Unity, Unreal Engine, and Godot. So there was concern about some sort of vendor lock-in with Meta's own implementations of OpenXR. So I'm just curious if you have any comments on that dust-up and any other reflections on what that was about.

[00:18:03.942] Neil Trevett: Yeah, I think it's actually resolved itself, and I give credit to Meta. There was an issue with some of their licensing terms, but they worked to resolve it. And I think they've shown through their actions that they are committed to a genuinely open ecosystem. You know, it's interesting. There are a number of examples like this. I mean, not just this kind of thing, but technical examples and deployment examples. It's a more complicated ecosystem than shipping drivers on GPUs. There are more interactions with more of the components around a complete system. The platform interactions are more complicated because your touch points and surface area are more than just a graphics API, for example. So it's not surprising to me that we're figuring out the shape of how to build the ecosystem in terms of legal and business, as well as the technical, as well as just doing the spec. The important thing is, once we do, and once the user and developer community find issues, are the issues corrected? That's the important thing. I do not believe there is ill intent. Meta have demonstrated that they're willing to fix the issues. I'm sure we'll find more, because it is a complicated task that we're all trying to do, with 20 different companies working together. It's not trivial. But so far, everyone, I think, is committed to the open ecosystem because everyone realizes it's in their own self-interest. So...

[00:19:44.409] Kent Bye: Have Google and Android XR been involved with what's happening with these open standards, with OpenXR and other Khronos standards? I know they've been implementing things like WebGPU and whatnot. But I'm just curious, because right now we're on the cusp of some new players coming onto the scene, or players that have been here before, like Google, re-entering the scene with their collaboration with Samsung as well as with Qualcomm. If you have any comments on that as we move forward with some of the new players that are coming online. And we even have Snap and Spectacles, but they've been kind of doing their own thing with Lens Studio, which is their own game engine. But they've talked about how in the long term they may want to implement things like WebXR, and maybe other standards like OpenXR. So, just if you have any comments on the new devices that are coming onto the scene and their relationship to OpenXR.

[00:20:31.909] Neil Trevett: Yeah, I can't pre-announce anything for Google. But I can say that Google have been longtime members of Khronos, very supportive of the standards that we do. And they are members, and active members, of the OpenXR and glTF working groups. So I think Android XR will continue to leverage open standards in a good way. And more generally, I see the momentum towards open standards growing over time. Niantic have announced this publicly, so I'm not outing them, but they've just joined Khronos today. I mean, they didn't do a huge announcement, but yes, their membership came through today, and they have publicly stated that they are joining Khronos to bring Gaussian splats into glTF. And that's just one example. And yes, Snap is a member of Khronos too. They do good work, and they have been very involved with glTF. And I think the currents are pushing everyone in a good direction. For a lot of the stuff that we are seeing around the show, the time is right for standardization, because it's no longer cutting-edge rocket science where your edge is figuring it out before anyone else. Not everything, but lots of things are becoming proven, and companies are going to get more benefit from doing them in a standard way than in a weird proprietary way. They're going to make better business. And I think that wavefront of standardization opportunity is broadening, like ripples in a pond. So, yes, I think it's only going to accelerate. It's good for the industry.

[00:22:13.484] Kent Bye: I saw that Apple was announcing yesterday some new stuff at WWDC, which was around WebXR. They're not using OpenXR with WebXR, but they also are starting to push forward WebGPU over WebGL. So I don't know if you have any other comments on that.

[00:22:28.476] Neil Trevett: Well, WebGPU over WebGL is absolutely the right thing to do. I mean, we love WebGL. It opened the door to 3D on the web. But WebGPU is the new generation. It's not quite as pervasive as WebGL yet, but it will definitely get there, and it gives 3D developers much more capability, particularly on the compute side. So Khronos has always been consistent that we want to encourage people to move. We're going to keep WebGL going, probably for decades, because it isn't going anywhere. But we're very supportive and encouraging of people moving to WebGPU. As soon as the breadth of platforms they need for their business is supported, yeah, they should be moving to WebGPU. It is the right way. You know, Khronos's focus is at the native level. Obviously, it's W3C that does the web stack. WebGL was kind of an anomaly in that Khronos did it, but it all worked out in the end. That first round was probably more 3D than web, so it didn't hurt. But WebGPU at W3C is the right place, and Khronos is doing everything we can to support it at the native level. They're using things like SPIR-V as well as Vulkan. And historically, it's actually interesting, putting on my 3D hat: there was this one brief golden period where we had a native API, OpenGL ES 2.0, that was literally on every device in the known universe. Apple, Windows, everywhere. And that gave WebGL its opportunity. It was possible to do a web binding into that one API, and bam, you got 3D on the web everywhere. And that was, I think, enabling, because it simplified the problem enough to let it happen. But now, of course, we're in a more complicated world. We have three main APIs: Metal, DX12, and Vulkan. So WebGPU had to be architected to cope with multiple backends. And they did a good job. So it's the world we live in.

[00:24:34.568] Kent Bye: We're here at AWE. I see there's a booth and a number of sessions. Anything else that's worth mentioning in terms of what's new at Khronos and some of the work that you're doing here with the wider XR community?

[00:24:46.243] Neil Trevett: Well, you know, I think the big trend here that I'm getting, and I'm interested whether you see the same thing, is something I think we talked about at CES: the rise of the smart glasses. It's becoming even more noticeable than it was at CES, and it was pretty noticeable there. AR, particularly in a socially acceptable form factor, is going to be, I think, where XR truly goes into the mainstream. That's actually the tagline on... Oh, I shouldn't say the Wi-Fi password.

[00:25:17.466] Kent Bye: It's fine. By the time people are listening to this, it will all be over.

[00:25:21.368] Neil Trevett: But going mainstream is kind of the tagline for the show, and I think it's right. VR will still be awesome, but compared to the potential of bringing people into their environment with AR, with lightweight devices, VR is obviously a smaller market. And you see the hardware, you see the applications, you see the AI that's taking advantage of being in the environment, understanding more about the environment and more about the end user, supported by appropriate open standards. Now you can see all the pieces coming together.

[00:25:58.257] Kent Bye: Yeah, it definitely feels like a new cycle, with the culmination of all these XR technologies coming into smart glasses and AI coming in at the same time. And I'm also seeing a lot of robotics here on the floor. So we're expanding out from head-mounted and body wearables into external embodiments of AI, alongside the AI in the glasses that's more virtual, doing computer vision and other things. And also digital twins, the blending and blurring of what's virtual and what's real, and extended embodiments into robotics and AI. So all those things feel like convergence points as they come together, with smart glasses taking off and some of the biggest players saying that the best interface for AI is going to be through these XR wearable devices. So yeah, those are certainly some trends that I'm seeing as well.

[00:26:46.348] Neil Trevett: Yeah. It turns out AI and AR are really made for each other.

[00:26:50.430] Kent Bye: And finally, what do you think the ultimate potential of all these spatial computing technologies and standards might be and what they might be able to enable?

[00:26:58.315] Neil Trevett: Well, I think we're not quite there yet, but you can see it coming over the horizon. I think it will largely replace the mobile phone for when people are in an environment that they care about, either inside or outside. With geospatial positioning, immediate environment scanning and understanding, and the context-aware processing and assistance that agentic AI can bring, it is going to be a bit like science fiction, where you're going to have assistance and context in a transparent, invisible, but very easily accessible form. Very human-friendly interfaces are going to transform how we interact with our compute resources and our compute devices. It's going to be much more natural and useful. And I hope positive. It'll bring people together, I hope, rather than push them apart. So we'll see.

[00:28:02.619] Kent Bye: Anything else left unsaid you'd like to say to the broader immersive community?

[00:28:06.801] Neil Trevett: AWE is awesome. Come here next year. It's a real community and it's fun. And you actually do learn a lot. It's a cool conference.

[00:28:17.785] Kent Bye: Yeah, it's always a great place to be. If you skip out on CES, then you end up seeing a lot of the big, major players coming here to AWE. It's a great time to check out the variety of different headsets from across the industry. And the community also comes together, and it's a great place to meet and network and tap into the zeitgeist of what the trends are. So it's a great place to come and tune in. I always appreciate bumping into you and hearing what the Khronos Group is doing, because I feel like there's a story of immersive and spatial computing that has been laid out through the standards that have been developed over the years. So it's always great to hear from the standards perspective. For me, that's a really great indication of the deeper trends for where things are going in the future. So I always appreciate hearing the latest updates to get a bit of a peek into where it's all going. So thanks again for joining me here on the podcast.

[00:29:04.082] Neil Trevett: Yeah, I think you're right. That's actually quite an insightful thing. I hadn't quite thought of it that way before: companies working together in a substantial way on standards is a good litmus test. It's a good indicator that people are serious about this whole industry. It's not a passing fad, because standards are a long-term investment.

[00:29:22.771] Kent Bye: Yeah, and not only that, but it tells a story. It's a deeper story. By looking at the standards, you can start to unpack the deeper story of where the technology is now and where it's going to go in the future, based upon the new affordances made possible by everyone collaborating on these specific standards. So I've always appreciated how Khronos is telling a larger story over these last 11 years that I've been covering it, and even before that, tracing the evolution of the technology. It's always really insightful to hear from you the latest things that these major companies have agreed upon. So, yeah, thanks again for taking the time to help spell it all out.

[00:29:57.081] Neil Trevett: And it's always a pleasure to see you too, Kent, really. Let's meet again before the next AWE.

[00:30:02.926] Kent Bye: Sounds good.

[00:30:03.366] Neil Trevett: Thanks.

[00:30:04.844] Kent Bye: Thanks again for listening to this episode of the Voices of VR podcast. And if you enjoy the podcast, then please do spread the word, tell your friends, and consider becoming a member of the Patreon. This is a listener-supported podcast, and so I do rely upon donations from people like yourself in order to continue to bring this coverage. So you can become a member and donate today at patreon.com slash voicesofvr. Thanks for listening.